So much to test, so little time? Here’s how to create a software testing risk matrix for maximum results.

Testing software can feel overwhelming when you are getting started. One resource you can turn to is the software testing wheel that we came up with at Abstracta, based on the ISO 25010 standard for software product quality. It explains the different quality factors and how to test them. But you will soon realize how many things should be tested, and how finite your time and resources are.

That’s when you have to apply the Pareto principle: which 20% of the things you could test will deliver 80% of the value of testing? To put it differently, take a risk-based approach and choose the tasks that mitigate the highest risks first. In this post, I’ll show you an activity for doing this analysis with a risk matrix for software testing.

Risk Matrices

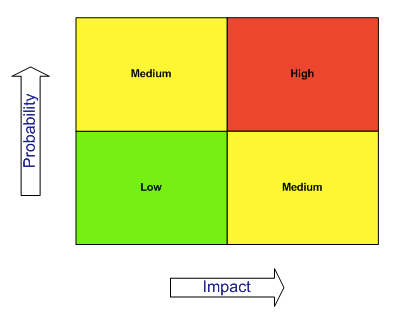

Risk is composed of two factors: the probability of something happening and the (negative) business impact that it would have. So, if we draw it in a matrix, we will be able to distinguish zones according to risk, where the extremes will be:

- Very likely, high impact: We must test it!

- Unlikely, high impact: We should test it.

- Very likely, low impact: If there is time, we could test it.

- Unlikely, low impact: If we want to throw money down the drain, we’ll test this. That is, the test is too expensive for the value it provides. So, we won’t test it.
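The four extremes above can be sketched as a small lookup. This is only a minimal illustration, assuming each item has already been judged as likely or unlikely and as high or low impact; the names `Priority` and `classify` are hypothetical and not part of any tool.

```python
from enum import Enum


class Priority(Enum):
    """The four extremes of the risk matrix described above."""
    MUST = "We must test it"
    SHOULD = "We should test it"
    COULD = "If there is time, we could test it"
    WONT = "We won't test it"


def classify(likely: bool, high_impact: bool) -> Priority:
    """Map a (probability, impact) judgment to one of the four extremes."""
    if high_impact:
        return Priority.MUST if likely else Priority.SHOULD
    return Priority.COULD if likely else Priority.WONT


# Example: a failure that is unlikely but would hurt the business a lot.
print(classify(likely=False, high_impact=True).value)
```

In practice, probability and impact are rarely binary, so a real matrix would likely use more levels per axis.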

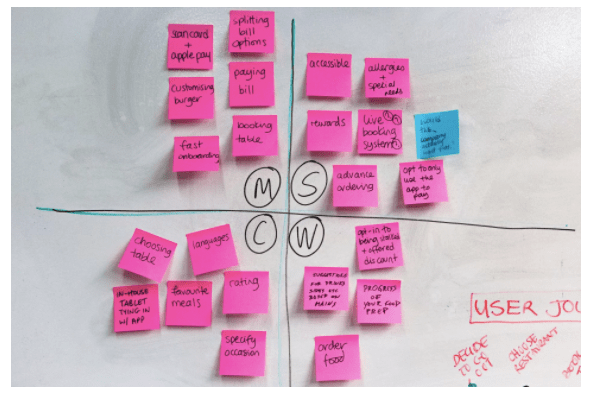

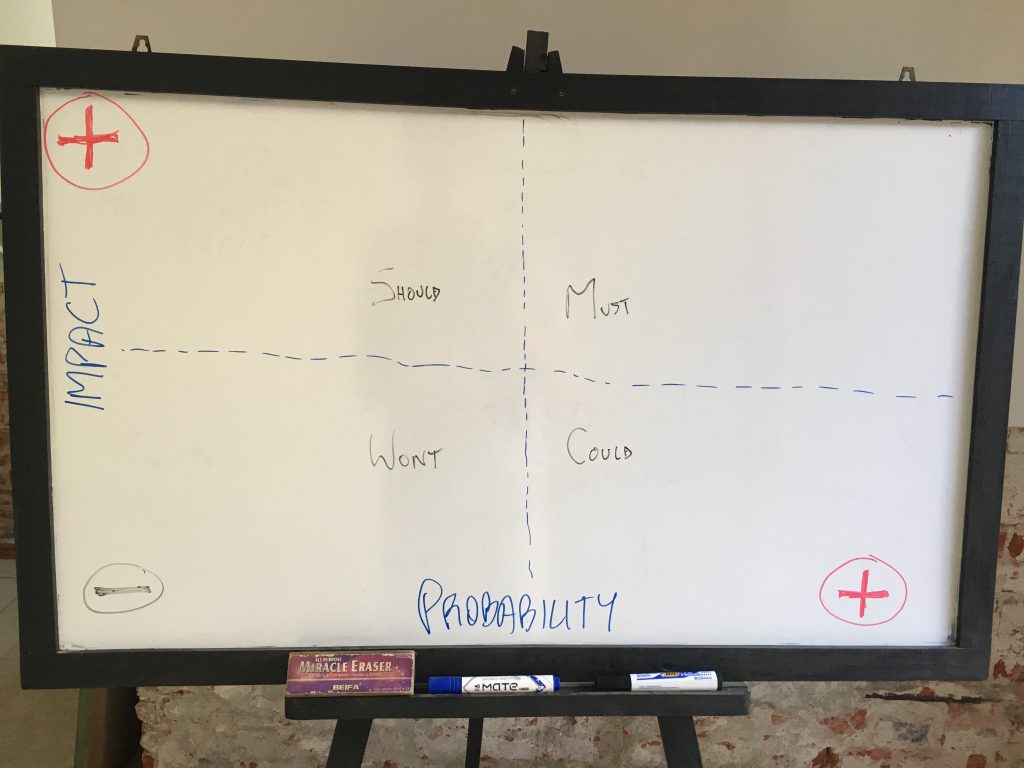

This is associated with the MoSCoW method: Must (high probability and impact), Should (high probability, medium impact), Could (medium probability, low impact), Won’t (low probability and impact). The following image shows a matrix for conducting a risk analysis with this method (and no, it is not in the same order as the previous matrix):

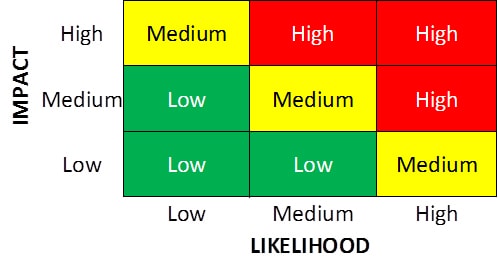

You can also go for a more refined version of the matrix:

How to Make Your Very Own Software Testing Risk Matrix

Here are the steps to make your own software testing risk matrix in order to lay out a solid testing plan:

#1 – Think about the factors that affect the probability of an incident or bug appearing.

For example:

- The complexity of the solution

- Dependence on external systems

#2 – Think about the factors that generate a negative impact on the business, in case the functionality has problems.

For example:

- Functionalities that operate with sensitive data

- Most used features

#3 – Then, apply this method in different ways, depending on whether you are deciding which testing techniques to apply, which functionalities to test, and so on.

For example, you can place the different functionalities in the quadrants, or place test types such as “security tests”, “performance tests”, etc. It can also be used to decide which features need which types of tests. Another example is using it to define how much time to devote to exploratory testing for each functionality.
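As a rough sketch of how the three steps could be combined, the snippet below scores a few functionalities using the example factors from steps #1 and #2 and ranks them by risk. Everything specific here is an assumption for illustration: the 1 to 3 scale, the averaging of factors, the use of probability times impact as the risk score, and the feature names.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    # Probability factors (step #1), each on an assumed 1..3 scale.
    complexity: int
    external_deps: int
    # Impact factors (step #2), each on an assumed 1..3 scale.
    sensitive_data: int
    usage: int

    @property
    def probability(self) -> float:
        return (self.complexity + self.external_deps) / 2

    @property
    def impact(self) -> float:
        return (self.sensitive_data + self.usage) / 2

    @property
    def risk(self) -> float:
        # Assumption: risk is scored as probability times impact.
        return self.probability * self.impact


# Hypothetical functionalities with made-up scores.
features = [
    Feature("export report", complexity=2, external_deps=1, sensitive_data=1, usage=1),
    Feature("checkout", complexity=3, external_deps=3, sensitive_data=3, usage=3),
    Feature("profile page", complexity=1, external_deps=1, sensitive_data=2, usage=2),
]

# Highest risk first: these are the candidates to test first.
ranked = sorted(features, key=lambda f: f.risk, reverse=True)
for f in ranked:
    print(f"{f.name}: probability={f.probability}, impact={f.impact}, risk={f.risk}")
```

The same ranking could be used to split exploratory testing time across functionalities, giving more time to the items at the top of the list.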

Here is an example of how we like to set up our matrix at Abstracta, in which the quadrants are according to risk, and we incorporate the MoSCoW technique:

Something we have found interesting is that the Definition of Done (DoD) can be derived from this risk matrix, with different DoDs according to the criticality of the user story or functionality. For stories labeled category 3 (the “Coulds”), the DoD may define certain types of tests, how much automation coverage is required, etc. For an item categorized as a 1 (“Must”), there will be a different DoD, with associated tasks that are more demanding in terms of quality control.
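One way to picture a DoD per category is a simple mapping from risk category to checklist. This is a hypothetical sketch: categories 1 (“Must”) and 3 (“Could”) come from the text above, while categories 2 and 4 and every checklist item are assumptions made up for illustration.

```python
# Hypothetical DoD checklists keyed by risk category.
# 1 = Must, 3 = Could (from the text); 2 = Should and 4 = Won't are assumed.
DOD_BY_CATEGORY = {
    1: [
        "unit tests written",
        "automated regression tests added",
        "exploratory testing session done",
        "performance check done",
    ],
    2: [
        "unit tests written",
        "automated regression tests added",
        "exploratory testing session done",
    ],
    3: [
        "unit tests written",
        "short exploratory testing session done",
    ],
    4: [],
}


def definition_of_done(category: int) -> list[str]:
    """Return the DoD checklist for a story's risk category."""
    if category not in DOD_BY_CATEGORY:
        raise ValueError(f"unknown risk category: {category}")
    return DOD_BY_CATEGORY[category]


for item in definition_of_done(1):
    print("-", item)
```

The point of the structure is only that a category 1 story carries a more demanding checklist than a category 3 story; the actual items would come from each team.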

This technique can also be very useful for a retrospective, focusing on quality tasks.

Have you used a software testing risk matrix, or the similar yet different requirements traceability matrix, before?

Federico Toledo, Chief Quality Officer at Abstracta
