How to boost your software’s performance with benchmark software testing? Find out in this guide, hand-in-hand with Abstracta experts!

When software performance can make or break user satisfaction, how do we know our applications are truly up to par? Benchmark software testing offers a way forward.

By providing a structured approach to evaluate and compare software performance against predefined standards, benchmark testing helps us stay on top of our game.

In this article, we’ll dive into the heart of benchmark software testing. We’ll explore its goals, methods, key metrics, and the challenges you might face. Plus, we’ll look at how it fits into the software development lifecycle and its impact on user experience.

Looking to optimize your software’s performance? Explore our Performance Testing Services!

What Is Benchmark Software Testing?

Benchmark software testing is a method to evaluate a software application’s performance against predefined standards. It helps us understand how well our software performs under various conditions. This is crucial for identifying performance bottlenecks and boosting our software’s performance to meet industry standards.

It is a subtype of performance testing, involving the comparison of our software’s performance against a set of benchmarks. These benchmarks serve as a reference point, allowing us to measure various performance metrics such as response time, throughput, and resource utilization.
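To make the first two metrics concrete, here is a minimal Python sketch that summarizes raw per-request latencies from a single test run. It assumes you already have a list of response times in seconds and the wall-clock duration of the run. The helper names `percentile` and `summarize_run`, and the choice of nearest-rank percentiles, are illustrative and do not come from any specific tool. Resource utilization usually comes from monitoring tools rather than from the load generator, so it is left out here.

```python
import math
from statistics import mean


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize_run(latencies_s: list[float], wall_time_s: float) -> dict[str, float]:
    """Summarize one benchmark run from per-request latencies (in seconds)."""
    return {
        "requests": len(latencies_s),
        "mean_ms": mean(latencies_s) * 1000,
        "p50_ms": percentile(latencies_s, 50) * 1000,
        "p95_ms": percentile(latencies_s, 95) * 1000,
        # Throughput: completed requests per second of wall-clock time.
        "throughput_rps": len(latencies_s) / wall_time_s,
    }


# Hypothetical run: 18 fast requests and 2 slow ones over 2 seconds.
run = summarize_run([0.1] * 18 + [1.0] * 2, wall_time_s=2.0)
print(run)
```

In this run the mean is about 190 ms and the median is 100 ms, while the p95 is 1,000 ms. The average hides the fact that one request in ten took a full second, which is why percentiles are often a better basis for benchmarks than averages.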

By performing benchmark testing, you can identify areas for improvement and optimize your software’s performance.

Objectives of Benchmark Software Testing

Here are the key objectives we focus on at Abstracta:

- Measure Software Performance: Accurately assess our software’s performance under various conditions, helping us understand its capabilities and limitations.

- Identify Areas for Improvement: Pinpoint specific performance bottlenecks and inefficiencies, allowing us to optimize the software to make it faster and more efficient.

- Meet User Expectations: Enhance the software to deliver a seamless and satisfying user experience, which is essential for keeping users engaged.

- Enhance User Satisfaction: Boost overall user satisfaction by providing a reliable and efficient software solution, leading to positive feedback and continued use.

- Maintain Competitive Edge: Stay ahead in the market by continuously optimizing our software’s performance, helping us remain a top choice for users and outperform competitors.

Types of Benchmark Tests

Different performance needs require distinct types of benchmark tests. Below, you can find some of the most widely used:

- Application Benchmarks: Focus on evaluating the performance of a specific application or module. These are useful to assess efficiency at a micro level.

- System Benchmarks: Broader tests that examine how well the entire system performs. This includes aspects like CPU, memory, storage area networks, and specific hardware components.

- Synthetic Benchmarks: Simulate workloads through predefined scripts to test individual components or subsystems under controlled conditions. These are particularly useful when real-world usage data is unavailable.

- Real-World Benchmarks: Use actual usage patterns and data to replicate production behavior. This type delivers high relevance and accuracy.

- Infrastructure Benchmarks: Assess the performance of underlying network components, databases, and processing infrastructure. These tests help identify whether technical limitations in the architecture could impact system responsiveness and scalability.

- Platform Benchmarks: Focus on how the software performs on a specific operating system or platform configuration, helping to confirm that it runs efficiently in the selected environment.
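Synthetic benchmarks depend on a predefined script that produces the same controlled workload every time. The minimal Python sketch below generates a reproducible mix of user actions from a fixed seed. The action names and weights in `WORKLOAD_MIX` are hypothetical placeholders; in practice they would come from your test plan.

```python
import random
from collections import Counter

# Hypothetical workload mix: action name -> relative weight.
WORKLOAD_MIX = {"browse_catalog": 70, "search": 20, "checkout": 10}


def synthetic_workload(n_actions: int, seed: int = 42) -> list[str]:
    """Return a reproducible sequence of actions drawn from WORKLOAD_MIX.

    A fixed seed means every run replays exactly the same sequence, which
    keeps a synthetic benchmark comparable across runs.
    """
    rng = random.Random(seed)  # private generator, unaffected by other uses of `random`
    actions = list(WORKLOAD_MIX)
    weights = list(WORKLOAD_MIX.values())
    return rng.choices(actions, weights=weights, k=n_actions)


if __name__ == "__main__":
    script = synthetic_workload(1_000)
    print(Counter(script))
```

Each action in the generated script would then be mapped to a concrete request against the system under test.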

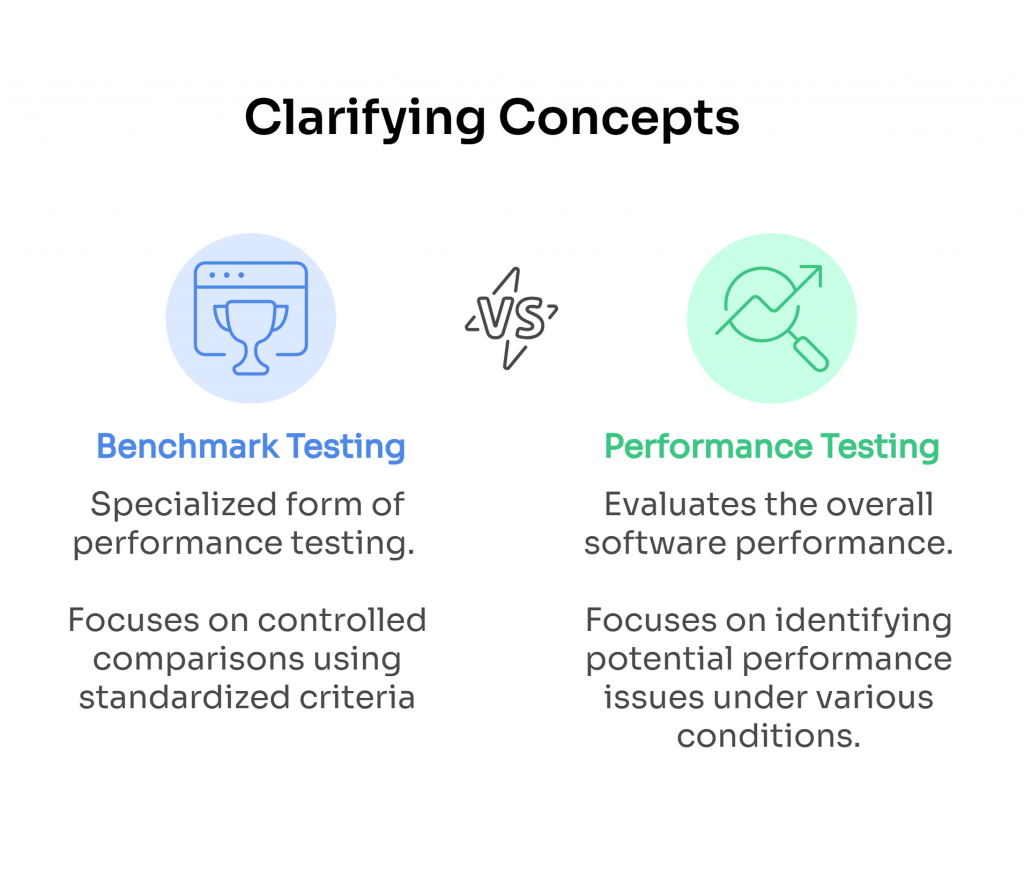

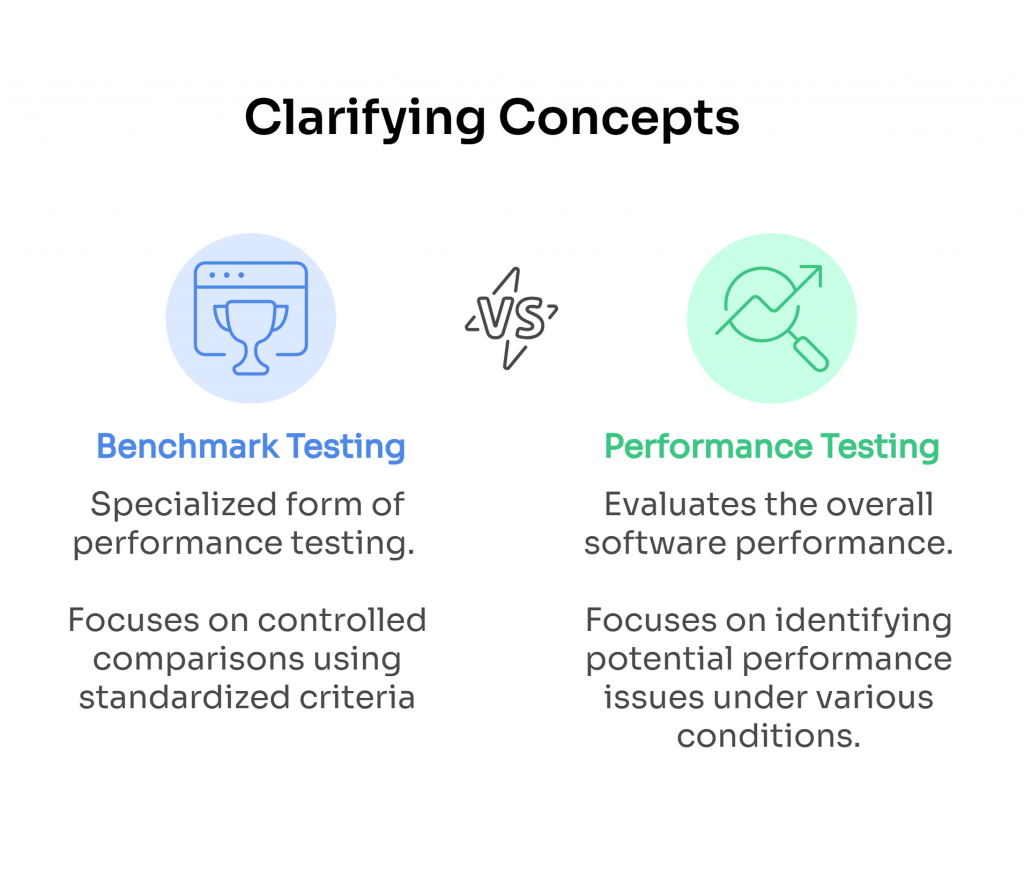

What’s the Difference Between Benchmark and Performance Testing?

It is important to emphasize that benchmark testing is a specialized form of performance testing centered on comparison against established standards. This comparison shows where the software stands relative to industry standards or competitor products. It serves as a reference point for assessing quality and efficiency and provides insight into the software’s competitive standing.

On the other hand, performance testing encompasses a wide array of tests aimed at evaluating the overall performance of software under various conditions and identifying potential performance issues. Performance testing includes load, stress, endurance testing, and more. Each type targets different performance aspects, from handling peak loads to sustaining long-term usage.

In contrast to the benchmark types described in the previous section, these are broader in scope. While benchmark tests focus on controlled comparisons using standardized criteria, performance tests are designed to expose system behavior under diverse, and often extreme, real-world conditions.

This broader approach in performance testing provides a detailed view of how the software operates in different scenarios and helps prepare it to handle real-world usage effectively.

Common Challenges

While selecting benchmarks is essential, implementing benchmark software testing comes with its own challenges. These obstacles can undermine the effectiveness of the testing process if we don’t address them properly.

Selecting Appropriate Benchmarks

Choosing benchmarks that accurately reflect the software’s performance requirements can be difficult. The benchmarks must be relevant and aligned with the specific goals of the software.

Interpreting Results

Analyzing benchmark test results to identify performance bottlenecks requires a deep understanding of the data. Misinterpretation can lead to incorrect conclusions and ineffective optimizations. That’s why it’s essential to interpret benchmark test results with context in mind, leveraging visualization and monitoring tools to extract actionable insights.

Integrating Testing

Incorporating benchmark testing into the entire testing process without disrupting the development workflow is a significant challenge. It requires careful planning and coordination among different teams.

Test Environment Setup

Creating a controlled test environment that accurately simulates real-world scenarios is crucial for obtaining reliable results. This involves configuring hardware, software, and network settings to match production conditions.
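A practical way to keep environments consistent is to record an environment fingerprint with every run and to avoid comparing runs whose fingerprints differ. The minimal Python sketch below assumes that Python version, OS, OS release, architecture, and CPU count are the attributes you care about. A real setup would also track hardware models, configuration values, and network settings.

```python
import os
import platform


def environment_fingerprint() -> dict[str, str]:
    """Collect a few attributes that commonly affect benchmark results."""
    return {
        "python": platform.python_version(),
        "os": platform.system(),
        "os_release": platform.release(),
        "machine": platform.machine(),
        "cpu_count": str(os.cpu_count()),
    }


def fingerprint_mismatches(a: dict[str, str], b: dict[str, str]) -> dict[str, tuple]:
    """Return every key whose value differs between two fingerprints."""
    keys = a.keys() | b.keys()
    return {k: (a.get(k), b.get(k)) for k in sorted(keys) if a.get(k) != b.get(k)}


if __name__ == "__main__":
    reference = environment_fingerprint()  # e.g. stored alongside a previous run's results
    diff = fingerprint_mismatches(reference, environment_fingerprint())
    print("comparable" if not diff else f"environment changed: {diff}")
```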

Test Data Management

Validating the availability and accuracy of test data for benchmark tests is essential. Inaccurate or incomplete test data can skew results and lead to incorrect assessments of software performance.

Each of these challenges can be addressed through a sound test strategy and a structured benchmark testing process. Next, we break down the method we use at Abstracta to overcome them effectively.

Step-by-Step: The Benchmark Testing Method

At Abstracta, we follow a structured approach designed to capture accurate and actionable insights. It addresses the most common challenges in benchmark testing through a series of steps that build upon each other to deliver a comprehensive evaluation of software performance.

Main Steps

1. Define the performance metrics and benchmarks.

We start by identifying key performance indicators (KPIs) relevant to the software. These KPIs could include response time, throughput, and resource utilization. Setting specific, measurable benchmarks for each KPI helps us establish clear performance goals.
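Writing each benchmark down as data, rather than leaving it in a document, makes the targets unambiguous and machine-checkable. The following is a minimal Python sketch. The KPI names and target values are illustrative assumptions, not recommended thresholds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkCriterion:
    kpi: str
    target: float
    unit: str
    higher_is_better: bool  # True for throughput, False for latency or resource usage

    def is_met(self, measured: float) -> bool:
        """Check a measured value against this criterion's target."""
        if self.higher_is_better:
            return measured >= self.target
        return measured <= self.target


# Illustrative targets only; real values come from requirements and baselines.
CRITERIA = [
    BenchmarkCriterion("p95_response_time", 500.0, "ms", higher_is_better=False),
    BenchmarkCriterion("throughput", 200.0, "req/s", higher_is_better=True),
    BenchmarkCriterion("cpu_utilization", 75.0, "%", higher_is_better=False),
]
```

Recording the direction of "better" next to each target means every later comparison uses a single rule and does not depend on how each metric happens to be named.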

2. Create a benchmark test plan.

Next, we document the plan: which scenarios will be used to evaluate performance, the test data each scenario requires, and the criteria for success and failure. A well-defined test plan covers every performance aspect targeted by the KPIs.

3. Outline the test scenarios.

Designing realistic test scenarios is essential for the effectiveness of benchmark testing. Each scenario should align with the software’s intended use and reflect a variety of operating conditions. This includes variations in load, user behavior, and infrastructure setups. A well-thought-out scenario adds depth to the evaluation process by representing real-world performance expectations.

4. Execute the benchmark tests in a controlled test environment.

We set up a test environment that closely replicates production conditions. Running the benchmark tests according to the test plan in this controlled setting allows us to capture reliable data. Consistent test conditions are essential for obtaining accurate results.
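At the code level, consistent test conditions usually mean discarding warm-up iterations (cold caches, connection pools, lazy initialization) and repeating the measurement often enough to see its spread. Below is a minimal harness sketch in Python built on `time.perf_counter`. The `warmup` and `repetitions` defaults are arbitrary assumptions, and `workload` stands in for whatever scenario the test plan defines.

```python
import time
from statistics import median, stdev
from typing import Callable


def run_benchmark(
    workload: Callable[[], object], warmup: int = 3, repetitions: int = 10
) -> dict[str, float]:
    """Time `workload` repeatedly after a warm-up phase and report the spread."""
    if repetitions < 2:
        raise ValueError("need at least 2 repetitions to estimate spread")
    for _ in range(warmup):
        workload()  # results discarded: warm-up only

    durations = []
    for _ in range(repetitions):
        start = time.perf_counter()
        workload()
        durations.append(time.perf_counter() - start)

    return {
        "median_s": median(durations),
        "min_s": min(durations),
        "max_s": max(durations),
        "stdev_s": stdev(durations),
    }


if __name__ == "__main__":
    print(run_benchmark(lambda: sorted(range(100_000, 0, -1))))
```

A large standard deviation relative to the median suggests the environment is not yet stable enough to produce results you can compare.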

5. Collect the benchmark test results.

During the execution phase, we gather data from the tests, including performance metrics and any anomalies. Using automated tools for data collection helps us capture precise information, which is essential for a thorough analysis.

6. Analyze the data to identify performance bottlenecks.

Once we collect the data, we compare it against the predefined benchmarks. This step involves identifying areas where performance falls short of expectations and using diagnostic tools to pinpoint the root causes of these issues. A detailed analysis reveals the underlying problems affecting performance.
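The comparison in this step can be automated. The minimal, self-contained Python sketch below checks measured KPIs against their targets and reports each shortfall along with how far off it is. The metric names, targets, and measured values are made up for illustration.

```python
def find_shortfalls(
    measured: dict[str, float],
    targets: dict[str, tuple[float, bool]],
) -> list[str]:
    """Compare measured KPIs with targets.

    `targets` maps KPI name -> (target value, higher_is_better); targets are
    assumed to be non-zero. Returns one line for every KPI that misses its
    target or was not measured at all.
    """
    report = []
    for kpi, (target, higher_is_better) in sorted(targets.items()):
        if kpi not in measured:
            report.append(f"{kpi}: no measurement collected")
            continue
        value = measured[kpi]
        met = value >= target if higher_is_better else value <= target
        if not met:
            gap_pct = abs(value - target) / target * 100
            report.append(f"{kpi}: measured {value:g}, target {target:g} ({gap_pct:.1f}% off)")
    return report


targets = {"p95_ms": (500.0, False), "throughput_rps": (200.0, True), "cpu_pct": (75.0, False)}
measured = {"p95_ms": 640.0, "throughput_rps": 215.0}
for line in find_shortfalls(measured, targets):
    print(line)
# cpu_pct: no measurement collected
# p95_ms: measured 640, target 500 (28.0% off)
```

Reporting missing measurements as failures, rather than silently skipping them, prevents a broken monitoring setup from looking like a passing run.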

7. Implement improvements based on the benchmark testing results.

Finally, we develop a plan to address the identified performance bottlenecks. This may involve making changes to the software or infrastructure. After implementing these improvements, we re-test to confirm that the issues have been resolved and that performance has improved.
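When re-testing, it helps to separate real improvement from run-to-run noise. The minimal sketch below assumes you have several p95 measurements (in ms) from repeated runs before and after the change. The 5% noise threshold is a placeholder that you would calibrate from your own run-to-run variation.

```python
from statistics import median


def compare_runs(before_ms: list[float], after_ms: list[float], noise_pct: float = 5.0) -> str:
    """Classify the change in a lower-is-better metric such as p95 latency."""
    # Medians of repeated runs are less sensitive to a single outlier run than means.
    b, a = median(before_ms), median(after_ms)
    change_pct = (a - b) / b * 100  # negative means faster
    if change_pct <= -noise_pct:
        return f"improved by {-change_pct:.1f}%"
    if change_pct >= noise_pct:
        return f"regressed by {change_pct:.1f}%"
    return f"no significant change ({change_pct:+.1f}%)"


print(compare_runs([400, 410, 420], [300, 310, 320]))  # improved by 24.4%
```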

Understanding the context in which we apply benchmarks is vital for effective testing. By following this structured approach, we can build software that meets performance standards and delivers a seamless user experience.

Components of Benchmark Testing

To obtain reliable results, following the steps above is not enough on its own: each benchmark also relies on several interconnected components, and every one of them plays a specific role in the quality of the testing process. These are the most critical:

- Test Environment: A stable and consistent test environment that closely mirrors production is essential for accurate results. Hardware, operating systems, and configurations should replicate real-world usage to reflect true performance.

- Test Data: Carefully prepared data sets that simulate expected user behavior and interactions. This supports reliable test execution and enables meaningful outcomes.

- Performance Metrics: Metrics like response time, throughput, and resource utilization guide the analysis. These metrics need to align with your business objectives and reflect key factors such as network performance to offer a complete picture.

- Benchmark Criteria: Predefined performance goals based on standards, internal baselines, or competitor comparisons. These are essential for consistent evaluation.

- Execution Plan: A strategy that defines how to execute benchmark tests, when to run them, and under what conditions, helping to maintain consistency and comparability.

- Analysis Tools: Diagnostic and monitoring tools used during the analysis phase to interpret results, detect issues, and prioritize improvements.

Key Metrics and KPIs in Benchmark Testing

To implement the method effectively, we focus on a core set of metrics and KPIs. As mentioned earlier, these include response time, throughput, and resource utilization.

By tracking these performance metrics, we can gain insights into our software’s performance and identify areas for optimization. These metrics are key to understanding how our software performs under different conditions and for making data-driven decisions to enhance its performance.

Selecting the Right Benchmarks

Choosing the right benchmarks is critical to effective testing, as it helps us to validate whether our testing is meaningful and actionable. This involves defining clear benchmark criteria and using benchmark testing frameworks to guide the testing process.

On this path, at Abstracta, we consider factors such as the software’s purpose, user expectations, and industry standards to select benchmarks that truly reflect real-world usage and deliver insights that drive meaningful improvements.

Once the right benchmarks are in place, the next question is where benchmark testing fits within the development process.

Role in the Software Development Lifecycle

At Abstracta, we integrate testing at all stages, from development to deployment. This helps the software meet performance standards throughout its lifecycle.

Benchmark Testing in Each SDLC Stage

Benchmark testing can be integrated throughout the entire software development lifecycle to optimize performance at every stage. This approach aligns well with Agile methodologies, which emphasize continuous testing and iterative development.

Here’s how we incorporate benchmark testing in each phase:

- Requirements Phase: Define performance expectations and benchmarks. Establish clear performance goals and criteria that the software must meet. This sets the foundation for all subsequent testing activities and aligns with business objectives.

- Design Phase: Incorporate performance considerations into the software design. Make architectural and design choices that support the defined performance benchmarks. This includes selecting appropriate technologies and designing for scalability and efficiency. Agile practices encourage collaborative design sessions to address performance early on.

- Development Phase: Perform benchmark testing on individual software components to verify whether they meet the performance criteria. This helps identify and address performance issues early in the development process. Agile sprints allow for continuous integration and testing, making it easier to catch regressions as soon as they are introduced.

- Integration Phase: Evaluate the interaction between different modules and their combined performance. Benchmark testing during the integration phase helps detect bottlenecks that might not appear during isolated component testing.

- Analysis Phase: After component and integration testing, we enter the analysis phase to evaluate collected benchmark data in depth. This allows us to identify bottlenecks, unexpected behaviors, or degradations. The findings here guide final tuning before moving into production.

- Deployment Phase: Validate performance in the production environment. Before fully deploying the software, we conduct benchmark tests in a staging environment that closely mirrors the production setup. This helps in identifying any last-minute performance issues that might arise in the live environment. Agile practices often include automated deployment pipelines, which can incorporate performance testing as a final check.

- Maintenance Phase: Continuously monitor and optimize software performance. After deployment, we keep an eye on the software’s performance in the production environment. Regular benchmark tests help in identifying performance degradation over time and enable us to make necessary optimizations. Agile’s iterative nature means that performance improvements can be planned and executed in future sprints.
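For the regular benchmark runs in the maintenance phase, one simple way to flag degradation is to compare each new result with the median of the last few runs rather than only with the previous run, which may itself be an outlier. The following is a minimal sketch. The window size and the 10% tolerance are assumptions that you would tune for your system.

```python
from statistics import median


def detect_degradation(
    history_ms: list[float], latest_ms: float, window: int = 5, tolerance_pct: float = 10.0
) -> bool:
    """Return True if `latest_ms` exceeds the recent median by more than tolerance_pct.

    `history_ms` holds previous results of a lower-is-better metric, oldest first.
    """
    recent = history_ms[-window:]
    if not recent:
        return False  # nothing to compare against yet
    reference = median(recent)
    return latest_ms > reference * (1 + tolerance_pct / 100)


history = [200, 205, 198, 202, 210, 199]  # hypothetical p95 values from past runs, in ms
print(detect_degradation(history, 230))  # True: well above the recent median of 202 ms
```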

On the whole, we conduct comprehensive benchmark tests to evaluate system performance throughout the development process. We rigorously test the entire system to verify it meets the performance benchmarks. This includes load testing, stress testing, and other performance-related tests.

Community and Open Source Contributions

The open-source community offers valuable tools and resources for benchmark testing. Tools like Apache JMeter and Gatling are widely used and continuously improved by the community. They provide robust and flexible solutions for a variety of testing needs, making them invaluable assets in our testing toolkit.

At Abstracta, we invest heavily in R&D and have launched open-source performance testing solutions such as JMeter DSL and several JMeter plugins. We have also built assisted migrators that help teams move away from legacy tools like LoadRunner and adopt new ones faster.

By participating in the open-source community, we stay up-to-date with the latest advancements in benchmark testing. Engaging with the community allows us to share knowledge, learn from others, and contribute to the ongoing development of these tools. This collaboration enhances our testing capabilities and helps us stay at the forefront of industry best practices.

FAQs about Benchmark Software Testing

What Is Software Benchmarking?

Software benchmarking is the process of comparing the performance, functionality, or capabilities of software applications against industry standards, competitors, or internal benchmarks. It helps organizations identify areas for improvement, validate software quality, and make informed decisions during development.

What Is Benchmark Testing in Software Testing?

It is a method used to measure and evaluate the performance of software against predefined standards or other systems. This type of testing identifies how well an application performs under specific conditions, measured through metrics such as response time, throughput, or resource usage, enabling teams to optimize and improve its efficiency.

What Is an Example of a Benchmark Test?

An example of a benchmark test could be measuring the response time of a web application under a simulated load of 1,000 concurrent users. The results are then compared against industry standards or competitor systems to verify whether the application meets performance expectations.
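The shape of this example can be sketched with Python’s `asyncio`: launch N concurrent “users”, time each request, and summarize the results. Here `simulated_request` only sleeps for a random delay and is a hypothetical stand-in for a real HTTP call. The user count, delay range, and seed are also assumptions. For a real test at this scale, a dedicated tool such as Apache JMeter or Gatling is the more usual choice.

```python
import asyncio
import math
import random
import time


async def simulated_request(rng: random.Random) -> None:
    """Hypothetical placeholder for a real call to the system under test."""
    await asyncio.sleep(rng.uniform(0.01, 0.05))


async def one_user(rng: random.Random) -> float:
    """Issue one request and return its response time in seconds."""
    start = time.perf_counter()
    await simulated_request(rng)
    return time.perf_counter() - start


async def load_test(concurrent_users: int, seed: int = 1) -> list[float]:
    rng = random.Random(seed)
    # gather() schedules every user coroutine at once on a single event loop.
    return await asyncio.gather(*(one_user(rng) for _ in range(concurrent_users)))


if __name__ == "__main__":
    latencies = sorted(asyncio.run(load_test(1_000)))
    p95 = latencies[math.ceil(95 * len(latencies) / 100) - 1]  # nearest-rank p95
    print(f"{len(latencies)} requests, p95 = {p95 * 1000:.1f} ms")
```

The resulting p95 would then be compared against the chosen industry or competitor figure.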

What Are the Best Practices for Benchmark Testing?

Here are some key practices to follow for effective benchmark testing:

- Define clear objectives: Identify the goals and performance metrics you want to evaluate.

- Use automated performance testing tools: Leverage tools to achieve consistency and scalability.

- Simulate real-world conditions: Create test scenarios that replicate actual user behavior and workloads.

- Regularly update benchmarks: Adapt benchmarks to reflect changes in standards, competitors, or application requirements.

- Analyze results thoroughly: Look for trends and pinpoint performance bottlenecks to focus your optimization efforts.

- Document findings: Keep detailed records of the testing process and results for better collaboration and decision-making.

Why Is Benchmark Testing Important?

Because it reveals performance gaps, highlights bottlenecks, and drives performance optimization efforts, enabling systems to align with standards and deliver a better user experience. It also provides actionable insights to help teams make informed decisions about system improvements.

What Is the Difference Between Baseline Testing and Benchmark Testing?

Baseline testing focuses on capturing the initial performance metrics of a system to establish a reference point for future evaluations. Benchmark testing compares system performance against predefined standards or other systems, providing insights into competitiveness and efficiency. Both are essential for enhancing software quality in different ways.
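The distinction becomes clear with numbers: the same measurement is related to two different reference points. The following is a minimal sketch in which all values are hypothetical.

```python
def compare_to_references(current_ms: float, baseline_ms: float, benchmark_ms: float) -> dict[str, float]:
    """Relate one lower-is-better measurement to a baseline and to a benchmark.

    baseline_ms: this system's own earlier result (baseline testing).
    benchmark_ms: an external target such as a standard or competitor figure (benchmark testing).
    """
    return {
        # Positive means slower than our own starting point.
        "vs_baseline_pct": (current_ms - baseline_ms) / baseline_ms * 100,
        # Positive means slower than the external benchmark.
        "vs_benchmark_pct": (current_ms - benchmark_ms) / benchmark_ms * 100,
    }


print(compare_to_references(current_ms=360.0, baseline_ms=450.0, benchmark_ms=300.0))
```

In this case the system is 20% faster than its own baseline but still 20% slower than the benchmark. This is why the two kinds of testing answer different questions.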

How We Can Help You

With over 16 years of experience and a global presence, Abstracta is a leading technology solutions company with offices in the United States, Chile, Colombia, and Uruguay. We specialize in software development, AI-driven solutions, and end-to-end software testing services.

Our expertise spans industries. We believe that strong alliances propel us further and help us enhance our clients’ software. That’s why we’ve built robust partnerships with industry leaders like Microsoft, Datadog, Tricentis, Perforce BlazeMeter, and Sauce Labs to provide the latest in cutting-edge technology.

Our holistic approach enables us to support you across the entire software development lifecycle.

Contact us and embrace cost-effectiveness through our Performance Testing Services!

Abstracta Team
