Teams shifting from generative AI to agentic AI systems face new responsibilities, decisions, and forms of coordination. This article distills the patterns leaders keep returning to.

Large engineering teams are reaching a point where traditional QA simply can’t keep up. Growing dependence on complex integrations makes it clear that manual routines and isolated automation are no longer enough. Leaders need systems that help the team understand what’s happening, anticipate what’s coming, and act with confidence.

In many organizations, the shift begins when AI stops being a set of disconnected prompts and starts supporting entire workflows. Teams start experimenting with agents that can observe, interpret, and execute tasks inside defined boundaries.

These agents work alongside people, helping them handle the parts of QA that demand more speed, consistency, and context awareness than any team can sustain on its own.

This evolution introduces decisions that fall directly on engineering leadership. It raises questions about how much context these systems need, how humans supervise their behavior, and how much autonomy is appropriate inside a high-stakes delivery pipeline.

In this article, we look at the ideas that engineering leaders are prioritizing as they scale QA with AI, including:

- Differences between Generative AI and Agentic AI

- The pressure behind scaling QA in enterprise environments

- Where generic AI falls short in delivery workflows

- The shift toward agentic systems

- The leadership responsibilities that emerge in this transition

- The 10 lessons leaders rely on when adopting AI at scale

If your team is navigating this shift and needs both custom solutions and support to reduce the Quality Intelligence gap, we can help you adopt AI in a responsible and grounded way.

Understanding the Shift: From Generative AI to Agentic AI

Before looking at how agents take part in QA workflows, it helps to outline what distinguishes generative models from agentic systems and how each contributes to software delivery.

What Is Generative AI?

Generative AI supports tasks that require producing new content based on patterns learned from large datasets. These models respond to prompts by generating text, code, summaries, test ideas, or variations that help teams explore possibilities, document reasoning, and accelerate early-stage work. They are useful when the goal is to create options, refine thinking or draft material that can later be validated or integrated into broader workflows.

What Is Agentic AI?

Agentic AI is an approach where AI agents combine conversational abilities with the capacity to act within real workflows. AI agents operate with evolving context, follow structured instructions, integrate with engineering tools, update their internal state as conditions shift, and execute tasks within boundaries defined by teams. Their role expands as they demonstrate consistent behavior in production-adjacent environments.

How They Relate Inside QA

Both generative models and agentic systems contribute to QA in complementary ways. Generative AI supports exploration and drafting, and agentic AI builds on those capabilities by working with context, interacting with tools, and participating in workflows that evolve over time. Each expands what teams can do as systems grow in complexity.

The Real Pressure Behind Scaling QA in Enterprise Environments

QA teams work on top of years of accumulated operational debt. Environments change unevenly, integrations age at different speeds, and small design decisions compound until they shape how every release behaves. This makes quality work heavier, not because people lack skill, but because the system they’re protecting often grows unpredictable.

Engineering leaders feel this weight when stability depends on knowledge scattered across teams and tools. Scaling quality becomes a way to regain control over a landscape that no longer behaves in linear ways.

The Limits of Relying on Generic AI for Complex Delivery Workflows

Some delivery workflows depend on signals and tools that don’t surface through text alone. They involve CI/CD pipelines, issue trackers, API monitors, performance dashboards, log streams and datasets that shift under real usage. These elements create a level of context that generic models cannot infer on their own.

Quality work in these environments requires:

- Awareness of how changes move through services and integrations

- Sensitivity to data that evolves under real traffic

- Understanding of dependencies that anchor each release

- Outputs that remain stable under governance and traceability

- The ability to interact reliably with core tools like Jira, CI pipelines, monitors and test runners

These conditions define how much autonomy an agent can assume inside a workflow and how teams decide which responsibilities can be safely delegated.
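One way teams make these boundaries explicit is a small policy table that states which actions an agent may take on its own, which need human approval, and which are off limits. The sketch below is a minimal illustration in Python. The action names and the `decide` helper are hypothetical and do not correspond to the API of Jira or any CI tool.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


# Hypothetical action names for illustration; not a real tool's API.
POLICY = {
    "read_logs": Decision.ALLOW,
    "run_test_suite": Decision.ALLOW,
    "comment_on_ticket": Decision.ALLOW,
    "rerun_pipeline": Decision.NEEDS_APPROVAL,
    "close_ticket": Decision.NEEDS_APPROVAL,
}


def decide(action: str) -> Decision:
    """Look up an action; anything not listed is denied by default."""
    return POLICY.get(action, Decision.DENY)


if __name__ == "__main__":
    for name in ("read_logs", "rerun_pipeline", "deploy_to_production"):
        print(name, "->", decide(name).value)
```

Denying unlisted actions by default means a new capability has to be added to the policy deliberately, which keeps delegation decisions visible to the team.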

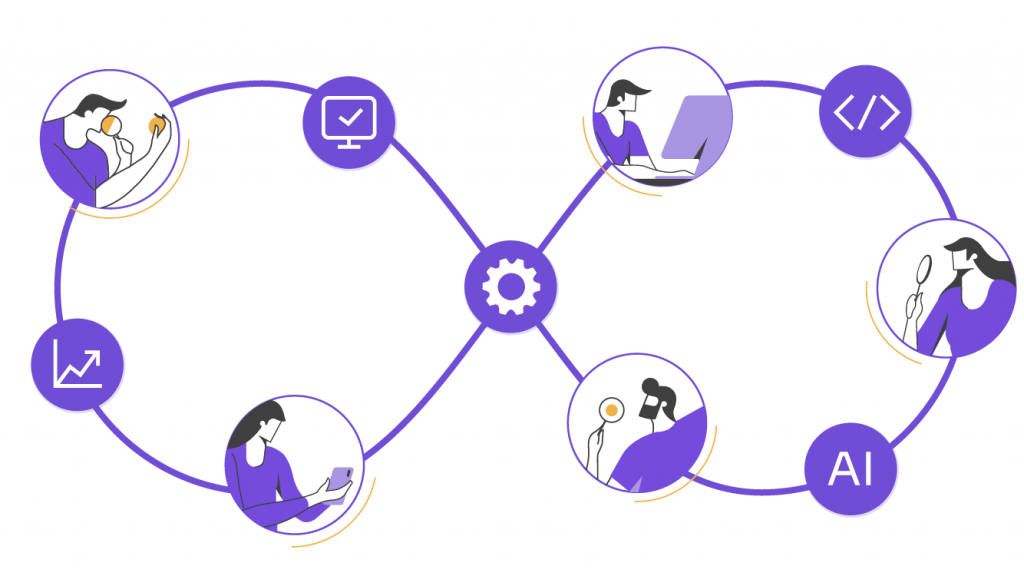

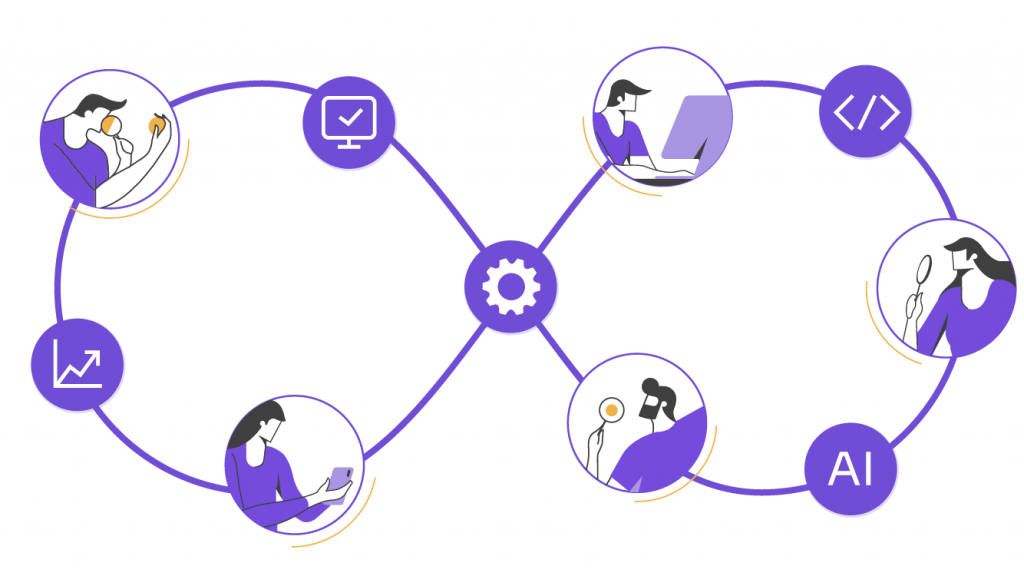

The Shift Toward Agentic Systems

Teams exploring AI inside QA often move through a gradual expansion of responsibility. Agents begin by reading signals, then support specific tasks, and eventually take part in delivery workflows as their reliability becomes evident.

| Stage | Agent Participation | Team Adjustment |
| --- | --- | --- |
| Signal Awareness | Agents read logs, traces, metrics, and test artifacts to highlight conditions worth noticing. | Teams review these signals and decide which patterns should influence QA routines. |
| Task Support | Agents perform focused tasks such as data preparation, environment checks, API probes, or early validation steps. | Teams specify boundaries, approval points, and how results feed into existing processes. |
| Workflow Integration | Agents run parts of QA workflows, monitor checkpoints, correlate failures, and prepare information for release decisions. | Teams observe reliability over time and adjust where the agent participates and how often. |
| Operational Roles | Agents maintain recurring QA activities such as tracking regressions, observing environments, and escalating when conditions drift. | Teams decide when to expand or pause the agent’s participation and document its behavior for governance and audit needs. |

Autonomy expands as teams gather evidence of consistent behavior across real workflows.
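A minimal sketch of this evidence-based progression, under stated assumptions: the stage names come from the table, while the `AgentRecord` class, the `next_stage` function, and both thresholds are hypothetical values chosen for illustration. A real team would set its own criteria.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Stage(IntEnum):
    """The four stages, in order of increasing autonomy."""
    SIGNAL_AWARENESS = 1
    TASK_SUPPORT = 2
    WORKFLOW_INTEGRATION = 3
    OPERATIONAL_ROLES = 4


@dataclass
class AgentRecord:
    stage: Stage = Stage.SIGNAL_AWARENESS
    # True means a human reviewer accepted the result of that run.
    outcomes: list[bool] = field(default_factory=list)

    def record(self, accepted: bool) -> None:
        self.outcomes.append(accepted)


# Hypothetical thresholds; assumptions for this sketch only.
MIN_RUNS = 20
MIN_ACCEPT_RATE = 0.95


def next_stage(agent: AgentRecord) -> Stage:
    """Return the stage the agent qualifies for, moving at most one step up."""
    recent = agent.outcomes[-MIN_RUNS:]
    if len(recent) < MIN_RUNS:
        return agent.stage  # not enough evidence yet
    rate = sum(recent) / len(recent)
    if rate >= MIN_ACCEPT_RATE and agent.stage < Stage.OPERATIONAL_ROLES:
        return Stage(agent.stage + 1)
    return agent.stage
```

The function only recommends a stage and never changes the record itself, so the decision to expand an agent's role stays with the people supervising it.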

The Leadership Responsibilities That Emerge in This Transition

As agents begin taking part in QA workflows, leadership becomes responsible for creating the conditions that keep these systems reliable.

This includes defining the operational context each agent needs, deciding which workflows can absorb automation, and establishing how results are reviewed before influencing release decisions.

Leaders coordinate how QA, Dev, SRE, and product teams share information, track signals, and respond to changes surfaced by the agent. They decide when to expand a system’s participation, when to pause it, and how to document its behavior for governance and audit needs.

These responsibilities shape the rhythm of adoption and give teams a clear framework for using agentic capabilities without compromising delivery stability.

The 10 Enterprise Lessons in Scaling QA with AI

As organizations integrate agentic systems into QA, certain lessons appear consistently across industries. Here are 10 lessons we’ve learned so far about moving from experimentation to sustained use.

1. Context defines everything

Teams move forward when agents receive the full picture: systems, constraints, business rules, fragile paths, and historical failures. Without real context, AI produces noise instead of insight, and nothing truly scales.

2. Planning is a leadership responsibility

Scaling QA with AI starts with choices about priorities, risk tolerance, and expected outcomes. When leadership sets direction, teams can execute with clarity instead of guessing what “AI adoption” means.

3. Impact must be measurable from day one

Engineering leaders anchor AI initiatives to outcomes that matter: fewer critical bugs, fewer hours lost to rework, more stable releases, healthier pipelines. Without impact, AI becomes an experiment that drifts.
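As a rough sketch of what measuring from day one can look like, the snippet below compares a baseline period with a pilot period and reports the relative change per metric. The metric names and all numbers are hypothetical and serve only to show the arithmetic.

```python
def percent_change(baseline: float, current: float) -> float:
    """Relative change from baseline, in percent. Negative means a reduction."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100


# Hypothetical figures for illustration only.
baseline = {"critical_bugs": 12, "rework_hours": 80, "failed_pipeline_runs": 25}
pilot = {"critical_bugs": 9, "rework_hours": 60, "failed_pipeline_runs": 20}

report = {
    name: round(percent_change(baseline[name], pilot[name]), 1)
    for name in baseline
}

if __name__ == "__main__":
    for name, change in report.items():
        print(f"{name}: {change:+.1f}%")
```

Capturing the baseline before the agent is introduced is the important part; without it, later numbers have nothing to be compared against.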

4. Human supervision protects the system

AI accelerates, but it doesn’t safeguard. Human judgment catches blind spots, misclassifications, incomplete assumptions, and subtle inconsistencies that models miss. Oversight is what sustains confidence in complex environments.

5. Agents become part of the team

Agents evolve with data, dependencies, and business logic. Treating them as static tools leads to failures. Treating them as systems that need tuning, updates, and observation keeps them reliable.

6. Adoption needs real support

Teams trust AI when someone guides them through the learning curve. Pairing, shared reviews, and open space for questions remove fear and help people understand when to rely on an agent and when to override it.

7. Communication reduces friction

Scaling QA with AI creates interdependencies across QA, Dev, SRE, and product. Regular conversations reduce misunderstandings, surface risks early, and keep priorities aligned across functions.

8. Quality architecture shapes outcomes

AI strengthens whatever structure it finds. When data flows are clear, logs are accessible, and environments are consistent, agents deliver meaningful value. When things are fragmented, AI amplifies the fragmentation.

9. Security and governance become daily practice

AI interacts with sensitive data and critical pipelines. Governance is no longer a checkbox; it’s how engineering leaders keep systems safe, maintain traceability, and avoid surprises in production.
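Traceability can start small. The sketch below shows one possible approach, assuming an in-memory, hash-chained log of agent actions: each entry stores the hash of the previous one, so an edited or reordered entry is detectable. The field names and the `append_entry` and `verify` helpers are hypothetical, and a production system would also need durable storage and access control.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list[dict], agent: str, action: str, detail: str) -> dict:
    """Append an entry whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else ""
    body = {"agent": agent, "action": action, "detail": detail, "prev": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute every hash; a changed or reordered entry breaks the chain."""
    prev_hash = ""
    for entry in log:
        body = {key: entry[key] for key in ("agent", "action", "detail", "prev")}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

For example, after two `append_entry` calls `verify(log)` returns `True`, and it returns `False` once any stored field is changed afterwards.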

10. Leadership sets the tempo

Adopting AI in QA reshapes habits, roles, and expectations. When leaders protect the process, give teams room to learn, and highlight early wins, the change becomes sustainable instead of overwhelming.

Where This Shift Leads: Abstracta Intelligence

Enterprises adopting agentic systems need platforms that operate with real context, connect to delivery tools, and produce evidence that teams can trust. That’s the purpose of Tero, our open-source framework for AI agents, focused on QA and software delivery.

Tero is the core of Abstracta Intelligence, an Abstracta solution that integrates frameworks, copilots, and context-aware agents with a proven services model. Abstracta Intelligence closes the Quality Intelligence Gap by linking delivery tools and environments (Jira, Postman, Playwright/Selenium, CI/CD pipelines, AS/400, core banking systems, etc.) with an AI-powered comprehension layer (RAG + prompts) and the knowledge of developers and quality teams.

The result? Context-aware agents that act safely and traceably inside real workflows. Pilots across banking and fintech have already shown 50% faster debugging, 30% quicker releases, and 30% productivity gains.

If your teams are exploring agentic AI, this shift is already underway.

How We Can Help You

Founded in Uruguay in 2008, Abstracta is a global leader in software quality engineering and AI transformation. We have offices in the United States, Canada, the United Kingdom, Chile, Uruguay, and Colombia, and we empower enterprises to build quality software faster and smarter. We specialize in AI-driven innovation and end-to-end software testing services.

We believe that actively building ties propels us further and helps us enhance our clients’ software. That’s why we’ve forged robust partnerships with industry leaders like Microsoft, Datadog, Tricentis, Perforce BlazeMeter, Sauce Labs, and PractiTest.

If you’re evaluating agentic AI for QA or delivery, we can help you test it safely, measure impact early, and scale only when the evidence supports it.

Follow us on LinkedIn & X to be part of our community!


Natalie Rodgers, Content Manager at Abstracta
