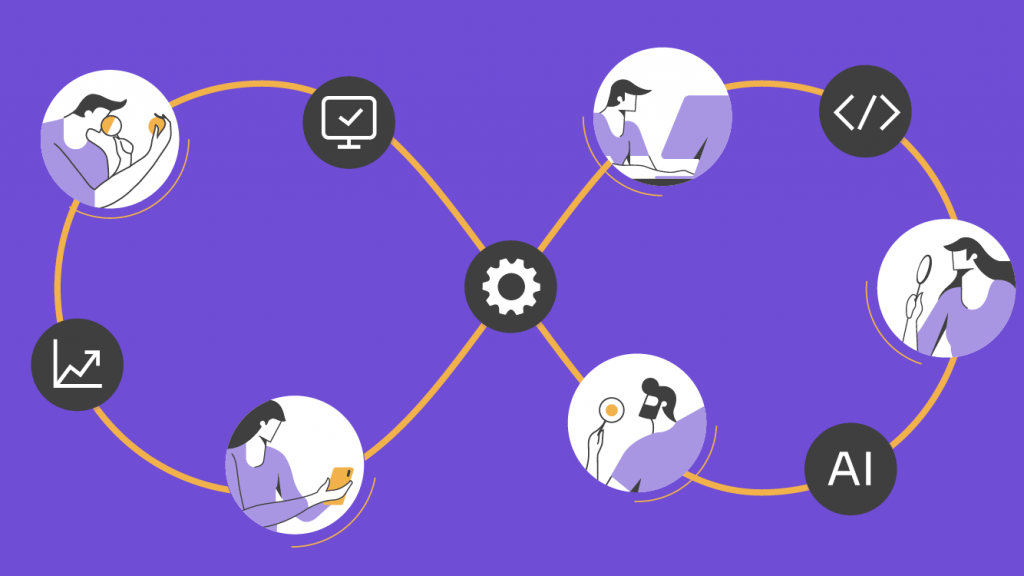

Turn alpha and beta testing into continuous, AI-powered cycles that cut defects, lift adoption, and de-risk releases for enterprise-scale products.

Releasing software at scale is a high-stakes decision. A single defect in production can cost millions, while a poorly validated release can erode user trust overnight. Understanding the differences between alpha and beta testing is essential, but enterprises should focus on integrating both as continuous, AI-powered validation cycles within agile delivery.

Alpha testing inside a controlled environment detects critical issues early. Beta testing with external users validates usability, adoption, and performance in real conditions. When orchestrated together across multiple test cycles, they become a mechanism for reducing defect costs, accelerating adoption, and protecting business credibility.

At Abstracta, we help organizations embed these cycles directly into their agile pipelines, applying AI-driven automation to detect hidden issues, process user feedback at scale, and transform software testing into a growth driver.


Definition: Alpha Testing vs Beta Testing

Alpha testing is an internal validation cycle executed by internal testers, developers, and other employees in a controlled environment to detect critical issues before external release. Beta testing is an external validation cycle where real users provide feedback on usability, performance, and adoption under real-world conditions.

Comparative Table: Alpha vs Beta Testing

Alpha and beta testing differ in scope, environment, and participants. Alpha validates stability internally, while beta validates usability externally. Both act as continuous cycles in agile delivery.

| Aspect | Alpha Testing | Beta Testing | Shared Value |
|---|---|---|---|
| Purpose | Detect critical bugs and validate stability and core functionality | Validate usability, adoption, and customer satisfaction | Both reduce release risk |
| Participants | Development team, internal testers, internal employees | External users, public beta testers, target audience | Both provide structured feedback |
| Environment | Controlled testing environment, internal infrastructure | Real-world devices and conditions | Both gather feedback from hands-on use of the product |
| Timing | Iterative internal cycles that start early and continue during sprints and post-release | Experiments with external users that begin after internal stabilization and continue pre- and post-release through feature flags, canary releases, or staged rollouts | Both integrate into the CI/CD pipeline rather than being treated as one-off phases |
| Focus | Core functionality, major bugs, and internal stability objectives | User experience, usability testing, and adoption signals | Both improve future versions |
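The Timing row mentions feature flags, canary releases, and staged rollouts as ways to run beta cycles with external users. The sketch below shows one common way a staged rollout can assign users to a beta cohort, assuming a hash-based bucketing scheme; the function name `in_rollout` and the feature key `new-checkout` are hypothetical, and feature-flag platforms may implement assignment differently.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: float) -> bool:
    """Return True if user_id falls inside the first `percent`% of buckets for `feature`.

    Hashing feature + user_id keeps each user's assignment stable across sessions,
    while giving every feature an independent cohort. (Hypothetical helper.)
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a bucket in [0, 10000).
    bucket = int(digest[:8], 16) % 10_000
    return bucket < percent * 100


# Staged rollout: widen the beta cohort step by step after each validation cycle.
users = [f"user-{i}" for i in range(1000)]
for stage in (1, 5, 25, 100):
    cohort = [u for u in users if in_rollout(u, "new-checkout", stage)]
    print(f"{stage:>3}% stage -> {len(cohort)} users")
```

Because buckets are fixed per user, everyone in the 5% cohort stays in the 25% cohort, so beta feedback accumulates from the same users as the rollout widens.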

Business Impact Through Case Examples

Alpha and beta testing apply across industries, adapting to each business context: compliance in finance, safety in healthcare, and adoption in e-commerce and technology.

- Alpha and beta cycles in banking: In a mobile banking platform, alpha testing cycles revealed encryption failures in high-value transaction flows. Fixing them before beta avoided regulatory penalties and prevented exposure of sensitive data. In the beta cycle, selected external users tested loan application journeys, reducing drop-offs and increasing completion rates by 40%.

- Alpha and beta cycles in healthcare: In a digital health solution, alpha testing identified integration defects with electronic medical records that could have disrupted physician workflows. Correcting them reduced operational risk. In beta testing with a pilot group of doctors and patients, feedback on prescription management workflows improved usability, driving a 30% increase in adoption during rollout.

- Alpha and beta cycles in e-commerce: During alpha testing of a global e-commerce platform, internal testers found pricing calculation errors under promotional campaigns. Fixing them internally prevented potential revenue leakage. Beta testing with real shoppers validated checkout flows at scale, and AI-driven analysis of user feedback highlighted cart abandonment triggers, cutting abandonment by 50%.

- Alpha and beta cycles in AI applications: For a customer service chatbot, alpha testing detected bias in AI-generated responses during controlled simulations. Addressing these flaws helped ensure compliance with corporate communication standards. In beta testing with actual users, sentiment analysis showed improved trust and satisfaction, leading to a 25% increase in successful automated resolutions.

Best Practices: Continuous Alpha and Beta Testing

Seven Practices that Drive Results

- Define scope for each cycle: Alpha validates stability and critical issues in a controlled testing environment; beta validates usability, adoption signals, and customer satisfaction with real users.

- Select testers strategically: Internal testers and development team members run alpha; external users and public beta testers validate in real-world conditions aligned with the target audience.

- Embed automation with AI: Use black box testing and white box testing, supported by tailored AI-driven agents, to scale coverage and accelerate defect detection.

- Run multiple test cycles: Repeat alpha and beta cycles across sprints to reduce release risk and deliver value earlier in the software development lifecycle.

- Gather feedback at scale: Collect structured feedback from real users and apply AI to analyze user behaviors, transforming raw data into valuable insights.

- Prioritize fixes by business impact: Focus on critical issues and core functionality that directly influence adoption, compliance, or revenue.

- Validate before release: Conduct acceptance testing and usability testing as part of the final test stage to confirm readiness for deployment.
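The "prioritize fixes by business impact" practice can be made concrete with an explicit scoring rule. This is a minimal sketch, assuming each reported issue is tagged with a severity and the business areas it touches; the weights, field names, and the `priority` function are hypothetical and stand in for whatever AI-driven or manual triage a team actually uses.

```python
from dataclasses import dataclass

# Hypothetical weights; tune them to what the business cares about most.
IMPACT_WEIGHTS = {"compliance": 5, "revenue": 4, "adoption": 3, "core_functionality": 3}
SEVERITY_WEIGHTS = {"critical": 3, "major": 2, "minor": 1}


@dataclass
class Issue:
    key: str
    cycle: str          # "alpha" or "beta"
    severity: str       # "critical", "major", or "minor"
    impacts: set[str]   # business areas the issue touches
    reports: int = 1    # testers or users who reported it


def priority(issue: Issue) -> tuple[int, int]:
    """Rank by severity x business impact first; report volume only breaks ties."""
    impact = sum(IMPACT_WEIGHTS.get(area, 0) for area in issue.impacts)
    # +1 so an issue with no tagged business impact still ranks by severity.
    return (SEVERITY_WEIGHTS[issue.severity] * (impact + 1), issue.reports)


backlog = [
    Issue("BUG-1", "alpha", "critical", {"compliance"}),
    Issue("BUG-2", "beta", "minor", {"adoption"}, reports=40),
    Issue("BUG-3", "beta", "major", {"revenue", "adoption"}, reports=6),
    Issue("BUG-4", "alpha", "minor", set()),
]

for issue in sorted(backlog, key=priority, reverse=True):
    print(issue.key, issue.cycle, priority(issue))
```

With these weights, the critical compliance defect found in alpha ranks first, and a minor beta issue reported 40 times still ranks below a major revenue defect. Using report volume only as a tiebreaker keeps loud but low-impact feedback from crowding out critical issues.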

At Abstracta, we help organizations operationalize these practices at enterprise scale. Our AI-driven testing agents cut test execution time by up to 40% while transforming user feedback into actionable insights. Take a closer look at our case studies.

AI-Driven Tools and Technologies

AI agents are transforming alpha and beta testing from manual checkpoints into continuous, data-rich validation cycles that directly influence business outcomes.

Tools like Abstracta Copilot illustrate this shift by enabling natural language interaction with systems and providing real-time visibility into software behavior. As a result, teams can simulate user behaviors at scale, accelerate bug detection, and extract insights that would otherwise remain hidden.

Key enablers for enterprise delivery include:

- AI-powered black box testing techniques: Simulate thousands of user journeys across devices to identify hidden defects that impact adoption and revenue.

- Test orchestration platforms: Coordinate distributed testing teams, enabling multiple test cycles in parallel without delaying delivery.

- AI-driven feedback analysis: Process feedback from real users to detect sentiment, usability pain points, and adoption blockers in real time.

- Monitoring in the beta testing stage: Track the performance of beta versions under real conditions to identify critical issues before final release.

Challenges and Key Considerations

Alpha and beta testing at scale introduce challenges that extend beyond QA teams and affect the business as a whole:

- Blind spots in controlled environments: Alpha cycles may validate stability but miss usability flaws that frustrate real users. Solution: Continuous monitoring with AI agents reduces this gap.

- Confidentiality risks in beta: Early exposure of future versions to external users increases the risk of leaks and reputational damage. Solution: Access controls and targeted closed beta programs help mitigate this risk.

- Feedback overload: Large volumes of user feedback slow down decision-making unless filtered by AI. Solution: Automated feedback analysis prioritizes insights that impact adoption or revenue.

- Fragmented validation workflows: Without integration into the software development lifecycle, alpha and beta initiatives run in isolation and delay releases. Solution: Embedding them into CI/CD pipelines accelerates time-to-market.

- Balancing speed and depth: Short release windows demand sharper prioritization of issues that directly impact compliance, adoption, or revenue. Solution: AI-driven triage enables business-aligned prioritization.

In a Nutshell

Alpha and beta testing are not linear checkpoints but iterative validation cycles that safeguard quality and adoption in agile delivery. Alpha builds confidence internally; beta validates the product externally with actual users. Together, they reduce risk, cut costs, and elevate customer satisfaction.

With AI, these cycles evolve into continuous intelligence: automated defect detection, real-time analysis of user behaviors, and actionable insights for decision-making.

Abstracta partners with enterprises to embed these cycles into their delivery pipelines. Through AI-driven testing and custom AI agents, we help reduce risk exposure, accelerate release cycles, and convert software quality into measurable business impact.

FAQs – Alpha Testing vs Beta Testing

What Is the Key Difference between Alpha Testing and Beta Testing?

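The key differences between alpha and beta testing come down to audience, risk tolerance, and data fidelity within the product development process. Alpha testing involves internal participants in a controlled environment, where instability is expected; beta testing involves external users under real-world conditions, producing higher-fidelity market signals. Leaders use alpha for controlled learning and beta for market-signal validation, aligning release scope, investment timing, and support readiness with measurable business outcomes.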

What Is an Example of Alpha Testing?

A practical example of alpha testing is a cross-functional team in which alpha testers exercise critical software functions on instrumented builds during time-boxed sprints. Because the builds emit real telemetry, bugs surface together with the data needed to diagnose them, accelerating triage, sharpening acceptance criteria, and reducing downstream rework and incident exposure.

What Comes First, Alpha or Beta Testing?

Alpha testing usually comes first: a beta release typically follows internal stabilization to validate adoption, scale, and support assumptions with customers. In Agile, however, the sequence is driven by business risk and learning objectives rather than prescribed by stage gates, so both kinds of testing may run in multiple short cycles.

How Does the Environment Differ between Alpha and Beta Testing?

The environments for alpha and beta testing differ in control, observability, and risk appetite. Alpha testing runs in a controlled testing environment on internal infrastructure. Beta testing takes place in production-like settings, where a curated group of users generates real interaction patterns that help prioritize usability, performance, and adoption risks.

What Are the Main Techniques Used in Alpha Testing?

Core techniques used in alpha testing include exploratory testing charters, fault injection, contract checks, and targeted automation that covers high-risk workflows and new features early. These practices create actionable evidence for design and engineering, enhancing product quality while shortening learning loops and containing operational risk.

When Should a Team Switch from Alpha to Beta Testing?

Trigger the transition when risk hotspots trend down and learning yield declines; many teams start with a closed beta to validate scale and support workflows. Over a few weeks, labels such as "alpha testing phase" and "beta testing phase" serve as planning markers, while execution itself remains iterative and data-driven.
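The transition trigger (risk hotspots trending down, learning yield declining) can be expressed as an explicit exit check. This is a minimal sketch, assuming the team records, per alpha cycle, the number of critical defects discovered and the total number of new findings; the function name, window size, and thresholds are hypothetical and should be calibrated per product.

```python
def ready_for_beta(critical_found: list[int],
                   findings: list[int],
                   window: int = 3,
                   max_latest_critical: int = 0,
                   yield_ratio: float = 0.5) -> bool:
    """Hypothetical exit check for moving from alpha to a closed beta.

    critical_found: critical defects discovered in each alpha cycle, oldest first.
    findings: all new findings (bugs, usability notes) per cycle, oldest first.
    """
    if len(critical_found) < window or len(findings) < 2:
        return False  # not enough history to judge a trend

    # Risk hotspots trending down: critical discoveries never rise within the
    # last `window` cycles, and the latest cycle is at or below the threshold.
    recent = critical_found[-window:]
    hotspots_down = all(a >= b for a, b in zip(recent, recent[1:]))
    hotspots_ok = hotspots_down and recent[-1] <= max_latest_critical

    # Learning yield declining: the latest cycle found at most `yield_ratio`
    # times as many new items as the most productive earlier cycle.
    peak = max(findings[:-1])
    yield_ok = findings[-1] <= yield_ratio * peak

    return hotspots_ok and yield_ok


print(ready_for_beta([5, 3, 1, 0], [40, 32, 18, 12]))  # True
print(ready_for_beta([5, 3, 4, 1], [40, 32, 18, 12]))  # False: criticals rose again
```

Keeping the thresholds as named parameters makes the go/no-go decision reviewable by product and engineering leads, rather than leaving it implicit in someone's judgment.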

How Do Tester Roles Vary in Each Testing Phase?

We do not treat the testing phase as a linear gate; roles flex by iteration to maximize learning, safety, and speed-to-value. Engineers and product leads co-own user acceptance testing, while customers contribute valuable feedback through structured experiments, aligning operational readiness with commercial objectives.

What Are Common Challenges Faced during Beta Testing?

Frequent challenges in beta testing include inconsistent participant engagement, sparse telemetry, misaligned incentives between product, support, and sales, and unclear success definitions. Mitigations include eligibility criteria, incentives linked to insights, strong observability, and a clear escalation path that protects customers while still producing meaningful learning.

How Do AI Agents Influence Alpha and Beta Initiatives?

AI agents influence alpha and beta initiatives by generating test data, orchestrating environments, prioritizing anomalies, and predicting risks across validation cycles. Deployed responsibly, they reduce manual toil, accelerate insight velocity, and help leaders allocate budgets toward experiences that materially shift acquisition, retention, and expansion.

What KPIs Matter Most for Steering Alpha and Beta Efforts?

The KPIs that matter most for steering alpha and beta efforts include learning velocity, defect burn-down, time-to-mitigate, conversion effects, and support load. These metrics align experiments with business outcomes, connecting activation, retention, satisfaction, and revenue impact directly to release decisions and investment priorities.
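Two of these KPIs, defect burn-down and time-to-mitigate, can be computed directly from defect records. This is a minimal sketch, assuming each defect is stored with an opened timestamp and a mitigated timestamp (or `None` while still open); the sample data and the function names `time_to_mitigate` and `open_defects_at` are hypothetical.

```python
from datetime import datetime
from statistics import median

# Hypothetical defect records: (opened_at, mitigated_at), None while still open.
defects = [
    (datetime(2024, 5, 1, 9), datetime(2024, 5, 1, 17)),
    (datetime(2024, 5, 2, 10), datetime(2024, 5, 4, 10)),
    (datetime(2024, 5, 3, 8), None),
    (datetime(2024, 5, 3, 12), datetime(2024, 5, 3, 18)),
]


def time_to_mitigate(records):
    """Median time from opening to mitigation, over mitigated defects only."""
    durations = [closed - opened for opened, closed in records if closed is not None]
    return median(durations) if durations else None


def open_defects_at(records, moment):
    """Defects already opened and not yet mitigated at `moment` (one burn-down point)."""
    return sum(
        1 for opened, closed in records
        if opened <= moment and (closed is None or closed > moment)
    )


print(time_to_mitigate(defects))  # 8:00:00
burn_down = [open_defects_at(defects, datetime(2024, 5, d, 23, 59)) for d in range(1, 5)]
print(burn_down)  # [0, 1, 2, 1]
```

The median is used instead of the mean so that a single long-running defect does not dominate time-to-mitigate; plotting the burn-down points per cycle shows whether alpha and beta fixes are keeping pace with new findings.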

How We Can Help You

With over 17 years of experience and a global presence, Abstracta is a leading technology solutions company with offices in the United States, Chile, Colombia, and Uruguay. We specialize in software development, AI-driven innovations & copilots, and end-to-end software testing services.

We believe that strong alliances propel us further. That’s why we’ve forged robust partnerships with industry leaders like Microsoft, Datadog, Tricentis, Perforce, Sauce Labs, and PractiTest, empowering us to incorporate cutting-edge technologies.


