AI SaaS product classification criteria for banking and fintech. A practical framework focused on governance, risk, compliance, and enterprise decision-making.

Financial institutions adopt AI SaaS products across processes that involve operational risk, regulatory compliance, and institutional trust. In these environments, AI SaaS product classification criteria play a central role in guiding technology decisions before adoption and scale-up.

Clear classification gives technical, risk, and business teams a shared way to reason about how AI affects financial operations over time. This article presents classification criteria designed specifically for banking and fintech contexts, where decision quality and system reliability are critical.

Abstracta develops AI solutions for banking and fintech designed to operate reliably in complex, regulated environments. If you are exploring or scaling AI SaaS in regulated financial contexts, explore our financial software development services.

Why AI SaaS Classification is Critical in Financial Services

AI SaaS classification in financial services is closely tied to how responsibility, escalation paths, and operational ownership are defined as AI enters core workflows.

In banking and fintech, technology decisions shape decision-making processes that affect customers, transactions, and regulatory obligations. Classification criteria provide a structured way to evaluate AI SaaS products based on their role within the organization.

Well-defined product classification criteria help institutions:

- Understand how an AI SaaS product participates in regulated workflows

- Define governance and oversight responsibilities early

- Align testing strategies with operational exposure

- Support consistent decisions across technology and business teams

This structure transforms isolated evaluations into repeatable, auditable decisions, which are core to governance and ROI across AI solutions.

A Risk-driven Approach to AI SaaS Classification

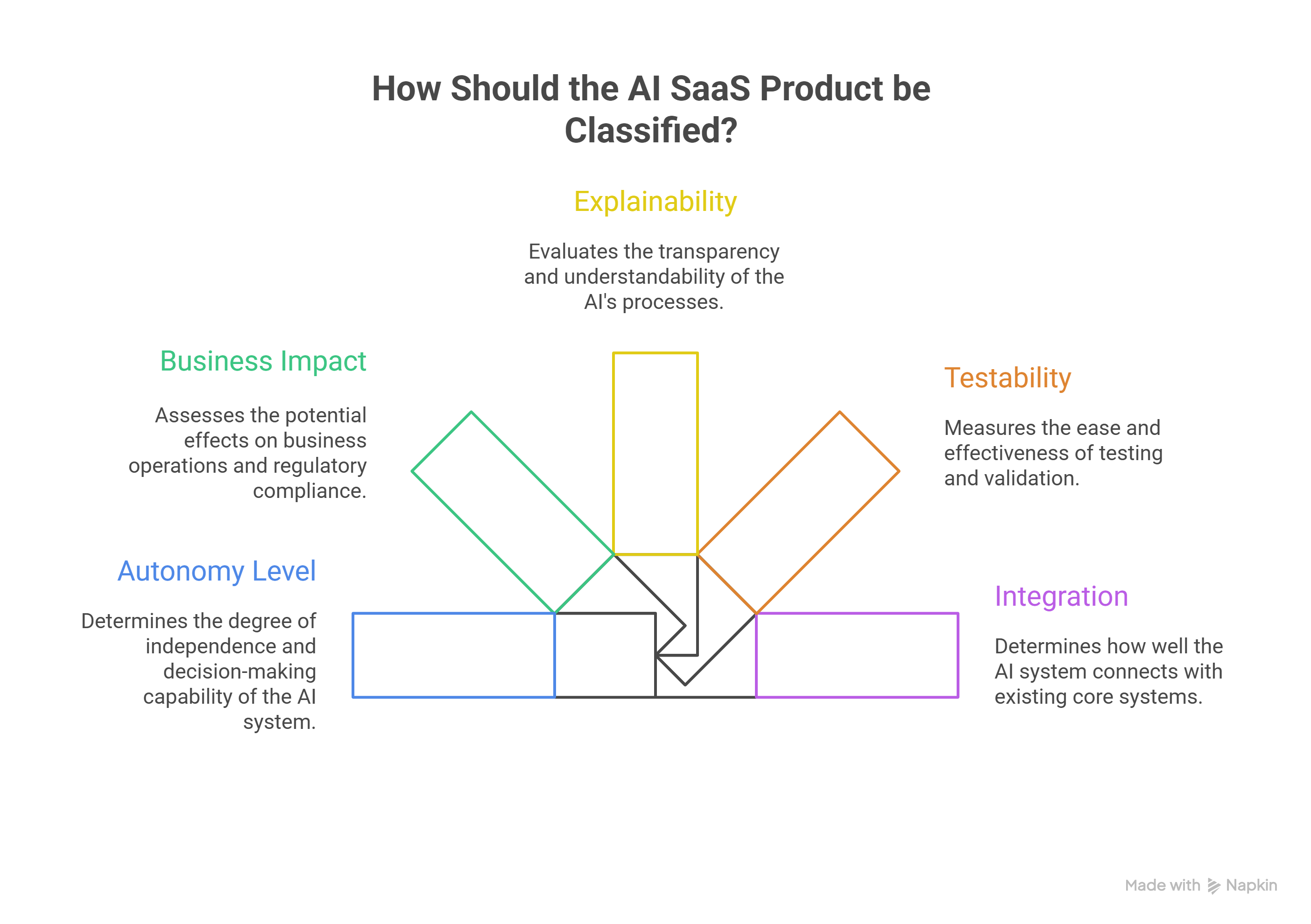

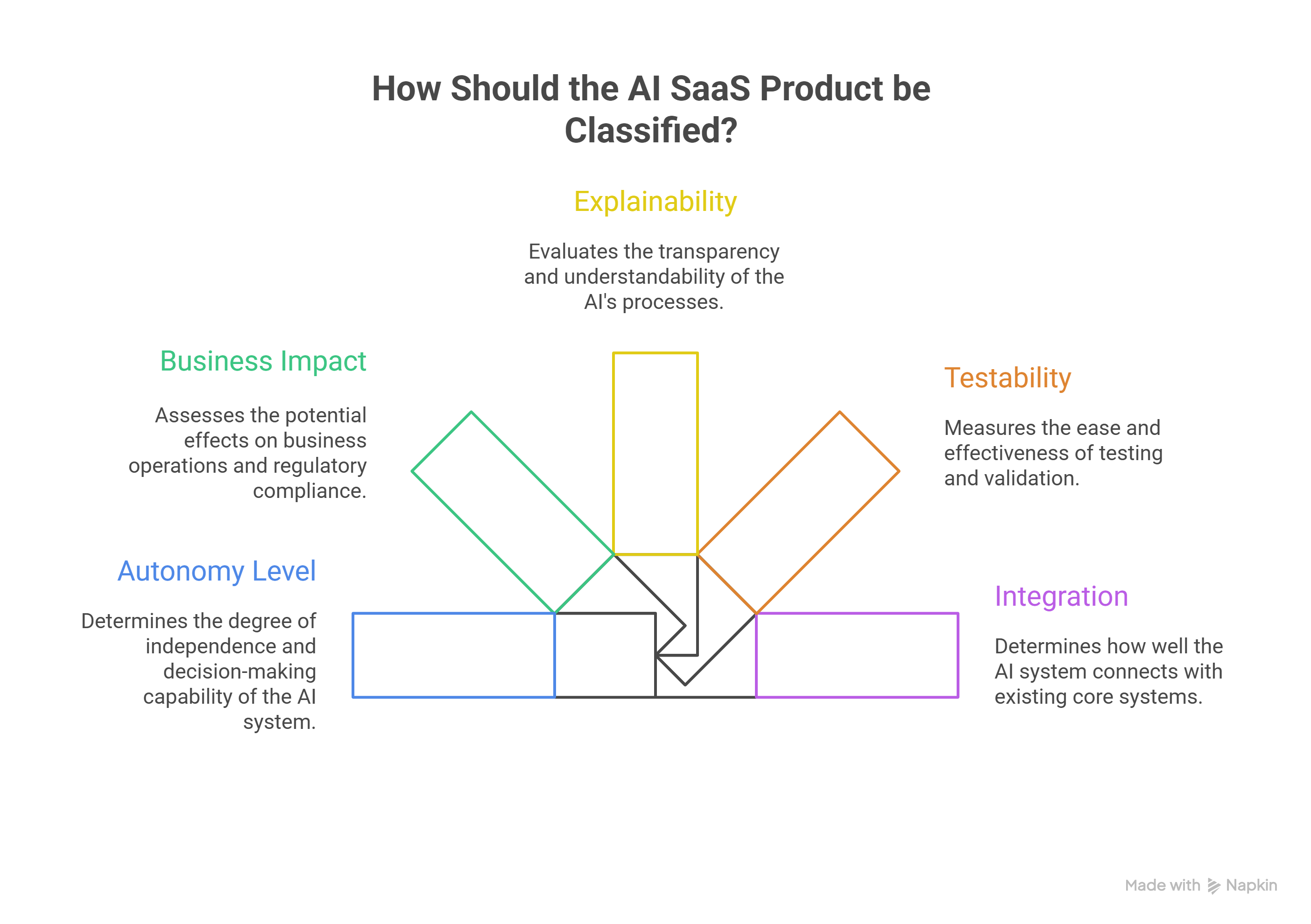

An effective AI SaaS classification approach focuses on how a product operates within real systems. This perspective considers autonomy, impact, explainability, validation capability, and integration depth rather than surface-level features.

The following key classification criteria describe how AI SaaS products behave once they become part of day-to-day financial operations.

Classification Criterion #1: Level of Autonomy

Autonomy defines how an AI SaaS product participates in operational flows and how responsibility is distributed.

- Assistive AI: Supports human activity through analysis or recommendations. Validation focuses on output quality and contextual relevance.

- Decision Support: Influences human decisions within defined workflows. Classification at this level drives requirements for traceability and review.

- Decision Execution: Performs actions inside operational processes. This level of autonomy directly shapes control mechanisms, escalation paths, and accountability models.

In financial systems, autonomy levels influence how accountability and escalation are designed across teams. This criterion anchors governance and testing strategy expectations from the outset.
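The three autonomy levels can be encoded so that every evaluation starts from the same baseline of controls. The following Python sketch assumes a cumulative model, where each level inherits the controls of the levels below it. The control names are hypothetical placeholders chosen for illustration, not a regulatory checklist.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Autonomy levels from Criterion #1, ordered from least to most autonomous."""
    ASSISTIVE = 1
    DECISION_SUPPORT = 2
    DECISION_EXECUTION = 3


# Hypothetical controls introduced at each level (illustrative names only).
CONTROLS_INTRODUCED = {
    Autonomy.ASSISTIVE: {"output_quality_review", "contextual_relevance_checks"},
    Autonomy.DECISION_SUPPORT: {"decision_traceability", "human_review_step"},
    Autonomy.DECISION_EXECUTION: {
        "escalation_path",
        "action_kill_switch",
        "named_accountable_owner",
    },
}


def required_controls(level: Autonomy) -> set[str]:
    """Return the cumulative controls for a level: its own plus every lower level's."""
    controls: set[str] = set()
    for lvl in Autonomy:  # iterates in definition order, lowest autonomy first
        if lvl <= level:
            controls |= CONTROLS_INTRODUCED[lvl]
    return controls
```

For example, `required_controls(Autonomy.DECISION_EXECUTION)` returns all seven placeholder controls, while an assistive product carries only the two output-quality checks. Making the mapping cumulative means that moving a product up one autonomy level can only add controls, never silently drop them.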

Classification Criterion #2: Business and Regulatory Impact Surface

The impact surface describes which parts of the financial system interact with the AI SaaS product.

Common areas include:

- Customer experience and service interactions

- Financial operations and transactions

- Regulatory requirements and supervisory expectations

- Handling of sensitive data and personal information

- Data privacy obligations across jurisdictions

Classifying by impact surface clarifies which stakeholders must be involved and how controls should be applied.

Classification Criterion #3: Explainability and Auditability

Explainability and auditability define how decisions can be reviewed and justified over time. In banking and fintech, alignment with compliance standards depends on the ability to reconstruct how outcomes were produced.

AI SaaS products suitable for regulated environments provide:

- Consistent records of inputs, outputs, and context

- Traceable decision paths

- Evidence accessible for audits and supervisory reviews

This capability supports long-term regulatory compliance and operational confidence.
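To illustrate what consistent records and traceable decision paths can look like in practice, here is a minimal Python sketch of an append-only decision log. Each entry stores the SHA-256 hash of the previous entry, so later edits to a recorded decision become detectable during an audit. The field names and the choice of hash chaining as a tamper-evidence mechanism are assumptions for illustration. A production system would also need durable storage, access control, and retention policies.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class DecisionLog:
    """Append-only log where each entry is chained to the previous entry's hash."""
    entries: list[dict] = field(default_factory=list)

    def record(self, model_version: str, inputs: dict, output: dict, context: dict) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,  # which model produced the output
            "inputs": inputs,
            "output": output,
            "context": context,  # e.g. workflow step, reviewer, policy version
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        body["hash"] = _digest(body)  # computed before the "hash" key exists
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute hashes and links. False means an entry was altered,
        reordered, or removed from the middle of the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            unhashed = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(unhashed):
                return False
            prev_hash = entry["hash"]
        return True
```

As a usage example with hypothetical values, `log.record("credit-model-v3", {"applicant_id": "A-123"}, {"recommendation": "manual_review"}, {"workflow_step": "pre-approval"})` appends one chained entry, and `log.verify()` can be run before evidence is exported for a supervisory review.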

Classification Criterion #4: Testability and Validation Capability

Testability determines how effectively an organization can validate and monitor an AI SaaS product throughout its lifecycle.

Key aspects include:

- Functional and non-functional testing design

- Behavior under edge and stress conditions

- Monitoring of production outcomes

- Detection of performance degradation or drift

- Controlled adjustment mechanisms

Strong validation capability allows institutions to manage business impact through evidence rather than assumptions.
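As one example of detecting performance degradation or drift, a widely used technique is the Population Stability Index (PSI), which compares the distribution of a model score or input feature in production against a baseline sample. The Python sketch below makes several assumptions: bins are fixed from the baseline's range, a small epsilon avoids taking the log of zero, and the 0.1 and 0.25 cut-offs are common rules of thumb rather than regulatory thresholds. Each institution would set its own.

```python
import math


def psi(baseline: list[float], production: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index: sum of (actual - expected) * ln(actual / expected)
    over equal-width bins derived from the baseline sample."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(production)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(score: float) -> str:
    """Map a PSI value to a status using assumed rule-of-thumb cut-offs."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "monitor"
    return "investigate"
```

Running `drift_status(psi(baseline_scores, last_week_scores))` on a schedule is one way to turn drift detection into a monitored signal. Here `baseline_scores` and `last_week_scores` are hypothetical names for whatever samples the institution retains.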

Classification Criterion #5: Integration with Core Systems

Integration depth influences both risk exposure and operational complexity. AI SaaS product classification distinguishes between:

- Peripheral API-based integrations

- Direct interaction with core platforms

- Context-dependent workflows spanning multiple systems

This criterion informs dependency mapping, incident containment strategies, and recovery planning.

Taken together, these criteria describe how AI SaaS moves from isolated capability to operational component within financial systems.

A Practical AI SaaS Classification Matrix

By combining autonomy, impact surface, explainability, testability, and integration depth, organizations can construct a practical matrix for AI SaaS product classification.

Such a matrix enables:

- Objective comparison of AI SaaS products

- Early definition of governance and validation scope

- Shared language across technical and executive teams

Important note: The matrix evolves as regulatory and operational contexts change.

This combined view becomes most valuable when it is shared across teams and applied consistently throughout the organization.
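A minimal Python sketch of such a matrix follows. It assumes each criterion is rated on a simple 1 to 3 scale. Autonomy, impact surface, and integration depth are treated as exposure, while explainability and testability are treated as capabilities that offset it. The scale, the example product names, and the tiering thresholds are all hypothetical, and each institution would calibrate and revise its own as contexts change.

```python
from dataclasses import dataclass

CRITERIA = ("autonomy", "impact_surface", "integration_depth", "explainability", "testability")


@dataclass(frozen=True)
class AISaaSProfile:
    """One row of a hypothetical classification matrix; each criterion is rated 1 to 3."""
    name: str
    autonomy: int           # 1 assistive, 2 decision support, 3 decision execution
    impact_surface: int     # breadth of customer, transactional, regulatory, data exposure
    integration_depth: int  # 1 peripheral API, 2 core platform, 3 multi-system workflow
    explainability: int     # 3 = complete records and traceable decision paths
    testability: int        # 3 = strong validation, monitoring, and drift detection

    def __post_init__(self) -> None:
        for attr in CRITERIA:
            if getattr(self, attr) not in (1, 2, 3):
                raise ValueError(f"{attr} must be 1, 2, or 3")

    def exposure(self) -> int:
        """How much of the financial system the product can affect (3 to 9)."""
        return self.autonomy + self.impact_surface + self.integration_depth

    def control_gap(self) -> int:
        """How far explainability and testability fall short of the maximum (0 to 4)."""
        return (3 - self.explainability) + (3 - self.testability)

    def tier(self) -> str:
        """Assumed tiering rule: high exposure or a large control gap escalates governance."""
        if self.exposure() >= 7 or self.control_gap() >= 3:
            return "enhanced governance"
        if self.exposure() >= 5 or self.control_gap() >= 1:
            return "standard governance"
        return "light governance"
```

Keeping exposure and control gap as separate numbers, rather than blending them into one score, preserves the reason a product lands in a given tier, which gives technical and executive teams the same basis for discussing the result.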

Evaluation Patterns in Financial Institutions

Financial institutions typically assess AI SaaS products through cross-functional collaboration. Engineering, QA, risk, and compliance teams apply classification criteria to align expectations and responsibilities. When this alignment occurs early, evaluations remain consistent from pilot stages through enterprise adoption.

These evaluation patterns become especially relevant when AI is applied to regulatory and compliance processes. In projects like our work with Akua, classification decisions around autonomy, integration depth, and validation scope shaped how AI accelerated compliance while maintaining regulatory control.

How Abstracta Approaches AI SaaS Evaluation in Banking and Fintech

At Abstracta, we design and build AI solutions for banking and fintech where classification decisions have direct operational consequences. Our experience comes from working with systems that cannot afford ambiguity once AI reaches production.

If you are exploring or scaling AI SaaS in regulated financial contexts, our solutions help bridge the gap between evaluation and execution, turning classification decisions into reliable, governed AI implementations.

Closing Thoughts

Clear AI SaaS product classification criteria support confident technology decisions in banking and fintech. A structured classification approach strengthens governance, improves validation practices, and aligns stakeholders around shared expectations.

Organizations exploring AI SaaS classification often begin with a structured conversation around criteria, impact, and control. Teams need to establish this shared framework early to clarify decisions before broader adoption.

FAQs about AI SaaS Product Classification Criteria

How Does a Classification System Help Choose The Right AI SaaS Product?

A classification system organizes product categories across multiple dimensions using key criteria that executives can review consistently. This structure supports selecting the right AI SaaS product with clear accountability and repeatable evaluation outcomes.

How Do Regulatory Compliance and Data Privacy Affect AI SaaS Selection?

Regulatory compliance and data privacy determine how AI SaaS products manage sensitive data and meet compliance standards. These factors influence deployment model choices and long-term governance viability.

What is The Difference Between Decision Support and Agentic AI?

Decision support systems influence human decisions, while agentic AI initiates actions within defined operational boundaries. This distinction shapes accountability, testing depth, and risk exposure.

Why Is Human Oversight Essential in AI SaaS Products?

Human oversight establishes supervision points for AI technologies involved in automated or semi-automated processes. It reinforces accountability wherever AI executes decisions or takes over repetitive tasks.

What Are AI Tools In Banking and Fintech AI SaaS?

AI tools in banking and fintech are applications that deliver AI capabilities through SaaS platforms operated by SaaS providers. These capabilities support governance, evaluation, and selection decisions across regulated financial workflows.

How Do Natural Language Processing and Predictive Analytics Fit AI SaaS?

Natural language processing and predictive analytics sit alongside machine learning, deep learning, generative AI, and edge AI as capabilities delivered through AI SaaS. These model choices shape validation scope, explainability expectations, and operational controls in regulated environments.

What Defines The AI SaaS Market for Decision Makers?

The AI SaaS market is shaped by trends and research across each target market, from healthcare AI SaaS to regulated finance. Decision makers compare vendors on risk, governance, and adoption readiness, not only on product ideas or feature lists.

How Do AI Ethics and AI Maturity Affect Governance?

AI ethics guides responsible use, while AI maturity describes governance capability across policy, monitoring, and change control. Human oversight operationalizes governance through defined review points, escalation paths, and documented decisions.

How Do Automating Tasks and Fraud Detection Change Operations?

AI SaaS can automate tasks and reduce repetitive work through controlled workflows, including content generation for customer-facing and internal operations. Fraud detection benefits from monitored AI outputs, traceable evidence, and clear human accountability for decisions.

How Should Teams Run a Step By Step Process for Adoption?

A step-by-step process starts from customer persona priorities, then maps pain points, business challenges, and success criteria. This sequence keeps evaluation practical, audit-ready, and aligned with real decision constraints in regulated banking and fintech.

About Abstracta

With nearly 2 decades of experience and a global presence, Abstracta is a technology company that helps organizations deliver high-quality software faster by combining AI-powered quality engineering with deep human expertise.

Our expertise spans industries. We believe that close collaboration propels us further and helps us enhance our clients’ software. That’s why we’ve built robust partnerships with industry leaders such as Microsoft, Datadog, Tricentis, Perforce BlazeMeter, Saucelabs, and PractiTest to provide the latest in cutting-edge technology.

Embrace agility and cost-effectiveness through Abstracta quality solutions.

Contact us to discuss how we can help you grow your business.

Follow us on LinkedIn & X to be part of our community!


Sofía Palamarchuk, Co-CEO at Abstracta
