Testing Generative AI applications requires new strategies—ones that address variability, scale, and enterprise compliance. In this article, we highlight critical aspects of GenAI testing and how Abstracta helps enterprises adopt it with confidence.

With the widespread use of advanced technologies like GPT and other Large Language Models (LLMs), the methods we employ to enhance quality in software development and testing must evolve to match the complexity and adaptability of these systems.

Unlike traditional deterministic applications, AI-driven models introduce inherent variability, requiring novel testing strategies to enable reliability, accuracy, and security.

Applications integrating GPT and other LLMs are transforming industries such as customer service, medicine, education, finance, content creation, and beyond. These systems operate through natural language processing, image generation, and multimodal capabilities, making them highly versatile but also introducing significant challenges in evaluation and control.

Such advancements bring unique challenges, especially in software quality, highlighting the need for generative AI testing strategies that go beyond conventional validation techniques. Boosting software robustness requires dynamic and adaptive software development approaches that can assess AI behavior under diverse and unpredictable conditions.

The ability to integrate these models via APIs opens up novel possibilities in product development, but it also raises critical questions regarding reproducibility, fairness, and security. This introduces additional layers of complexity in test automation, as it requires handling non-deterministic responses and optimizing token consumption while maintaining meaningful evaluation criteria.

Design enterprise-ready GenAI testing strategies with Abstracta’s experts.

Check our case studies to see proven impact in action

Hyperparameters

Hyperparameters are external configurations of a machine-learning model. They are set before training and are not learned by the model itself from the data.

Unlike model parameters, which are learned from the data during training, the development team is the one who determines the hyperparameters. They are crucial for controlling the behavior and performance of the algorithm.

To illustrate, consider a video game in development: hyperparameters would be like the difficulty settings configured before starting a test session. These settings, such as the power of enemy characters or how often they appear, don’t change during the game. Yet, they can greatly impact how the game feels and its difficulty level.

In Generative Artificial Intelligence, the right choice of hyperparameters is key to optimizing the model’s accuracy and effectiveness. Common examples include the learning rate, the number of layers in a neural network, and the batch size.

Temperature and Seed in Generative AI Models

Although these terms don’t fit the classic definition of hyperparameters (like learning rate or batch size used in machine learning model training), they serve a similar function in generative model practice and are fundamental for testing with Generative Artificial Intelligence support.

Their importance in generative model settings and their impact on outcomes make them akin to hyperparameters in practical terms. Therefore, in some contexts, especially in describing generative models, they are referred to as hyperparameters, albeit a special type of configuration.

They affect how the model behaves (in terms of randomness or reproducibility) but are not learned by the model from data. They are configured before using the model for generation.

Temperature

When using the API, it’s important to consider the temperature we choose, which determines the balance between precision and creativity in the model’s responses. It can vary from 0 to 2: the lower the temperature we select, the more precise and deterministic the response. The higher it is, the more creative or random.

Seed

This is an initial value used in algorithms to enable the reproducibility of random results. Using a specific seed, AI can help foster conditions where, under the same inputs, the model generates consistent responses.

This is crucial for testing, as it increases the reproducibility of results and allows for more precise and reliable evaluation of model behavior in different scenarios and after changes in code or data. Reproducibility plays a key role in testing environments. Using consistent test data helps improve the quality and efficiency of applications based on Generative Artificial Intelligence.

Tokens

In language or generative models, tokens are basic text-processing units, like words or parts of them. Each token can be a word, part of a word, or a symbol.

The process of breaking text into tokens is called tokenization, and it’s crucial for the model to understand and generate language. Essentially, tokens are building blocks the model uses to interpret and produce language. If you’re interested in learning more about the tokenization process, we recommend visiting OpenAI’s tokenizer.

It’s important to note that models have a limit on the number of tokens they can process in a single step. Upon reaching this limit, they may start to “forget” previous information to incorporate new tokens, which is a crucial aspect of managing the model’s memory.

Max Tokens Parameter and Cost

Token limit and cost: Not only does the number of tokens processed affect the model’s performance, but it also has direct implications in terms of cost. In cloud-based services, token usage is a key factor in billing: charges are based on the number of tokens processed. This means that processing more tokens increases the service cost.

Max_tokens parameter: Using the max_tokens parameter is essential in API configuration. It acts as a controller that determines when to cut off the model’s response to avoid consuming more tokens than necessary or desired.

In practice, it sets an upper limit on the number of tokens the model will generate in response to a given input and allows managing the balance between the completeness of the response and the associated cost.

Although tokens are not hyperparameters, they are an integral part of the model’s design and operation. The number of tokens processed and the setting of the max_tokens parameter are critical both for performance and for cost management in applications based on Generative Artificial Intelligence.

For all these reasons, at Abstracta, we emphasize the need to find a proper balance between cost and outcome when providing the number of tokens the API can use.

Applicable Testing Approaches in Generative Artificial Intelligence

Testing applications using Generative Artificial Intelligence, as well as testing generative AI systems like LLMs, presents unique challenges that demand innovative testing techniques. In fact, what they require is a mix of traditional and AI-specific approaches.

Below, we explore different methods to evaluate these systems effectively. To address these, let’s review the most well-known categorization in the context of testing generative AI apps: black-box and white-box methods

Black-Box

Black-box methods focus on the system’s inputs and outputs, regardless of the underlying code. The key here is understanding the domain, the system, and how people interact with it. Internal implementation comprehension is not necessary.

The major limitation is the lack of insight into what happens inside the system, i.e., its implementation. This can lead to less test coverage and redundant cases. We cannot control internal system aspects. Moreover, testing can only begin once the system is somewhat complete.

Recommended read: Overcome Black-Box AI Challenges.

White-Box

In contrast to black-box methods, the white-box approach requires a deep understanding of the system’s interior.

We can analyze how parameters and prompts are configured and used, and even how the system processes responses. This is feasible because we can look inside the box and leverage available information to design tests.

White-box approaches enable more detailed analysis, help avoid redundant test execution, and support efforts to create tests with broader coverage.

Still, there are drawbacks: programming knowledge and API handling are necessary. This might lead to bias, precisely due to knowledge of the construction. Such bias could limit our creativity in generating tests and risk losing focus on the real experiences and challenges of people using the systems.

If you’re interested in diving deeper into testing concepts, we recommend you read Federico Toledo’s book ‘Introduction to Information Systems Testing.’ You can download it for free!

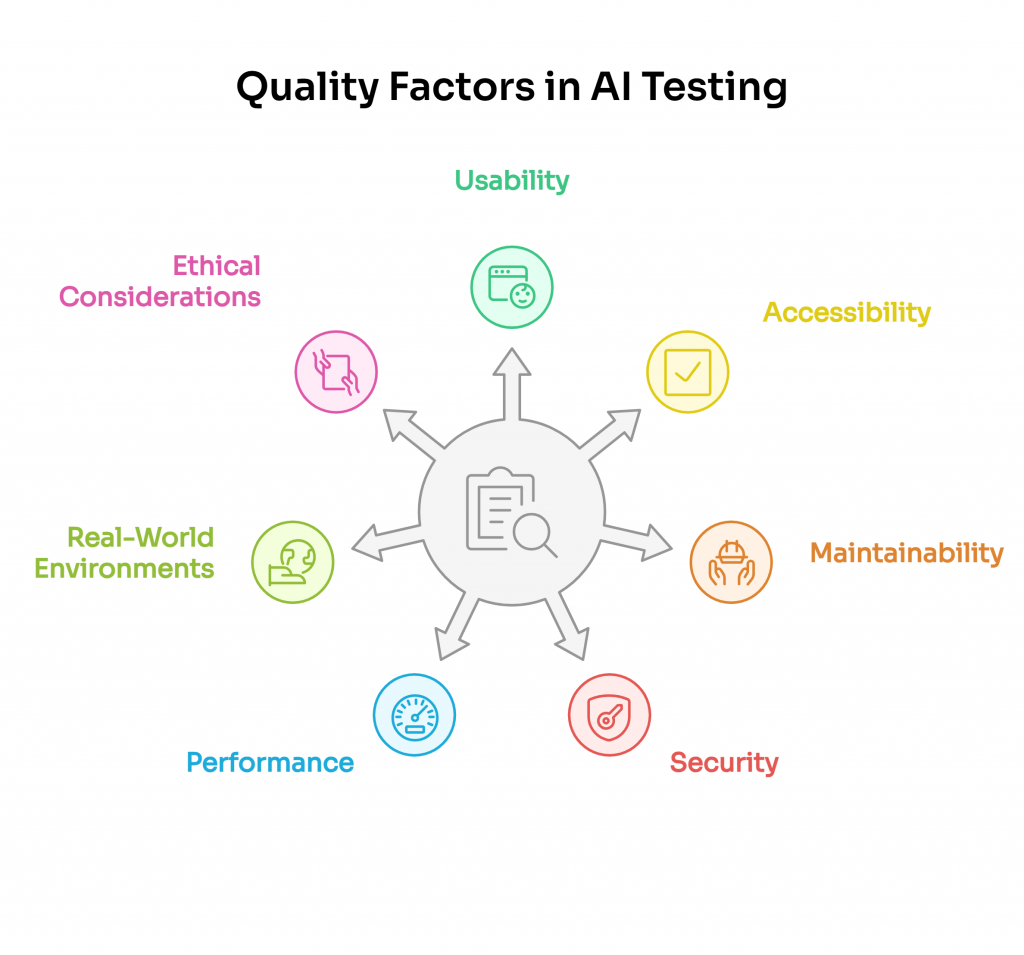

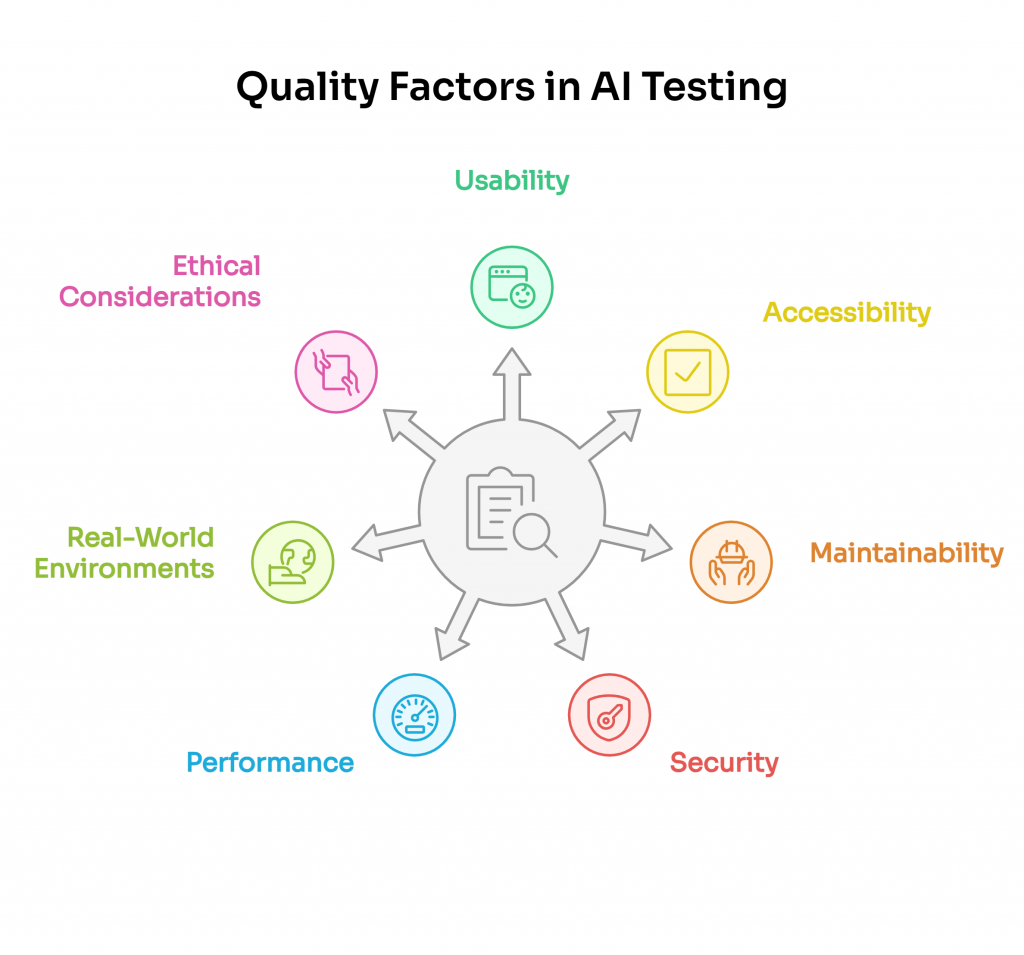

Quality Factor-Based Approach

Another high-level approach involves asking questions guided by different software quality factors. These include reliability, usability, accessibility, security, real-world environments, and ethical considerations. In each of these aspects, it’s important to consider both general and specific questions for systems based on Generative Artificial Intelligence.

Usability and Accessibility

As applications increasingly have conversational interfaces, we face the challenge of making them inclusive and adaptable. It’s crucial to validate whether they are intuitive and useful for all people.

They should comprehend different voices under various conditions and provide understandable responses in different contexts and needs. Here, accessibility goes beyond mere compatibility with assistive tools. It seeks effective and accessible communication.

Maintainability

In the maintainability aspect, we aim to understand how easy it is to update and change the system. How can we automate tests for a system with non-deterministic results (that is, not always yielding the same results)? Are regression tests efficiently managed to prevent negative impacts on existing functionalities? Are prompts managed like code, easy to understand, well-modularized, and versioned?

Security

Within the security framework, GAI introduced a new type of vulnerability known as ‘Prompt Injection.’ Like SQL Injection, an attacker could alter the system’s expected behavior through unauthorized data manipulation, injecting malicious instructions, or extracting unintended information.

They do this by maliciously manipulating inputs, knowing that if not carefully processed and concatenated to a preset prompt, they could exploit this vulnerability. In testing, we have to consider these types of attacks. We review how the system handles unexpected or malicious inputs that could alter its functioning.

One important aspect of security evaluation is adversarial testing, where AI models are exposed to intentionally crafted inputs designed to exploit vulnerabilities. This approach helps identify and mitigate generative AI risk, fostering robust and secure applications. Another critical threat vector is when the model generates code or scripts that could be used to execute malicious code, particularly in coding assistants or autonomous agents.

How does the system respond to inputs designed to provoke errors or unwanted responses? It may return harmful, misleading, or nonsensical outputs. Or, in some cases, it may reveal system prompts or sensitive data. Testing these scenarios helps us detect such behaviors early and implement safeguards to prevent them in real-world use.

This evaluation is essential to check if applications based on Generative Artificial Intelligence are safe and resistant to manipulation attempts, which calls for thorough testing.

We invite you to read this article! AI for Business Leaders: Strategic Adoption for Real-World Impact

Performance

Here, we focus on how the system handles load and efficiency under different conditions, applying principles of performance testing. In the context of GAI, many questions arise.

How does our system perform when OpenAI’s service, used via the API, experiences slowness or high demand? This includes evaluating the system’s ability to handle a high number of requests per second and how this affects the quality and speed of responses.

Moreover, we need to consider efficient computational resource management, especially regarding token usage and its impact on performance and operational costs. Analyzing performance under these circumstances is crucial to check whether applications based on GAI are not only functional and safe but also scalable and efficient in a real-use environment.

Real World Environments

Traditional testing setups often simulate ideal conditions, but Generative Artificial Intelligence systems must also be validated in real-world environments, where factors like noisy inputs, fluctuating internet speeds, incomplete user prompts, and diverse user behaviors impact the system’s reliability.

Testing in these environments helps uncover issues that remain hidden in lab conditions, providing a more accurate picture of how the system behaves under real-world conditions. However, doing so also introduces specific risks, especially when the system under test is capable of generating dynamic content or interacting with real users or external APIs.

Uncontrolled environments can lead to unexpected outputs, trigger unwanted actions, or expose sensitive information if not properly sandboxed. This makes data security a critical concern, as systems may inadvertently access or reveal protected content during testing.

That’s why testing in real-world scenarios must be planned carefully, using isolated environments, realistic but anonymized data, and strict monitoring. The goal is to simulate production-level conditions without compromising privacy, performance, or system integrity.

Ethical Considerations

Beyond technical robustness, testing Generative AI also demands attention to ethical considerations. These include identifying biased outputs, preventing misinformation, and evaluating how systems handle sensitive topics such as gender, accessibility, religion, ageism, or politics.

Moreover, quality engineers must assess how AI behaves when prompted in ethically complex scenarios, where the correct response may depend on context, cultural norms, or subjective human judgment.

Responsible testing includes proactively identifying these risks and focusing on mitigating ethical issues before they can affect real users. This requires not only diverse testing teams and comprehensive scenario coverage, but also a clear framework to define acceptable outputs and flag potentially harmful behaviors.

Generative AI: Why We Recommend Using Evals

At Abstracta, we recognize the importance of responsible and efficient use of LLMs and the tools we build based on them. To keep pace with development, we face the challenge of automatically testing non-deterministic systems. The variability in expected outcomes complicates the creation of effective tests.

Faced with this challenge, we recommend adopting OpenAI’s framework known as ‘Evals’ for regression testing. It works through different mechanisms. To address the non-determinism of responses, it allows the use of an LLM as a ‘judge’ or ‘oracle.’ This method, integrated into our regression tests, lets us evaluate the results against an adaptable and advanced criterion. It aims to achieve more effective and precise tests.

What are OpenAI Evals?

OpenAI Evals is a framework specifically developed for evaluating LLMs or generative AI tools that leverage these models. This framework is valuable for several reasons:

- Open-Source Test Registry: It includes a collection of challenging and rigorous test cases, in an open-source repository. This facilitates access and use.

- Ease of Creation: Evals are simple to create and do not require writing complex code. This makes them accessible to a wider variety of people and contexts.

- Basic and Advanced Templates: OpenAI provides both basic and advanced templates. These can be used and customized according to each project’s needs.

Incorporating Evals into our testing process strengthens our ability to validate if LLMs and related tools function as intended, while improving overall test quality. Additionally, organizations are increasingly adopting generative AI testing tools to enhance automation and efficiency in generative AI-based testing.

If you’re interested in this topic, we recommend reading the article ‘Decoding OpenAI Evals.’ It offers a detailed view of this framework and its application in different contexts.

Challenges and Solutions in Testing Generative AI Applications

One of the most challenging aspects of testing AI in the context of Generative Artificial Intelligence is creating effective test sets that account for variability, subjectivity, and contextual dependencies in model outputs. This area, filled with complexities and nuances, requires a combination of creativity, deep technical understanding, and a meticulous approach.

Key Challenges in Testing Generative AI

- Complexity and Variability of Responses: Generative Artificial Intelligence-based systems, like LLMs, can produce a wide range of different responses to the same input. This variability makes it difficult to predict and verify the correct or expected responses, presenting challenges that traditional testing methods are not designed to handle and complicating the creation of a test set that adequately covers all possible scenarios.

- Need for Creativity and Technical Understanding: Designing tests for Generative AI requires not only a deep technical understanding of how these models work but also a significant dose of creativity, as in exploratory testing, where testers dynamically analyze system behavior. This is to anticipate and model the various contexts and uses that users might apply to the application.

- Context Management and Continuity in Conversations: In applications like chatbots, managing context and maintaining conversation continuity are critical. This means that tests must be capable of simulating realistic and prolonged interactions to assess the system’s response coherence and relevance.

- Evaluation of Subjective Responses: Often, the ‘correctness’ of generative AI outputs can be subjective or context-dependent. This is especially true when evaluating whether the model produces or tolerates harmful content such as hate speech, which requires not only technical evaluation but ethical judgment as well. So this requires a more nuanced evaluation approach than traditional software testing.

- Bias and Security Management: Generative AI models can reflect and amplify biases present in the training data. Identifying and mitigating these biases in tests is crucial to enable the system’s fairness and safety. Additionally, it is necessary to evaluate scenarios that could lead to data leakage, where sensitive information from training datasets might be unintentionally exposed in generated outputs.

- Rapid Evolution of Technology: Understanding what generative AI means is crucial, since this technology is constantly evolving and redefining how we test. As a result, test sets must be continually updated to remain relevant and effective. To dive deeper into its foundations and applications, we recommend the article “AI for Dummies, a Powerful Guide for All.”

- Integration and Scalability: In many cases, Generative AI is integrated with other systems and technologies. Effectively testing these integrations, especially on a large scale, can be complex.

- Observability: Given the complexity of systems, and as most approaches are black-box due to context, data, or code access restrictions, observability solutions play a key role in our effectiveness in testing.

While this presents a major challenge, it also presents a singular opportunity to venture into uncharted territories in software quality.

Our Solution

At Abstracta, we embrace this challenge with Abstracta Copilot, our AI powered testing tool and solution designed to enhance manual testing and observability in complex systems.

By leveraging Generative AI, Abstracta Copilot helps teams streamline test case creation, identify edge cases, and analyze large volumes of test results with greater efficiency.

Thanks to the ability to understand and make visible through observability platforms what happens inside the system, we can trace each step of internal processes, correlating inputs, behaviors, and outputs. This enables us to design more precise and effective tests, improving software reliability in AI-driven applications.

Ready to boost your productivity by 30%? Revolutionize Your Testing with OUR AI-powered assistant Abstracta Copilot! Request a Demo here.

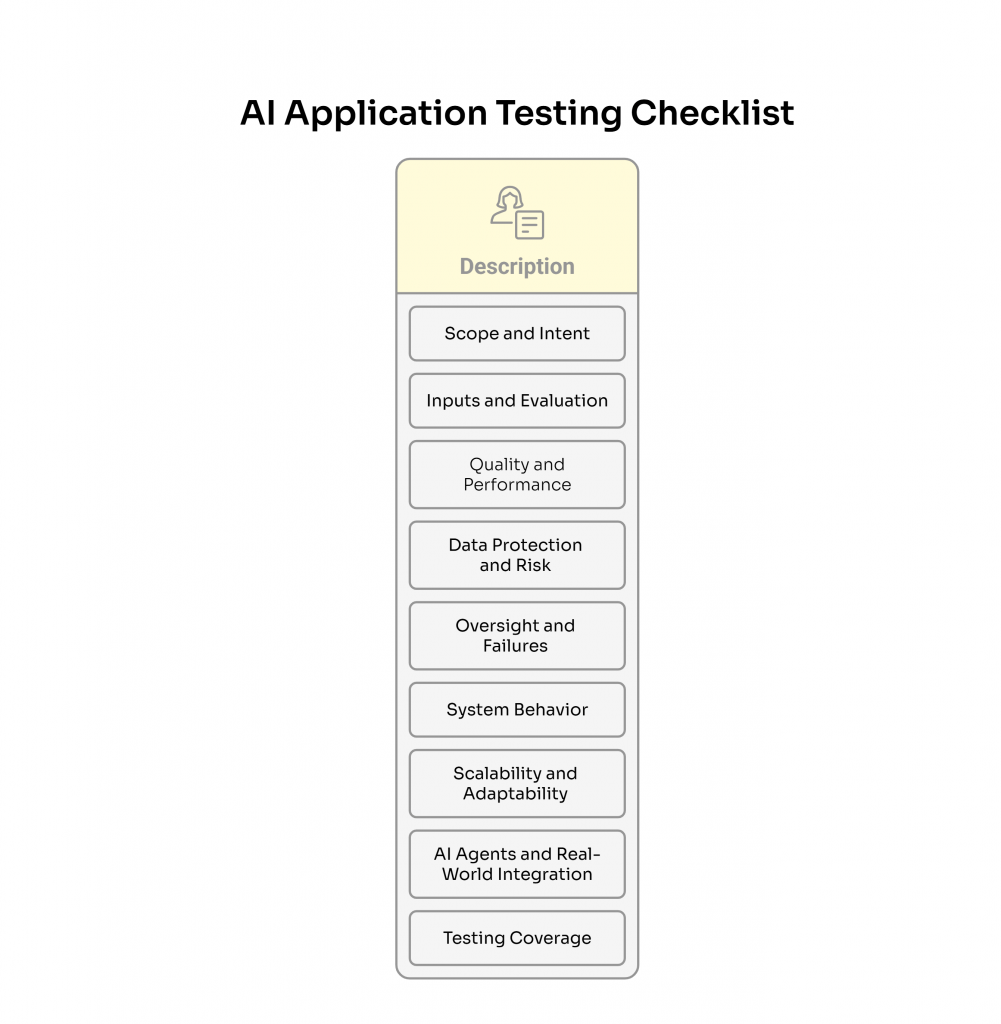

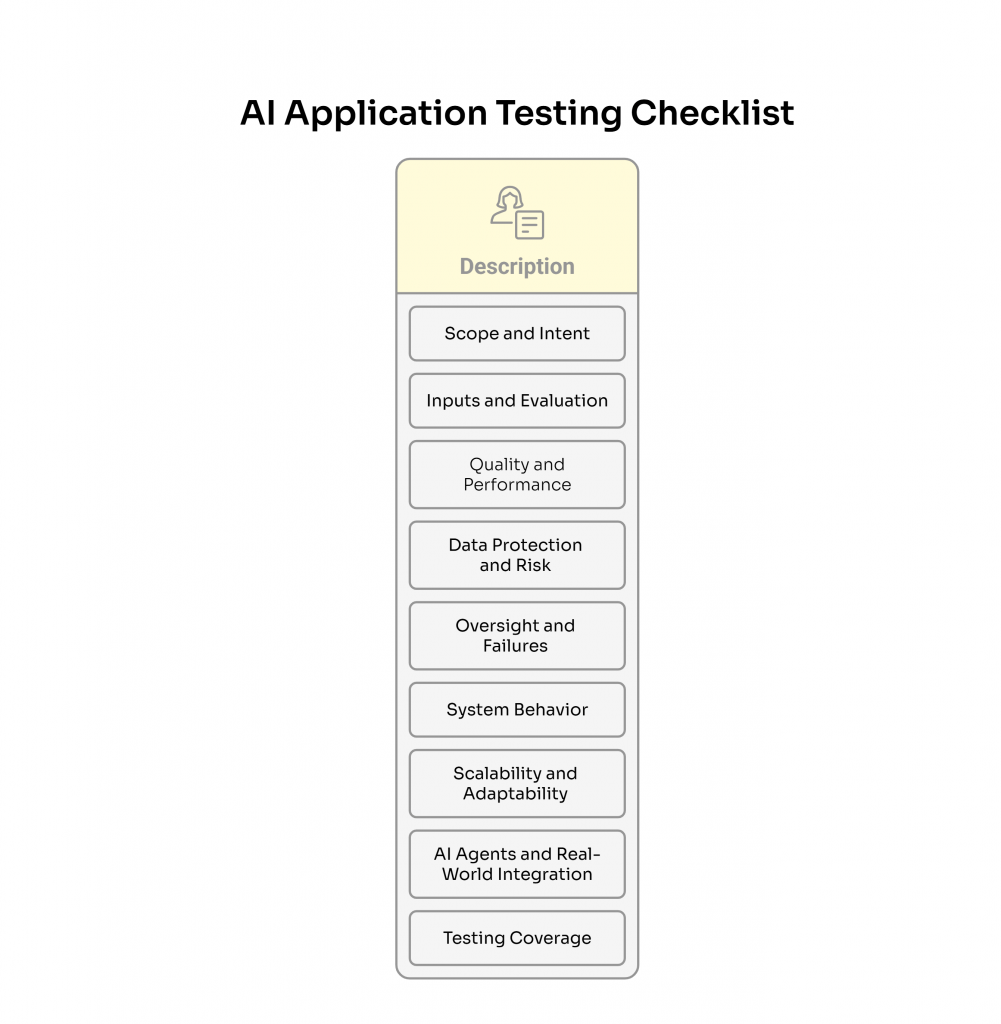

Key Considerations for Testing AI Applications at Scale

Testing AI applications involves much more than checking if an output is right or wrong. It requires a strategic view that aligns with quality goals, data sensitivity, performance expectations, and scalability. As these systems become deeply integrated into products and services, it’s crucial to cover the most critical areas.

AI Testing Checklist

Use this list to assess whether your testing strategy addresses the essential aspects of building reliable, secure, and responsible AI systems.

1. Scope and Intent

- Have you validated the system’s intended capabilities?

- Are the specific tasks the AI must perform clearly defined?

- Has testing been integrated early in the design process?

2. Inputs and Evaluation

- Are you using diverse prompts and structured data?

- Have you tested across realistic real-world scenarios?

- Are you accounting for a wide range of responses?

- Do you continuously monitor and log the AI’s responses?

3. Quality and Performance

- Are you running automated benchmarking tests?

- Are you establishing metrics and defining benchmarks?

- Do your quality metrics reflect accuracy, context, and stability?

- Have you verified the system’s ability to perform under different loads?

- Are you using external tools to monitor performance?

4. Data Protection and Risk

- Have you prevented data leaks and exposure of sensitive data?

- Have you tested for risks such as:

- Divulging personal user information

- Revealing financial records

- Extracting sensitive data

- Leaking customer-sensitive data

- Is the system restricted from accessing prohibited sites or downloading large files?

- Are integrations with external systems secure?

5. Oversight and Failures

- Are human testers involved in key evaluations?

- Are you identifying and analyzing test failures?

- Have you implemented effective AI safeguards?

6. System Behavior and Inputs

- Are you evaluating the model’s behavior when faced with incomplete or ambiguous prompts?

- Are you testing edge cases where the model may hallucinate, bypass rules, or respond inconsistently across contexts?

- How do seed and temperature parameters affect your ability to reproduce bugs?

7. Scalability and Adaptability

- Is your testing approach dynamic enough to adapt to rapid changes in model behavior after updates?

- Are you monitoring token usage and its cost/performance tradeoffs?

8. AI Agents and Real-World Integration

- Have you tested how agents or specialized copilots interact with external APIs and systems in real-world flows?

- Can you trace, through observability tools, the full journey of each input, from user prompt to system response?

9. Testing Coverage

- Are you applying different types of testing (functional, performance, accessibility, security, observability) according to the role of the AI system?

Testing practice involves learning, anticipating, and evolving with the technology to deliver higher-quality software. At Abstracta, we help teams transform these insights into concrete, scalable testing strategies.

Why Choose Abstracta for AI Transformation Services?

With nearly 2 decades of experience and a global presence, Abstracta is a leading technology solutions company with offices in the United States, Chile, Colombia, and Uruguay. We specialize in AI-driven solutions, and end-to-end software testing services.

Our expertise spans across industries. We believe that actively bonding ties propels us further. That’s why we’ve forged robust partnerships with industry leaders like Microsoft, Datadog, Tricentis, Perforce BlazeMeter, Saucelabs, and PractiTest, empowering us to incorporate cutting-edge technologies.

Gen AI has huge potential, but integrating generative AI and defining where & how to do it requires several considerations and expertise.

We’ve deployed successful Generative AI solutions for real use cases, and we would love to support you in this journey.

Find the Best Way to Implement GenAl

Easily Add Your Own AI Assistant

We’ve developed an open-source tool that helps teams build their own web-based assistants, integrating private data over any existing web application just by using a browser extension.

This enables your users to perform different cognitive tasks with either voice commands, writing messages, or sending images without touching your own app’s code! Check it out at Github.

Empower Your Team with AI Agents

Worried about your data? Or about how to tackle the challenges and changes that AI will bring to your organization? Unsure how to onboard your leaders into digital transformation with AI? We’ve got you covered.

We create tailor-made AI agents that adapt to your organization’s specific tools, workflows, and industry needs—and we accompany your teams throughout their adoption.

Take a closer look at our AI Agent Development Services.

Craft Custom Solutions with the Support of an Experienced Partner

We’ve created AI-enabled tools and copilots for different technologies. These solutions aim to boost productivity and overcome challenges such as recruitment screening processes, supporting the team’s career path, and helping business experts with AI-based copilots, among others.

Turn GenAI into enterprise-ready systems.

Schedule a strategy call and accelerate quality results with Abstracta

Follow us on Linkedin & X to be part of our community!

Recommended for You

Disaster Recovery Software Testing Plan: 7 Key Steps

Tags In

Natalie Rodgers, Content Manager at Abstracta

Related Posts

Interfaces for All: Artificial Intelligence and Accessibility Testing

Learn how AI is revolutionizing accessibility testing with smarter analysis, realistic user simulations, and automated fixes. Explore key AI-powered tools for inclusive design.

Artificial Intelligence Business Ideas: Bring your Projects to Life

Discover how to bring your Artificial Intelligence Business ideas to life using Generative AI as a core of your solutions. Join us to learn all about where to gather information, how to create a custom GPT, develop and evolve rapid prototypes, and leverage Microsoft AI…

Search

Contents

Categories

- Acceptance testing

- Accessibility Testing

- AI

- API Testing

- Development

- DevOps

- Fintech

- Functional Software Testing

- Healthtech

- Mobile Testing

- Observability Testing

- Partners

- Performance Testing

- Press

- Security Testing

- Software Quality

- Software Testing

- Test Automation

- Testing Strategy

- Testing Tools

- Work Culture