Unlock API bottlenecks with testing approaches tailored to endurance, load, and traffic spikes. Strengthen API performance testing—with Abstracta’s expertise.

Behind every seamless digital experience lies a high-performing API. Whether you’re powering a checkout flow, a healthcare dashboard, or a streaming platform, performance issues at the API layer can ripple across your entire system—affecting speed, reliability, and user trust.

At Abstracta, we help organizations unlock the full potential of their APIs by revealing hidden bottlenecks and fine-tuning performance where it matters most.

In this guide, we explore the types of API performance tests, the key challenges and our suggested solutions, and how to turn performance metrics into meaningful decisions.

Looking to challenge your assumptions about your system’s breaking point? Explore our performance testing services and contact us!

What Is API Performance Testing and Why It Matters

As a focused approach within performance testing, API performance testing evaluates how well your APIs perform under different conditions—load, stress, or spike. It provides the data needed to identify bottlenecks, fine-tune infrastructure, and improve user experience.

As APIs act as bridges between platforms, tools, and users, their performance directly impacts business-critical applications. The better we understand how an API behaves under pressure, the better we can prepare systems for growth, change, and unexpected surges in user activity.

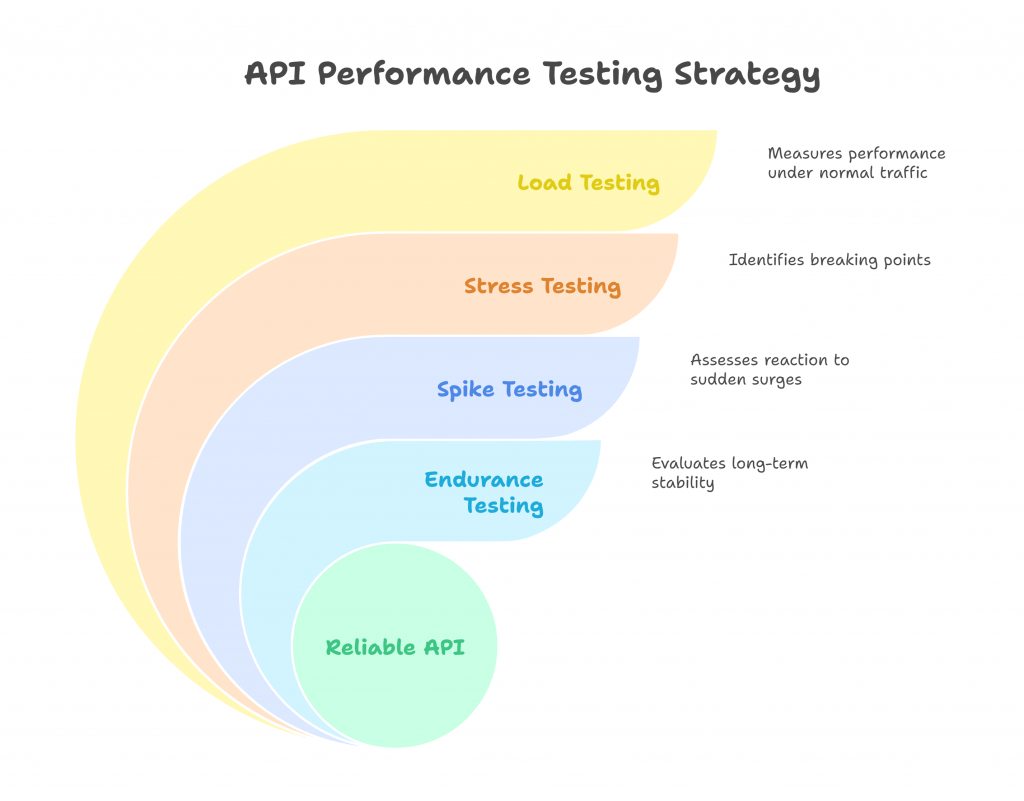

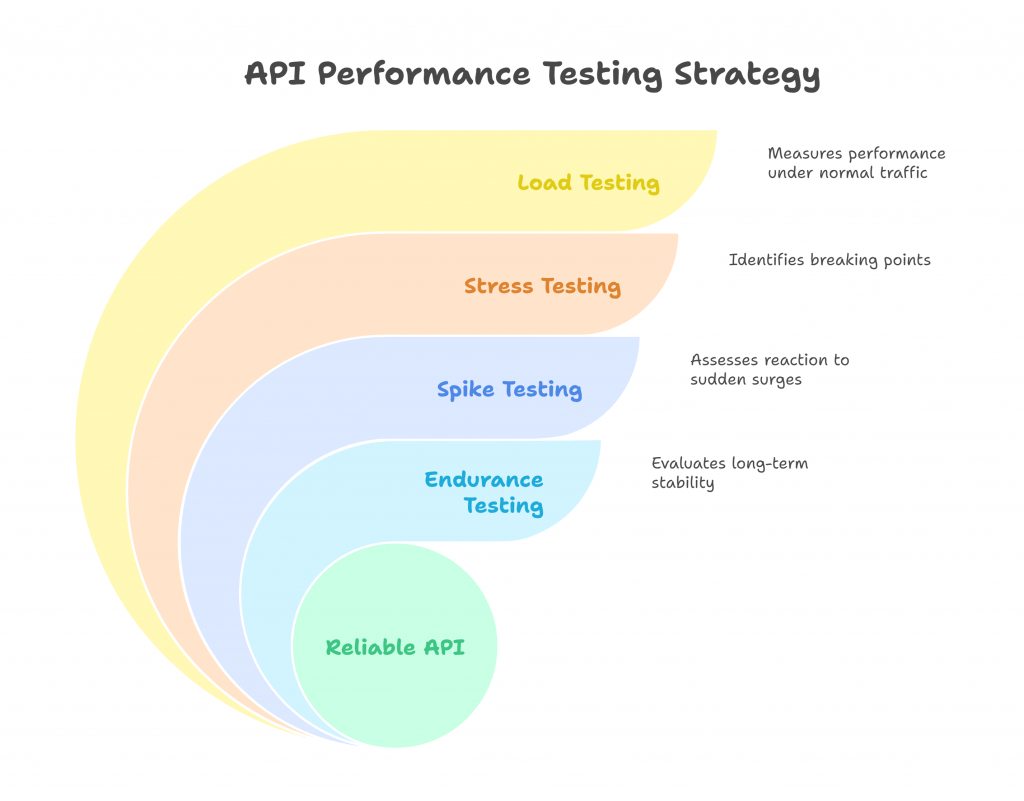

Types of API Performance Testing

To accurately evaluate how an API will perform in production, it’s essential to run different types of performance tests. Each one offers specific insights that help teams anticipate issues, fine-tune scalability, and optimize for user experience.

Let’s break them down:

Load Testing

Purpose: To see how your API performs under normal traffic levels.

What it’s about:

Load testing is like putting your API through its daily routine. It simulates the amount of traffic you expect in production to check how well it holds up. You’ll look at metrics like response time, error rates, and throughput to make sure everything runs smoothly under typical conditions.

When to use it:

You’ll want to run load tests throughout development and before launching to confirm your API can handle the workload it was designed for.

Example:

If your e-commerce API handles 1,000 requests per minute on average, a load test will show how well it performs under this regular traffic.
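To make the three metrics concrete, here is a minimal sketch of how raw load-test samples could be reduced to response time, error rate, and throughput. The `Sample` class, the `summarize` helper, and the sample data are hypothetical names and values chosen for illustration; a real run would take its samples from your load-testing tool’s results file.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One recorded request: latency in milliseconds and whether it succeeded."""
    latency_ms: float
    ok: bool


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples, duration_s):
    """Reduce raw samples to the headline load-test metrics."""
    latencies = [s.latency_ms for s in samples]
    errors = sum(1 for s in samples if not s.ok)
    return {
        "avg_ms": sum(latencies) / len(latencies),
        "p95_ms": percentile(latencies, 95),
        "error_rate": errors / len(samples),
        "throughput_rps": len(samples) / duration_s,
    }


if __name__ == "__main__":
    # Made-up data: 1,000 requests collected over one minute.
    samples = [Sample(latency_ms=100 + (i % 50), ok=(i % 200 != 0)) for i in range(1000)]
    print(summarize(samples, duration_s=60))
```

Reporting a percentile next to the average is a deliberate choice here: a handful of slow requests can hide behind a healthy-looking mean.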

Stress Testing

Purpose: To push your API to its limits and find its breaking point.

What it’s about:

With stress testing, you intentionally overload your API to see how much it can take before it starts failing. This helps you understand its limits and how it behaves when things get intense. It’s also a great way to check how quickly the system recovers after a crash.

When to use it:

Stress testing is key when you’re preparing for high-demand periods or unpredictable spikes, like a holiday sale or a viral marketing campaign.

Example:

A banking API might face a surge during Black Friday. Stress testing helps you understand how it will handle the overload and whether it can recover gracefully after hitting its limit.
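One common way to look for a breaking point is a stepped ramp: raise the load in fixed increments and stop at the first step where errors exceed what you consider acceptable. The sketch below assumes a `measure_error_rate` callback that runs one step and returns its error rate; `simulated_api` is a stand-in we invented so the example runs without a real system, and the 5% limit is an arbitrary illustration.

```python
def find_breaking_point(measure_error_rate, start_rps, step_rps, max_rps, max_error_rate=0.05):
    """Step the load upward; report the first rate whose error rate exceeds the limit."""
    last_healthy = None
    rps = start_rps
    while rps <= max_rps:
        if measure_error_rate(rps) > max_error_rate:
            return {"breaking_rps": rps, "last_healthy_rps": last_healthy}
        last_healthy = rps
        rps += step_rps
    # The limit was never exceeded within the tested range.
    return {"breaking_rps": None, "last_healthy_rps": last_healthy}


def simulated_api(rps, capacity_rps=450):
    """Stand-in for a real test step: every request beyond capacity fails."""
    if rps <= capacity_rps:
        return 0.0
    return (rps - capacity_rps) / rps


if __name__ == "__main__":
    print(find_breaking_point(simulated_api, start_rps=100, step_rps=100, max_rps=1000))
```

In practice the callback would trigger a step in your load tool and read back the error rate, and smaller steps narrow down where the limit actually sits.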

Spike Testing

Purpose: To see how your API reacts to sudden traffic surges.

What it’s about:

Spike testing creates short, sharp bursts of traffic to mimic real-world scenarios where usage suddenly skyrockets. It’s all about testing how quickly your API can adapt and whether it can stay stable during these spikes.

When to use it:

If your API supports systems that experience sudden peaks, like a ticketing platform or live-streaming app, spike testing is a must.

Example:

When concert tickets go on sale, traffic on a ticketing API can spike dramatically. Spike testing helps validate whether the API can handle those bursts without crashing.
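As a rough illustration, a spike test needs two things: a load profile with a short burst, and a way to tell how long the API takes to settle afterwards. The function names, the per-second granularity, and every number below are assumptions made for the sketch, not values from a real test.

```python
def spike_profile(baseline_rps, spike_rps, total_s, spike_start_s, spike_len_s):
    """Return the target request rate for each second of the test."""
    profile = []
    for second in range(total_s):
        in_spike = spike_start_s <= second < spike_start_s + spike_len_s
        profile.append(spike_rps if in_spike else baseline_rps)
    return profile


def recovery_seconds(latency_by_second, spike_end_s, healthy_ms):
    """Seconds after the spike ends until latency is back at or below healthy_ms.

    Returns None if latency never recovers within the recorded data.
    """
    for offset, latency in enumerate(latency_by_second[spike_end_s:]):
        if latency <= healthy_ms:
            return offset
    return None


if __name__ == "__main__":
    # Illustrative: 50 req/s baseline with a 2-second burst at 500 req/s.
    print(spike_profile(50, 500, total_s=10, spike_start_s=3, spike_len_s=2))
    observed_ms = [100, 100, 100, 900, 950, 700, 400, 150, 120, 110]  # made-up latencies
    print(recovery_seconds(observed_ms, spike_end_s=5, healthy_ms=200))
```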

Endurance Testing

Purpose: To check how stable your API is over long periods of use.

What it’s about:

Endurance testing, also known as soak testing, keeps your API under moderate, steady traffic for hours—or even days. The goal is to spot issues that only show up over time, like memory leaks, error accumulation, or performance slowdowns.

When to use it:

This type of testing is ideal for APIs with constant use, like streaming services or financial platforms, where reliability over time is critical.

Example:

A streaming API used during a live marathon can be tested for hours to verify it doesn’t degrade in performance as time goes on.
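Slow degradation is easier to spot as a trend than by eye. One simple option, sketched below, is to average latency per time window (say, every ten minutes) and fit a straight line through those averages; a clearly positive slope suggests the API is getting slower as the run goes on. The function names and the 1 ms-per-window threshold are hypothetical, and the input needs at least two windows.

```python
from statistics import mean


def latency_drift(window_averages):
    """Least-squares slope of latency across equally spaced windows (ms per window)."""
    xs = range(len(window_averages))
    x_bar, y_bar = mean(xs), mean(window_averages)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, window_averages))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    return numerator / denominator


def degrades_over_time(window_averages, max_drift_ms=1.0):
    """True when latency grows faster than the allowed drift per window."""
    return latency_drift(window_averages) > max_drift_ms


if __name__ == "__main__":
    steady = [100, 101, 99, 100, 100]
    leaking = [100, 104, 109, 115, 122]  # made-up averages that creep upward
    print(degrades_over_time(steady), degrades_over_time(leaking))
```

The same check can be pointed at memory usage or error counts per window to look for leaks and error accumulation.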

When to Combine All These Tests

Using these tests together gives you a full picture of your API’s performance. Load testing shows how it handles regular traffic, stress testing reveals its limits, spike testing checks how it reacts to sudden bursts, and endurance testing verifies that it stays stable over time.

Together, they reduce risks and help you deliver a reliable, high-performing API.

Want to simulate user behavior that actually reflects how people use your product? Explore our performance testing services and contact us!

Common Challenges in API Performance Testing and How to Address Them

API performance testing can be tricky, especially when aiming for results that truly reflect production conditions. Below, we explore common challenges and practical strategies to overcome them.

#1 Creating Realistic Test Environments

The Challenge: Reproducing a production-like setup during testing is easier said than done. It requires accurate replication of hardware, software configurations, network conditions, and user interactions. A poorly designed test environment can lead to misleading results that fail to represent real-world behavior.

How to Solve It:

- Mirror production infrastructure: Use the same servers, databases, and configurations where possible. If that’s impractical, emulate key aspects like latency or bandwidth limits.

- Generate meaningful test data: Create datasets that match the scale, variety, and patterns of real user inputs. Avoid generic or static data that doesn’t reflect actual usage.

- Leverage monitoring tools: Implement tools to track the environment during testing, checking whether it stays consistent and comparable to production.

#2 Handling API Rate Limits

The Challenge: Many APIs impose rate limits to control traffic, restricting the number of requests allowed within a specific timeframe. These limits can disrupt load or stress tests, preventing testers from evaluating high-volume scenarios.

How to Solve It:

- Request temporary rate limit adjustments: Collaborate with the API provider or development team to temporarily increase rate limits for testing purposes.

- Use staggered or phased requests: Simulate high-volume traffic gradually instead of all at once, working within the rate constraints.

- Test with sandbox environments: If available, use a sandbox or staging version of the API that has relaxed rate limits specifically for testing.
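The staggered approach can be sketched as a send schedule that spaces requests evenly, so that no time window holds more requests than the limit allows. The helper names below are hypothetical, and the second function exists only to check a schedule before running it; real tools usually expose pacing or constant-throughput settings that do the same job.

```python
def paced_schedule(total_requests, limit_per_window, window_s=60.0):
    """Evenly spaced send times (in seconds) that respect a per-window request limit."""
    interval = window_s / limit_per_window
    return [i * interval for i in range(total_requests)]


def max_in_any_window(send_times, window_s=60.0):
    """Largest number of requests inside any sliding window of window_s seconds.

    Expects send_times sorted in ascending order.
    """
    worst, start = 0, 0
    for end, t in enumerate(send_times):
        # Drop requests that are a full window (or more) older than the current one.
        while t - send_times[start] >= window_s:
            start += 1
        worst = max(worst, end - start + 1)
    return worst


if __name__ == "__main__":
    # Illustrative: 300 requests against a limit of 60 per minute.
    schedule = paced_schedule(total_requests=300, limit_per_window=60)
    print(len(schedule), max_in_any_window(schedule))
```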

#3 Simulating Diverse User Behavior

The Challenge: Real users interact with APIs in varied ways—some make frequent requests, while others interact sporadically. Reproducing this diversity during testing is crucial but challenging to implement accurately.

How to Solve It:

- Segment user profiles: Analyze historical usage data to identify common user patterns and create profiles (e.g., high-frequency users, occasional users).

- Randomize request patterns: Add variability to testing scripts by randomizing request timing, frequency, and types of actions.

- Incorporate real-world scenarios: Simulate events like spikes caused by promotions or seasonal usage shifts to reflect actual user behavior more closely.
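Here is one way the profile and randomization ideas might look in a test script. The profile names, weights, actions, and think-time ranges are invented for illustration; in a real project they would come from your own usage analytics.

```python
import random

# Hypothetical profiles: share of users, pause range between calls (seconds), and actions.
PROFILES = {
    "high_frequency": {"weight": 0.2, "think_s": (0.1, 1.0), "actions": ["search", "view", "add_to_cart"]},
    "occasional": {"weight": 0.8, "think_s": (5.0, 30.0), "actions": ["search", "view"]},
}


def build_session(rng, steps=5):
    """Pick a profile by weight, then plan (action, pause) steps for one virtual user."""
    names = list(PROFILES)
    weights = [PROFILES[name]["weight"] for name in names]
    chosen = rng.choices(names, weights=weights, k=1)[0]
    profile = PROFILES[chosen]
    low, high = profile["think_s"]
    plan = [(rng.choice(profile["actions"]), rng.uniform(low, high)) for _ in range(steps)]
    return chosen, plan


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so a test run can be reproduced
    for _ in range(3):
        print(build_session(rng))
```

Seeding the random generator keeps the variability while still letting you replay the exact same traffic when you need to compare two runs.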

#4 Adapting to Changes in Production

The Challenge: Production environments are rarely static. Software updates, infrastructure upgrades, and shifting usage patterns can alter API performance, rendering past testing results obsolete.

How to Solve It:

- Run tests regularly: Integrate performance testing into CI/CD pipelines to validate each new update or release.

- Monitor production metrics: Use real-time analytics to track changes in user behavior or infrastructure and adjust tests accordingly.

- Automate regression testing: Automatically rerun tests after each change to identify potential performance regressions before deployment.
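An automated regression check can be as simple as comparing the latest run against a stored baseline with some tolerance for noise. The sketch below assumes metrics where a higher value is worse (latency, error rate); the metric names and the 10% tolerance are illustrative choices, not recommendations.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return metrics that got worse than the baseline by more than the tolerance.

    Both dicts map a metric name to a value where higher means worse.
    Metrics missing from the current run are skipped.
    """
    regressions = {}
    for metric, base_value in baseline.items():
        new_value = current.get(metric)
        if new_value is None:
            continue
        if new_value > base_value * (1 + tolerance):
            regressions[metric] = (base_value, new_value)
    return regressions


if __name__ == "__main__":
    baseline = {"p95_ms": 400, "error_rate": 0.01}   # made-up stored baseline
    current = {"p95_ms": 460, "error_rate": 0.01}    # made-up latest run
    print(find_regressions(baseline, current))
```

A pipeline step could fail the build whenever the returned dictionary is not empty.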

Now that we’ve identified the key challenges, it’s time to focus on designing test scenarios that truly reflect how APIs are used in the real world. This is where we turn knowledge into action, uncovering critical insights and setting your APIs up for success.

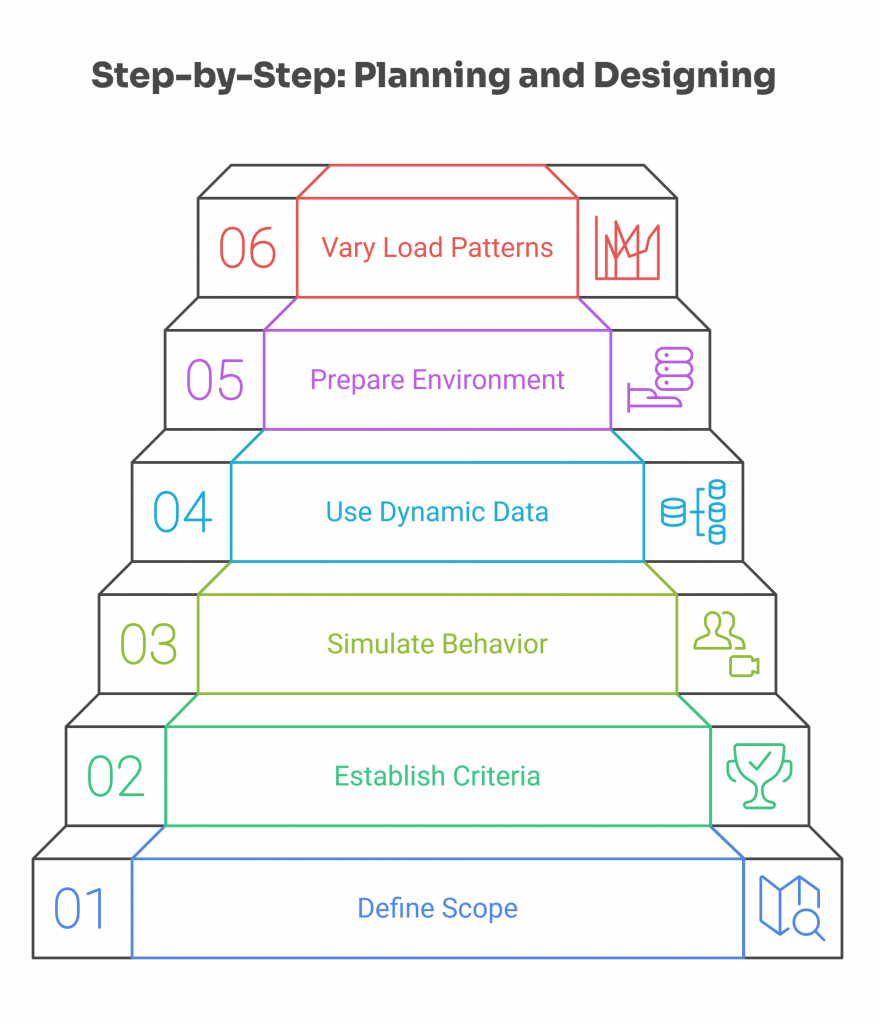

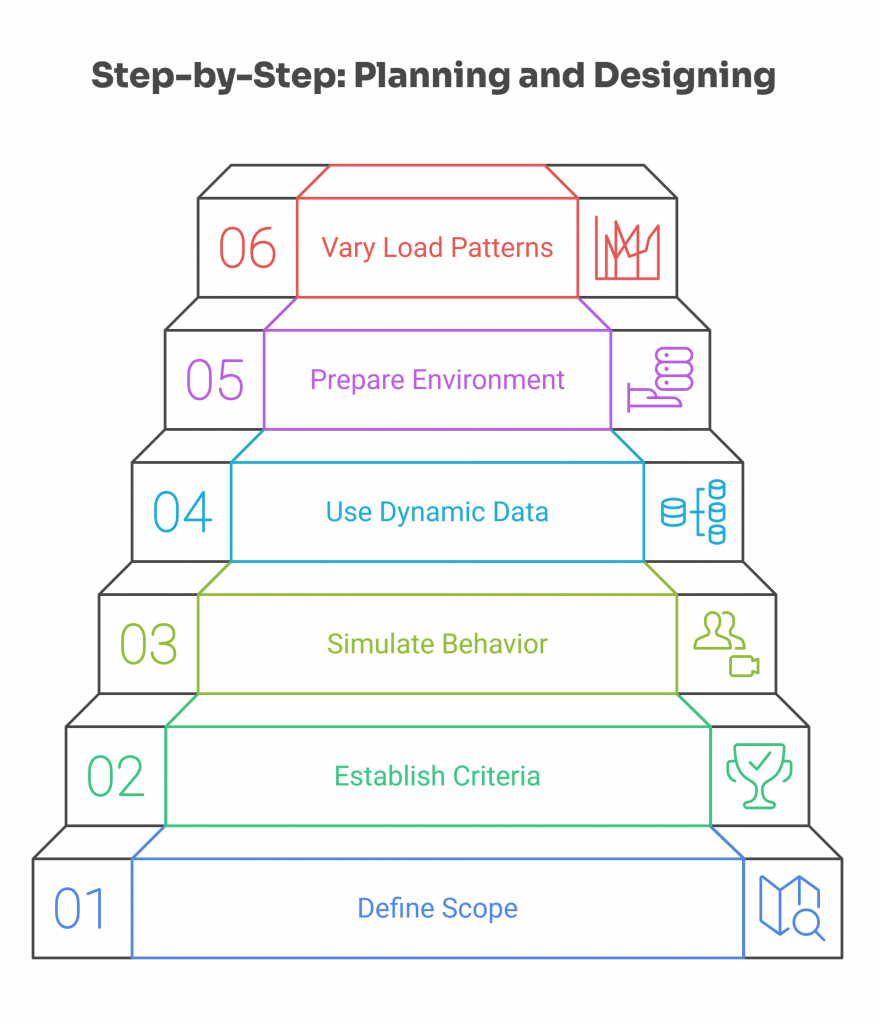

Key Stages for Designing API Performance Test Scenarios

How can you create test scenarios that truly reflect real-world API usage? Here, we present actionable strategies to help you uncover valuable insights and validate that your APIs perform when it matters most.

1. Define the Scope and Objectives

Clarify what you want to evaluate: response time under typical usage, stability over time, or resilience during traffic surges. Identify the key endpoints and transactions that users rely on most.

Example: In an e-commerce API, testing cart operations and checkout flows is more meaningful than testing the contact form.

2. Establish Success Criteria

Set clear performance goals, such as acceptable response time (e.g., under 500ms), error thresholds (e.g., below 1%), or throughput targets (e.g., 100 transactions per second). These targets guide the test and help you interpret results with context.

Example: For a payment gateway API, a response time over 1 second during checkout may be unacceptable, even under moderate load.
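Success criteria are most useful when they are written down in a form a script can check. The sketch below encodes the example targets mentioned above (under 500 ms, below 1% errors, 100 transactions per second); the `Criteria` class, the `evaluate` function, and the result keys are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criteria:
    max_response_ms: float = 500.0      # response time must stay under this
    max_error_rate: float = 0.01        # error rate must stay below this
    min_throughput_tps: float = 100.0   # throughput must reach at least this


def evaluate(results, criteria=Criteria()):
    """Return a list of readable failures; an empty list means the run passed."""
    failures = []
    if results["response_ms"] >= criteria.max_response_ms:
        failures.append(f"response time {results['response_ms']} ms is not under {criteria.max_response_ms} ms")
    if results["error_rate"] >= criteria.max_error_rate:
        failures.append(f"error rate {results['error_rate']:.2%} is not below {criteria.max_error_rate:.2%}")
    if results["throughput_tps"] < criteria.min_throughput_tps:
        failures.append(f"throughput {results['throughput_tps']} tps is below {criteria.min_throughput_tps} tps")
    return failures


if __name__ == "__main__":
    # Made-up results from a test run.
    print(evaluate({"response_ms": 650, "error_rate": 0.004, "throughput_tps": 130}))
```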

3. Simulate Realistic User Behavior

User traffic isn’t uniform. Some users send bursts of requests, others interact slowly or sporadically. Your tests should reflect this range.

Example: In a healthcare API, a doctor might request dozens of patient records in quick succession, while a patient may just log in and check one lab result.

Include:

- Varied request sequences

- Different data sets

- Pauses between calls (simulating human interaction time)

4. Use Dynamic and Varied Test Data

Test inputs should reflect actual usage, not static samples. Incorporate diversity in parameters, payloads, and user states. This reveals how the API handles different scenarios and avoids false confidence based on idealized conditions.

Example: A search API should be tested with common queries (“shoes”) and edge cases (empty query, long strings, special characters).

Use anonymized production-like data or generate synthetic data that reflects real-world patterns—without exposing sensitive information.
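For the search example, a synthetic data generator might blend common queries with a small share of edge cases. The query lists, the 10% edge ratio, and the function names below are assumptions made for illustration.

```python
import random

COMMON_QUERIES = ["shoes", "running shoes", "laptop", "phone case"]  # illustrative values


def edge_case_queries(long_length=2000):
    """Edge cases from the example above: empty query, long string, special characters."""
    return ["", "a" * long_length, "!@#$%^&*()"]


def query_mix(rng, count, edge_ratio=0.1):
    """Mostly common queries, with roughly edge_ratio of edge cases mixed in."""
    edges = edge_case_queries()
    return [
        rng.choice(edges) if rng.random() < edge_ratio else rng.choice(COMMON_QUERIES)
        for _ in range(count)
    ]


if __name__ == "__main__":
    rng = random.Random(7)  # seeded so the same data set can be regenerated
    for query in query_mix(rng, count=10):
        print(repr(query[:40]))
```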

5. Prepare a Realistic Test Environment

Testing on a simplified setup limits insight. Your environment should match production as closely as possible in architecture, network latency, authentication flows, and rate limits.

Example: If your live API enforces token-based authentication and has a 1000 req/min rate limit, the test environment should mimic that behavior to expose real constraints.

Set up monitoring to track performance during the test. Collect logs and metrics to support later analysis.

6. Vary Load Patterns Across Scenarios

Not all tests need to simulate maximum pressure. Plan for different usage patterns:

- Gradual ramp-up

- Sudden spikes

- Long-duration activity

Each pattern helps you uncover different types of performance issues.

Example: In a social media API, a spike may simulate the traffic surge when a post goes viral, while endurance testing might reflect usage during overnight background processes.

How API Architecture Impacts Performance Testing

The architecture behind an API shapes its behavior under stress, and by extension, how it should be tested. Whether stateless or stateful, synchronous or event-driven, the underlying structure affects scalability, response time, and overall resilience.

Stateless vs. Stateful APIs

- Stateless APIs, like REST, treat each request independently. This simplicity often makes them easier to scale and test, since no session data needs to persist.

Example: When testing a RESTful product search API, each request can be simulated in isolation—no need to maintain user session or order history between calls.

- Stateful APIs, such as many SOAP implementations, retain context between requests. This adds complexity, requiring test scenarios that replicate multi-step flows and preserve user or transaction state.

Example: A SOAP-based insurance claims API might require multiple sequential calls: submitting a form, waiting for approval, and receiving claim status—each dependent on the last.

Synchronous vs. Asynchronous APIs

- Synchronous APIs expect a direct response after a request. Testing these typically involves measuring latency, throughput, and error rates in real time.

Example: A login API should return a success or failure instantly. If it exceeds a 300ms threshold under typical usage, it may impact user satisfaction.

- Asynchronous APIs (common in event-driven or messaging-based architectures) involve delayed or callback-based responses. Here, performance testing must account for message queues and delayed processing, and observe how the system handles message volume over time.

Example: In a ticketing system, submitting a booking request may not immediately confirm availability. Performance testing needs to evaluate not just the API call, but how background services process the request and return a final state.
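For asynchronous flows, the number that matters is often the time from submission to final state, not the latency of the first call. A minimal sketch, assuming the API exposes a status the test can poll and that the status reads "pending" until processing finishes (both are assumptions, as are the function name and defaults):

```python
import time


def wait_for_final_state(get_status, timeout_s=30.0, poll_interval_s=0.5,
                         clock=time.monotonic, sleep=time.sleep):
    """Poll get_status() until it leaves 'pending'; return (final_status, elapsed_seconds).

    Returns ('timeout', elapsed) if the request is still pending after timeout_s.
    clock and sleep are injectable so the function can be tested without waiting.
    """
    started = clock()
    while True:
        status = get_status()
        elapsed = clock() - started
        if status != "pending":
            return status, elapsed
        if elapsed >= timeout_s:
            return "timeout", elapsed
        sleep(poll_interval_s)


if __name__ == "__main__":
    # Stand-in for a booking request that confirms on the third status check.
    answers = iter(["pending", "pending", "confirmed"])
    print(wait_for_final_state(lambda: next(answers), poll_interval_s=0.1))
```

Where the system sends callbacks or emits events instead, the same end-to-end measurement applies, with the clock stopped on receipt of the callback.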

Microservices and Distributed Architectures

In a microservices-based system, APIs interact with multiple services to fulfill a single request. This distributed nature introduces more points of failure—and more opportunities for performance degradation.

Example: A checkout API might depend on services for inventory, pricing, discounts, payment, and user authentication. Testing only the surface endpoint would miss slowdowns in any of those dependencies.

To test effectively in these setups:

- Replicate inter-service communication: Use contract testing or service virtualization where necessary.

- Monitor individual service metrics: Don’t rely solely on high-level KPIs—dig into latency, retries, and failure rates per service.

- Simulate failure conditions: Test how the API behaves if a dependent service is slow or unavailable.
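To illustrate per-service monitoring, the sketch below averages latency per dependency across a set of request traces and ranks services from slowest to fastest. The trace format (a dictionary of service name to milliseconds) and the sample values are hypothetical; in practice this data would come from your tracing or APM tooling.

```python
from collections import defaultdict


def latency_by_service(traces):
    """Average latency per service, slowest first.

    Each trace maps a service name to the milliseconds it spent on one request.
    """
    totals, counts = defaultdict(float), defaultdict(int)
    for trace in traces:
        for service, ms in trace.items():
            totals[service] += ms
            counts[service] += 1
    averages = {service: totals[service] / counts[service] for service in totals}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Made-up traces for two checkout requests.
    traces = [
        {"inventory": 20, "payment": 300, "auth": 10},
        {"inventory": 40, "payment": 500, "auth": 10},
    ]
    for service, avg_ms in latency_by_service(traces):
        print(f"{service}: {avg_ms:.0f} ms")
```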

Already exploring tools? We’ve broken down the most relevant performance testing tools in a dedicated guide, with pros, cons, and real-world use cases.

Best Practices and Tools for API Performance Testing

Want to boost your API’s reliability and efficiency? In this section, we share actionable best practices and tools to help you design smarter tests, identify bottlenecks faster, and deliver APIs that consistently perform under pressure.

Effective Test Creation

At Abstracta, effective API performance testing begins with precise test creation. Carefully designed scenarios reproduce realistic user loads and actual usage patterns, enabling developers and quality engineers to swiftly identify performance issues such as excessive resource utilization or increased network usage.

Leveraging Performance Testing Tools

Selecting appropriate API performance testing tools enhances test accuracy. Tools such as JMeter, Postman, Gatling, or LoadRunner automate test scripts, capturing essential performance metrics like response time, error rate, and status codes. These insights highlight potential performance degradation and help teams define precise performance benchmarks.

Continuous Monitoring and Detailed Analysis

Continuous API monitoring improves observability into system performance over time. At Abstracta, we emphasize detailed analysis of this data to proactively spot performance bottlenecks, maintain API speed, and meet defined service level objectives, ultimately preserving user satisfaction.

Accelerate your cloud journey with Abstracta & Datadog Professional Services! We joined forces with Datadog to leverage real-time infrastructure monitoring and security analysis solutions.

Integrating Performance Testing into CI/CD

Integrating API performance testing into continuous integration (CI) and continuous delivery (CD) pipelines helps identify performance issues early, significantly enhancing system reliability. Running automated API tests on every code change gives immediate feedback, reducing risk and enabling rapid adjustments to meet established performance benchmarks.

Simulating Complex Architectures and Dependencies

Our approach includes simulating interactions among different software components and API dependencies. By accurately replicating complex environments, including dynamic data, multiple requests, and various load conditions, our teams effectively uncover subtle performance limitations or issues linked to data migration, inter-service latency, or unexpected behavior under increased user traffic.

Don’t miss this article! API Testing Guide: A Step-by-Step Approach for Robust APIs

Wrapping Up – API Performance Testing

API performance testing is essential to deliver reliable digital services for all industries. From initial API development through all stages of software development, at Abstracta we apply strategies ranging from basic performance testing to specialized techniques such as load testing, stress testing, spike testing, and endurance testing.

By combining multiple tools to generate diverse API requests, our teams determine each API’s breaking point and measure its performance in detail under load.

Overall, continuous performance validation helps us proactively manage issues that affect performance, consistently meeting user expectations across all expected traffic levels.

How We Can Help You

With over 16 years of experience and a global presence, Abstracta is a leading technology solutions company with offices in the United States, Chile, Colombia, and Uruguay. We specialize in software development, AI-driven innovations & copilots, and end-to-end software testing services.

We believe that building strong ties propels us further. That’s why we’ve forged robust partnerships with industry leaders like Microsoft, Datadog, Tricentis, Perforce, and Sauce Labs, empowering us to incorporate cutting-edge technologies.

Our holistic approach enables us to support you across the entire software development life cycle.

Visit our solutions page and partner with us for API performance testing services!

Follow us on LinkedIn & X to be part of our community!


Natalie Rodgers, Content Manager at Abstracta
