Understand how volume testing reveals real performance risks—beyond user load—by validating data flows, storage, and system behavior under continuous scale.

When a system starts to fail under pressure, the cause is often not the number of users, but the volume of data. Unstable processing, slow queries, corrupted records: these issues don’t appear with small inputs or perfect test scripts. They surface when the system operates at scale, with real datasets and full environments.

This article goes beyond definitions to explore how volume testing works in practice. You’ll find concrete use cases, testing dimensions that actually matter, and how to approach it not as a one-off task, but as part of your QA strategy across development, releases, and maintenance.


What is Volume Testing?

Volume testing, sometimes referred to as flood testing, is a non-functional testing method that assesses how a system behaves when subjected to large amounts of data. Unlike load testing, which focuses on user concurrency, volume testing concentrates on the amount of data the system can handle without errors or slowdowns.

Why Use Volume Testing in Your Projects?

Incorporating volume testing into the software development process is essential for identifying performance issues and confirming that the system behaves predictably under high data loads. This is particularly important for systems that manage financial transactions, user-generated content, or real-time data streams, where data-handling capacity can directly impact the user experience.

Benefits of Volume Testing

- Identify performance bottlenecks: This type of testing helps pinpoint where slow response times or system failures might occur under heavy data loads.

- Validate data integrity: It verifies that information remains consistent and accurate, even when data handling capacity is pushed to the limit.

- Measure system response time: Understanding how the system’s response time changes under varying data volumes helps prioritize performance improvements.

- Test realistic scenarios: It focuses on real-world scenarios where data flow spikes or batch processing becomes critical.

- Support production-readiness: By replicating the production environment, testers can evaluate system components in conditions close to actual usage patterns.

Key Aspects of Volume Testing

Volume testing involves much more than generating massive datasets. These are the key aspects that guide our approach throughout the development lifecycle:

- Data Volume Scope: Focuses on the scale and diversity of data the system must support without failures or degradation.

- System Behavior Monitoring: Tracks how the application processes data over time—across services, APIs, and internal components.

- Data Integrity Validation: Verifies that data is consistently stored and handled under load, without corruption, duplication, or loss.

- Test Environment Consistency: Checks if testing is run in environments closely aligned with production to reflect real operational risks.

- Ongoing Metrics Visibility: Collects insights across sprints—on response time, throughput, and system stability—to support continuous improvement.

These aspects are not tied to a single phase. They are woven into every sprint, reinforcing quality from early development through post-release performance monitoring.
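The data integrity aspect above can be made concrete with a small comparison routine. The following is a minimal sketch, not a prescribed method: it assumes records are plain dictionaries with a unique `id` field, and the function names `record_digest` and `compare_datasets` are hypothetical helpers introduced only for illustration.

```python
import hashlib
from collections import Counter


def record_digest(record: dict) -> str:
    """Return a SHA-256 digest of a record that does not depend on key order."""
    canonical = "|".join(f"{key}={record[key]}" for key in sorted(record))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_datasets(source: list, stored: list, key: str = "id") -> dict:
    """Report lost, duplicated, and corrupted records after a bulk load."""
    source_digests = {r[key]: record_digest(r) for r in source}
    stored_digests = {r[key]: record_digest(r) for r in stored}
    stored_counts = Counter(r[key] for r in stored)

    lost = sorted(k for k in source_digests if k not in stored_digests)
    duplicated = sorted(k for k, n in stored_counts.items() if n > 1)
    corrupted = sorted(
        k for k, digest in source_digests.items()
        if k in stored_digests and stored_digests[k] != digest
    )
    return {"lost": lost, "duplicated": duplicated, "corrupted": corrupted}


if __name__ == "__main__":
    source = [{"id": i, "amount": i * 10} for i in range(5)]
    stored = [
        {"id": 0, "amount": 0},
        {"id": 1, "amount": 10},
        {"id": 1, "amount": 10},   # duplicated row
        {"id": 2, "amount": 999},  # corrupted value
        {"id": 3, "amount": 30},   # id 4 never arrived (lost)
    ]
    print(compare_datasets(source, stored))
```

In a real run, `source` would come from the generated input and `stored` from a read-back of the system under test; for very large datasets the comparison would likely need to be streamed or done inside the database rather than in memory.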

Mini-Glossary: Main Concepts in Volume Testing

- Data Integrity: Accuracy and consistency of stored and processed data.

- Batch Processing Volume Testing: Verifies how bulk data operations perform under volume.

- System Behavior: How software reacts under changing data conditions or stress.

- High Data Loads: Large inflow or processing of data over short periods.

- System’s Response Time: Time taken by the system to respond under varying volumes.

How Volume Testing Works: Strategy, Setup, and Execution

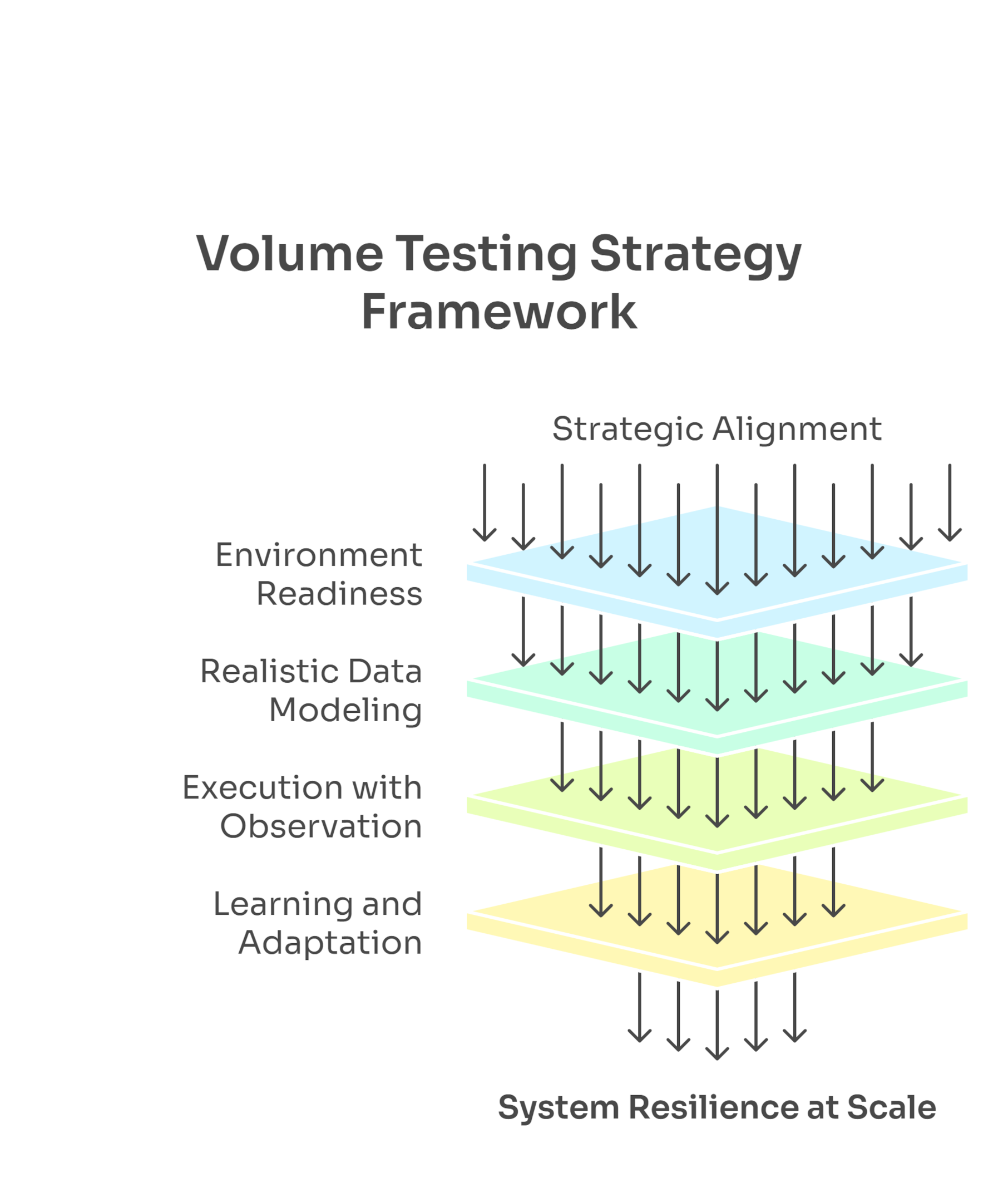

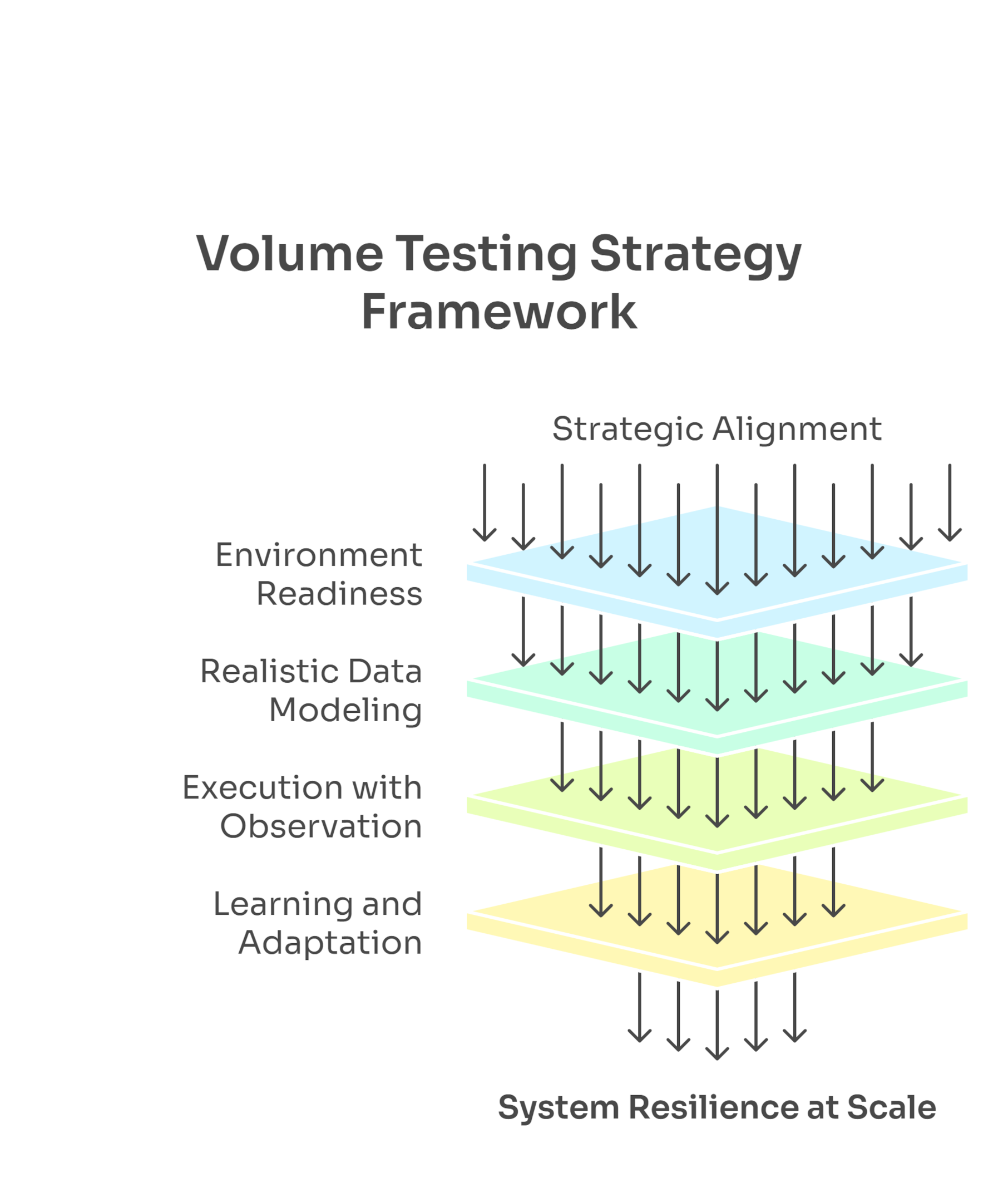

Volume testing operates as a continuous layer within your testing strategy. Rather than being isolated in late-stage phases, it evolves iteratively, across development and maintenance:

- Strategic Alignment: Start by identifying high-impact data flows and aligning them with business priorities and performance expectations.

- Environment Readiness: Create a stable, production-like environment to surface real constraints and validate behavior accurately.

- Realistic Data Modeling: Design and generate datasets that simulate real usage, aligned with expected traffic, formats, and storage behavior.

- Execution with Observation: Introduce growing data volumes progressively, tracking system behavior, data flow stability, and user-impact metrics.

- Learning and Adaptation: Treat each test run as a source of insights, feeding improvements into future sprints and long-term scalability planning.

When treated as a continuous discipline, this type of testing becomes a powerful mechanism for early risk detection and system resilience at scale.
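The "Execution with Observation" step can be sketched as a ramp that adds data in growing increments and records timings after each one. This is an illustrative sketch only: it uses an in-memory SQLite database as a stand-in for the system under test, and the `events` table, the `bucket = 7` query, and the step sizes are assumptions made for the example.

```python
import sqlite3
import time


def run_volume_step(conn, start_id, row_count):
    """Insert row_count rows, then time one representative query."""
    rows = [
        (start_id + i, f"user-{start_id + i}", (start_id + i) % 100)
        for i in range(row_count)
    ]

    began = time.perf_counter()
    with conn:  # one transaction per step; commits on success
        conn.executemany(
            "INSERT INTO events (id, name, bucket) VALUES (?, ?, ?)", rows
        )
    insert_seconds = time.perf_counter() - began

    began = time.perf_counter()
    conn.execute("SELECT COUNT(*) FROM events WHERE bucket = 7").fetchone()
    query_seconds = time.perf_counter() - began

    total_rows = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
    return {
        "total_rows": total_rows,
        "insert_seconds": insert_seconds,
        "query_seconds": query_seconds,
    }


def ramp(step_sizes):
    """Apply each step in order and collect one measurement per step."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE events (id INTEGER PRIMARY KEY, name TEXT, bucket INTEGER)"
    )
    results, next_id = [], 0
    try:
        for row_count in step_sizes:
            results.append(run_volume_step(conn, next_id, row_count))
            next_id += row_count
    finally:
        conn.close()
    return results


if __name__ == "__main__":
    for step in ramp([1_000, 10_000, 100_000]):
        print(step)
```

Comparing `query_seconds` across steps gives a first indication of how response time changes as the dataset grows; against a real system, the same loop would call the application's own ingestion and query paths instead of SQLite.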


When to Perform Volume Testing

- Before launching data-intensive applications.

- Ahead of expected user traffic peaks (e.g., sales events).

- When scaling infrastructure or migrating to cloud environments.

- In systems that require guaranteed data storage and retention.

Best Practices

- Simulate real-world conditions: Set up the test environment to mirror production as closely as possible.

- Generate realistic test data: Use tools or scripts to produce datasets that reflect actual usage patterns.

- Monitor system performance: Track response time, data processing efficiency, and resource utilization.

- Prioritize test cases: Focus on test scenarios with the most impact on business-critical flows.

- Leverage automated tools: Automation speeds up test execution and makes runs repeatable and comparable.
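As one way to approach the "generate realistic test data" practice, the sketch below writes a seeded, reproducible CSV dataset with a skewed customer distribution and a few awkward text values. The column names, distribution parameters, and the `generate_orders` function are assumptions chosen for illustration, not values taken from any real system.

```python
import csv
import io
import random


def generate_orders(row_count, seed=42):
    """Return CSV text with row_count synthetic orders (plus a header row)."""
    rng = random.Random(seed)  # seeded so every run produces the same data
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["order_id", "customer_id", "amount", "note"])

    for order_id in range(1, row_count + 1):
        # Heavy-tailed distribution: most orders come from low customer ids.
        customer_id = int(rng.paretovariate(1.2))
        # Log-normal amounts: many small orders, occasional large ones.
        amount = round(rng.lognormvariate(3.0, 1.0), 2)
        # Mix in empty, non-ASCII, comma-containing, and long values.
        note = rng.choice(["", "gift", "Ünïcode, with comma", "x" * 200])
        writer.writerow([order_id, customer_id, amount, note])

    return buffer.getvalue()


if __name__ == "__main__":
    text = generate_orders(5)
    print(text)
```

Seeding the generator keeps runs comparable across sprints, while the skewed distributions and edge-case strings are meant to approximate the irregularity of production data better than uniform random values would.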

Industry Use Cases for Volume Testing

While the concept of volume testing applies broadly, its impact becomes especially clear in industries where data volume isn’t just high—it’s continuous, sensitive, or business-critical. These examples show how it addresses specific risks in real-world contexts.

- Banking & Fintech: Validate the system’s ability to process large batches of financial transactions without data corruption.

- E-commerce: Enable accurate handling of large product catalogs, order histories, and customer data.

- Healthcare: Verify that electronic health record systems maintain data integrity under high patient volume.

- Telecommunications: Test how infrastructure manages high message and call data loads during peak hours.

- Education Platforms: Confirm performance with thousands of concurrent exam or lesson submissions.

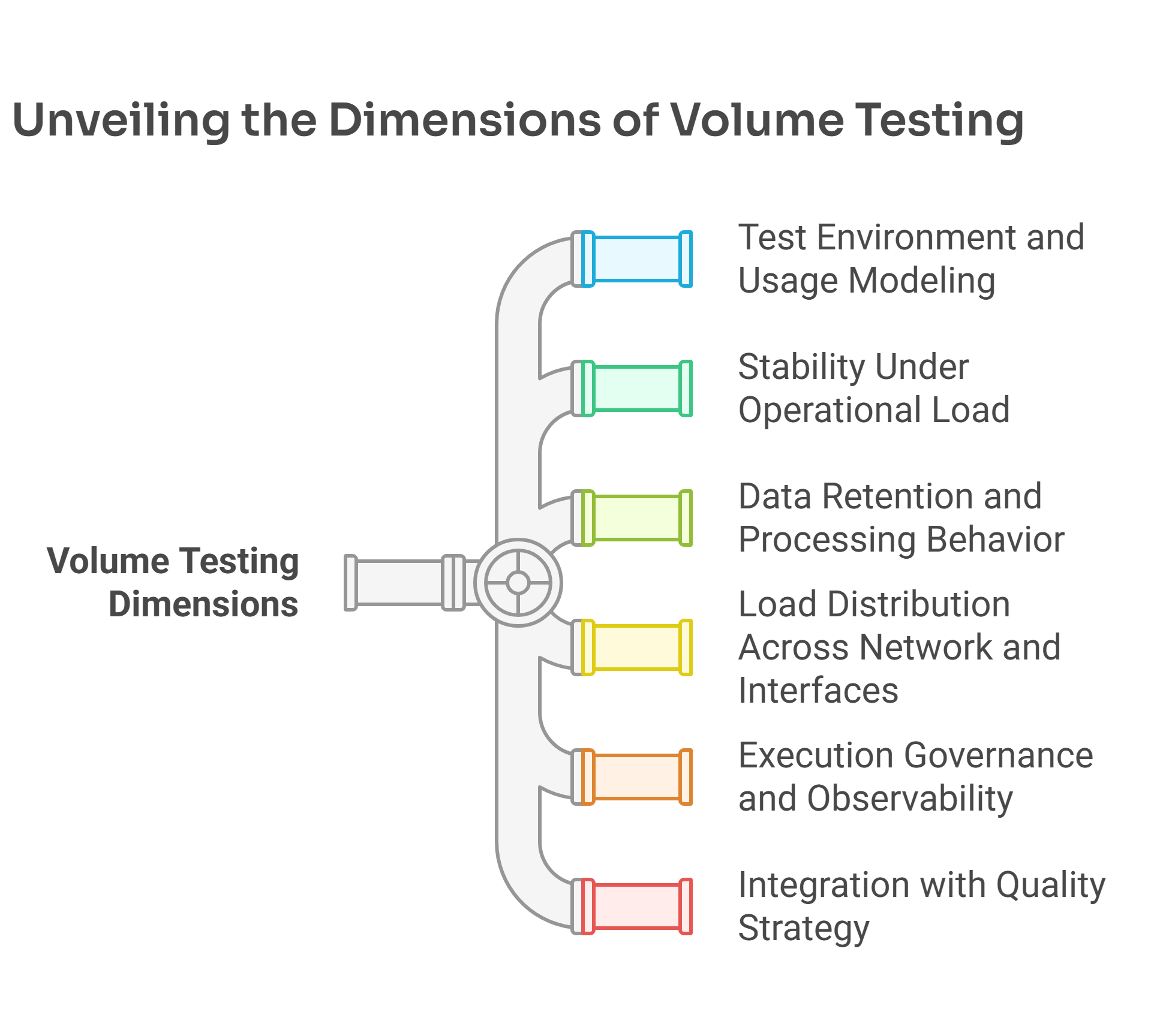

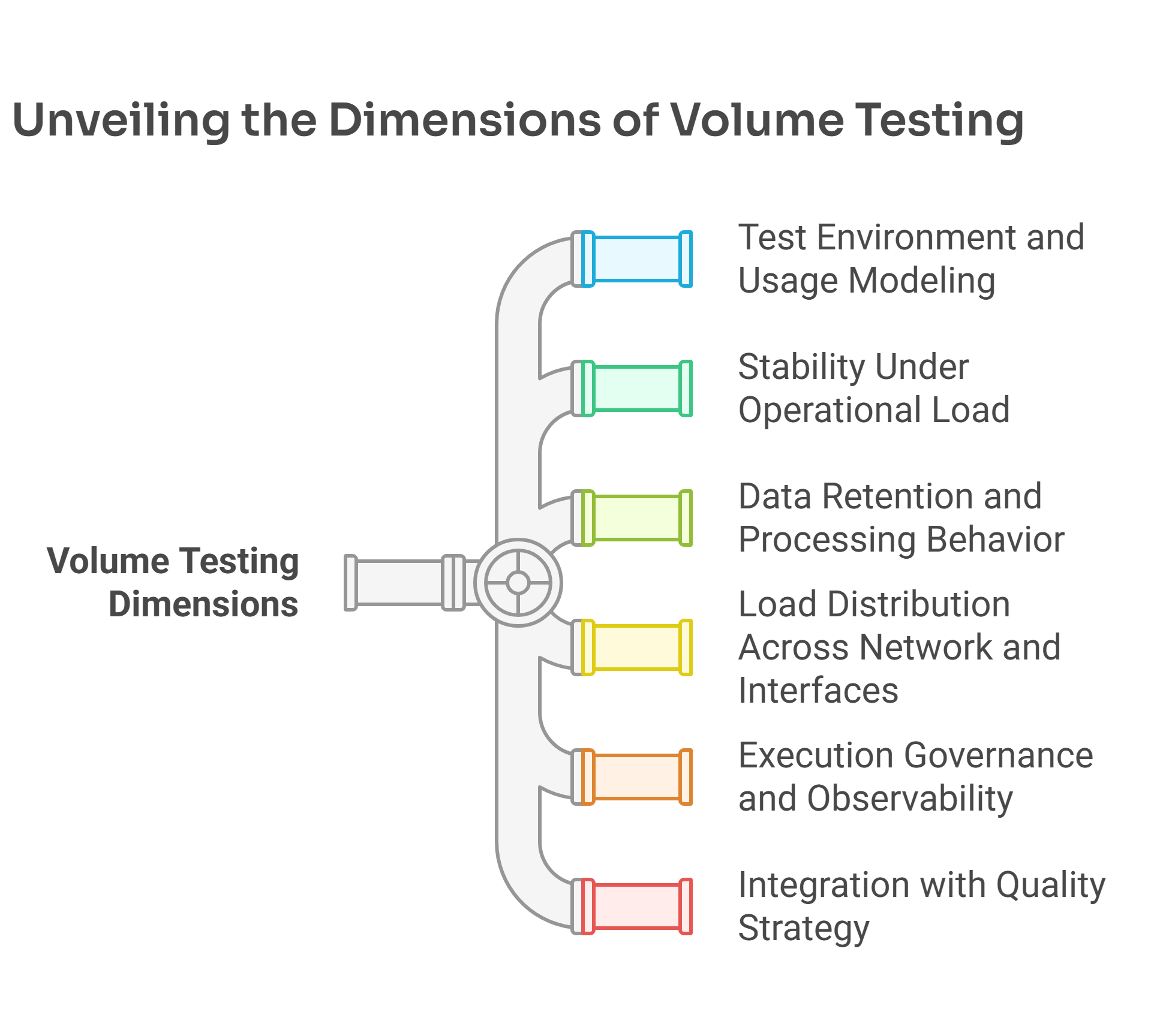

Advanced Dimensions of Volume Testing

Volume testing becomes significantly more valuable when adapted to the system’s architecture, data flows, and operational risk profile. Below, we explore six dimensions that deepen its effectiveness and allow teams to detect performance and data integrity issues under real operating conditions.

Test Environment and Usage Modeling

A test is only as reliable as the test environment setup it runs in. To produce useful insights, the environment must reflect production systems, including data stores, integrations, and network behavior. Combined with an understanding of actual usage patterns, teams can simulate conditions that mirror real-world volume and timing, observing how the system performs across critical operations.

Stability Under Operational Load

Volume testing provides evidence of whether the system remains stable under sustained or peak processing conditions. This includes transaction volume testing, where business-critical operations must complete without error, and batch processing volume testing, which checks how large record groups are handled in scheduled jobs. These tests confirm whether the platform can handle large volumes of data consistently.
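A batch processing check of this kind can be sketched as a chunked load followed by a reconciliation of counts and totals. The example below is a simplified illustration that assumes an in-memory SQLite `ledger` table and hypothetical helper names (`open_ledger`, `run_batch_job`, `reconcile`); a real scheduled job would have its own storage and error handling.

```python
import sqlite3
from itertools import islice


def chunked(iterable, size):
    """Yield lists of up to `size` items from any iterable."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def open_ledger():
    """Create an in-memory database with an empty ledger table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE ledger (tx_id INTEGER PRIMARY KEY, amount_cents INTEGER)"
    )
    return conn


def run_batch_job(conn, transactions, batch_size=1000):
    """Insert transactions in batches, one database transaction per batch."""
    processed = 0
    for chunk in chunked(transactions, batch_size):
        with conn:  # a failed batch rolls back without affecting earlier ones
            conn.executemany(
                "INSERT INTO ledger (tx_id, amount_cents) VALUES (?, ?)", chunk
            )
        processed += len(chunk)
    return processed


def reconcile(conn, expected_count, expected_total):
    """Check that the stored row count and amount total match the input."""
    count, total = conn.execute(
        "SELECT COUNT(*), COALESCE(SUM(amount_cents), 0) FROM ledger"
    ).fetchone()
    return count == expected_count and total == expected_total


if __name__ == "__main__":
    txs = [(i, i * 3) for i in range(25_000)]
    conn = open_ledger()
    processed = run_batch_job(conn, iter(txs), batch_size=1000)
    print(processed, reconcile(conn, len(txs), sum(a for _, a in txs)))
    conn.close()
```

Amounts are stored as integer cents so that the reconciliation total is exact; comparing floating-point sums at high volume could produce false mismatches.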

Data Retention and Processing Behavior

As data scales, systems must maintain structure, not just speed. Testing should include both single-system and distributed volume testing, validating how data storage mechanisms retain and organize information. Failures in retention, indexing, or access latency often signal structural limits. These scenarios help teams catch breakdowns before they compromise real operations or performance degradation becomes visible to users.
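One structural limit that tends to surface only at volume is a query that silently falls back to a full table scan. The sketch below illustrates how that could be checked, using SQLite's `EXPLAIN QUERY PLAN` as a stand-in for whatever plan inspection the production database offers. The `records` table and the `idx_records_account` index name are assumptions for the example.

```python
import sqlite3


def build_table(row_count):
    """Create an in-memory table filled with row_count synthetic records."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE records (id INTEGER PRIMARY KEY, account TEXT, payload TEXT)"
    )
    with conn:
        conn.executemany(
            "INSERT INTO records (account, payload) VALUES (?, ?)",
            ((f"acct-{i % 500}", "x" * 50) for i in range(row_count)),
        )
    return conn


def query_plan(conn, sql, params=()):
    """Return SQLite's plan description for a query as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " ".join(str(row[-1]) for row in rows)  # last column holds the detail


if __name__ == "__main__":
    conn = build_table(100_000)
    sql = "SELECT payload FROM records WHERE account = ?"

    print("before:", query_plan(conn, sql, ("acct-7",)))
    conn.execute("CREATE INDEX idx_records_account ON records (account)")
    print("after: ", query_plan(conn, sql, ("acct-7",)))
    conn.close()
```

Before the index exists, the plan describes a scan of the whole table; afterwards it refers to the index by name. The exact wording of the plan text varies between SQLite versions, so a check should look for the index name rather than match the full string.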

Load Distribution Across Network and Interfaces

When testing across systems, volume must move reliably. Network volume testing assesses infrastructure capacity under simultaneous transfers, while data transfer volume testing verifies whether communication layers handle throughput without message loss or excessive latency. These tests are critical in microservices and API-driven architectures where volume doesn’t stay local.
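Message loss during transfer is commonly detected by tagging each message with a sequence number and auditing what arrives. The following sketch illustrates the idea with an in-process bounded queue standing in for a real network channel or message broker; the `transfer` and `check_sequence` functions are hypothetical names used only here.

```python
import queue
import threading

_SENTINEL = None  # marks the end of the stream


def transfer(message_count, queue_size=100):
    """Send numbered messages through a bounded queue and return what arrived."""
    channel = queue.Queue(maxsize=queue_size)
    received = []

    def producer():
        for seq in range(message_count):
            channel.put(seq)  # blocks when the queue is full (backpressure)
        channel.put(_SENTINEL)

    def consumer():
        while True:
            item = channel.get()
            if item is _SENTINEL:
                break
            received.append(item)

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return received


def check_sequence(received, expected_count):
    """Report missing, duplicated, and out-of-order sequence numbers."""
    seen = set(received)
    return {
        "missing": sorted(set(range(expected_count)) - seen),
        "duplicates": len(received) - len(seen),
        "in_order": received == sorted(received),
    }


if __name__ == "__main__":
    arrived = transfer(50_000)
    print(check_sequence(arrived, 50_000))
```

An in-process queue will not lose messages, so this run is expected to report a clean result; the value of `check_sequence` is in applying the same audit to messages collected from the actual communication layer under volume.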

Execution Governance and Observability

Testing at this scale requires control. Structured volume testing checklists help teams plan, execute, and analyze results consistently. They cover what to validate, how to measure it, and when to escalate. These checklists are especially useful in the concluding phase of a volume test, where the decision to release or remediate hinges on data-backed findings.
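A checklist like this can also be encoded so that the release-or-remediate decision is reproducible. The sketch below is one possible shape, assuming each check compares a measured value against a limit; the check names and thresholds are invented for illustration and would need to come from the team's own requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Check:
    """One checklist item: a measured value compared against a limit."""
    name: str
    measured: float
    limit: float
    higher_is_better: bool = False

    def passed(self) -> bool:
        if self.higher_is_better:
            return self.measured >= self.limit
        return self.measured <= self.limit


def release_decision(checks):
    """Return ("release", []) if all checks pass, else ("remediate", failed names)."""
    failed = [check.name for check in checks if not check.passed()]
    return ("remediate", failed) if failed else ("release", [])


if __name__ == "__main__":
    # Hypothetical thresholds; real limits depend on the system's requirements.
    checklist = [
        Check("p95 response time (ms)", measured=420.0, limit=500.0),
        Check("records lost", measured=0, limit=0),
        Check("throughput (rows/s)", measured=8_000, limit=10_000, higher_is_better=True),
    ]
    print(release_decision(checklist))
```

With the sample values above, the throughput check falls short of its limit, so the decision is to remediate and the failing check is named in the result.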

Integration with Quality Strategy

In high-stakes systems, this type of testing enables long-term resilience. It is an essential practice that influences architecture, backlog prioritization, and operational readiness. Treating it as a continuous activity, not an isolated task, helps detect system-level risks early and align quality efforts with actual system growth.

Conclusion

Volume testing is a vital component of modern software testing. It provides clear insight into how systems behave under real data stress, helping teams reduce the risk of performance degradation, data loss, or system instability. Proper implementation leads to more scalable, reliable applications ready for real-world conditions.

Frequently Asked Questions (FAQs) about Volume Testing

What Is an Example of Volume Testing?

A common example of volume testing is uploading and processing a large CSV file with thousands of user records into a system’s database to verify data integrity and performance stability. The goal is to confirm that the system behaves correctly and that the data is stored without loss or corruption.

What Is the Difference Between Volume and Stress Testing?

Volume testing evaluates system performance when handling large amounts of data, focusing on storage, response time, and data processing. Stress testing, on the other hand, pushes the system beyond its capacity to observe when and how it fails under extreme conditions.

What Is the Primary Purpose of Volume Testing?

The primary purpose is to assess how well a system processes high data volumes without affecting performance, losing data, or compromising accuracy. It helps validate the system’s capacity and stability during data-heavy operations.

What Is Another Name for Volume Testing?

Another name for it is flood testing. This alternative term emphasizes the simulation of data floods to evaluate how the system manages large data inflows and storage loads.

When Should I Use Volume Testing?

You should conduct it before product launches, during infrastructure scaling, or when introducing features that involve significant data processing. It helps uncover performance issues early in the development cycle.

Can Volume Testing Be Automated?

Yes. Automated tools such as Apache JMeter, k6, or LoadRunner can simulate high data volumes efficiently, reducing manual effort and improving repeatability in the testing process.

What Signs Indicate a Need for Volume Testing?

You may need it if your system experiences slow response times, data corruption, or crashes under large data loads. It’s also essential when dealing with batch processing or real-time analytics.

Does Volume Testing Apply to Microservices?

Definitely. Distributed system volume testing is critical in microservices architectures, where each component may handle different volumes of data. Testing them under realistic data conditions prevents performance bottlenecks and data inconsistencies.

How We Can Help You

With over 16 years of experience and a global presence, Abstracta is a leading technology solutions company with offices in the United States, Chile, Colombia, and Uruguay. We specialize in software development, AI-driven solutions, and end-to-end software testing services.

Our expertise spans industries. We believe that building strong ties propels us further and helps us enhance our clients’ software. That’s why we’ve built robust partnerships with industry leaders like Microsoft, Datadog, Tricentis, Perforce BlazeMeter, and Sauce Labs to provide the latest in cutting-edge technology.

At Abstracta, we partner with teams worldwide to deliver reliable, scalable testing solutions. Our experts design and run volume testing strategies tailored to your system’s needs, helping you identify performance bottlenecks, protect data integrity, and validate the system’s response time under pressure.

Let’s talk about how we can support and enhance your testing process.

Contact us to schedule a consultation.



Sofía Palamarchuk, Co-CEO at Abstracta
