What are the different factors of software quality and how do we test them?

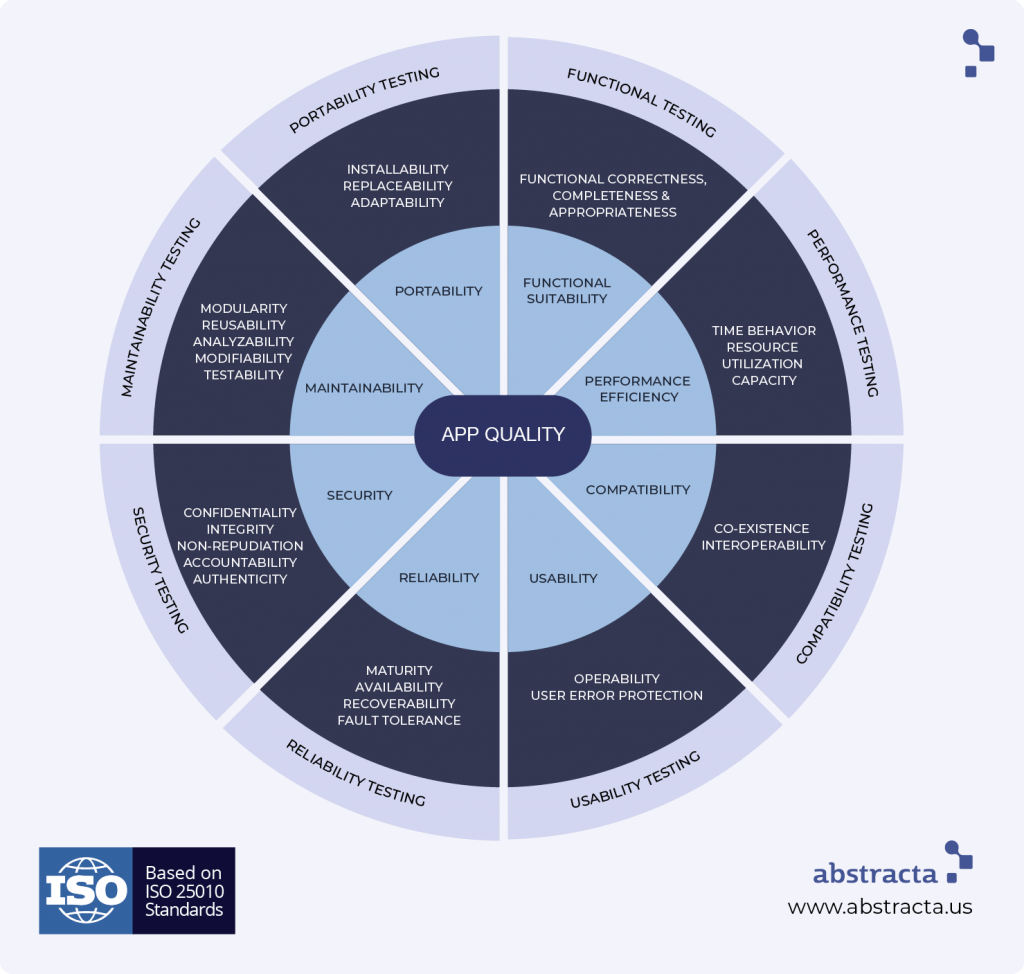

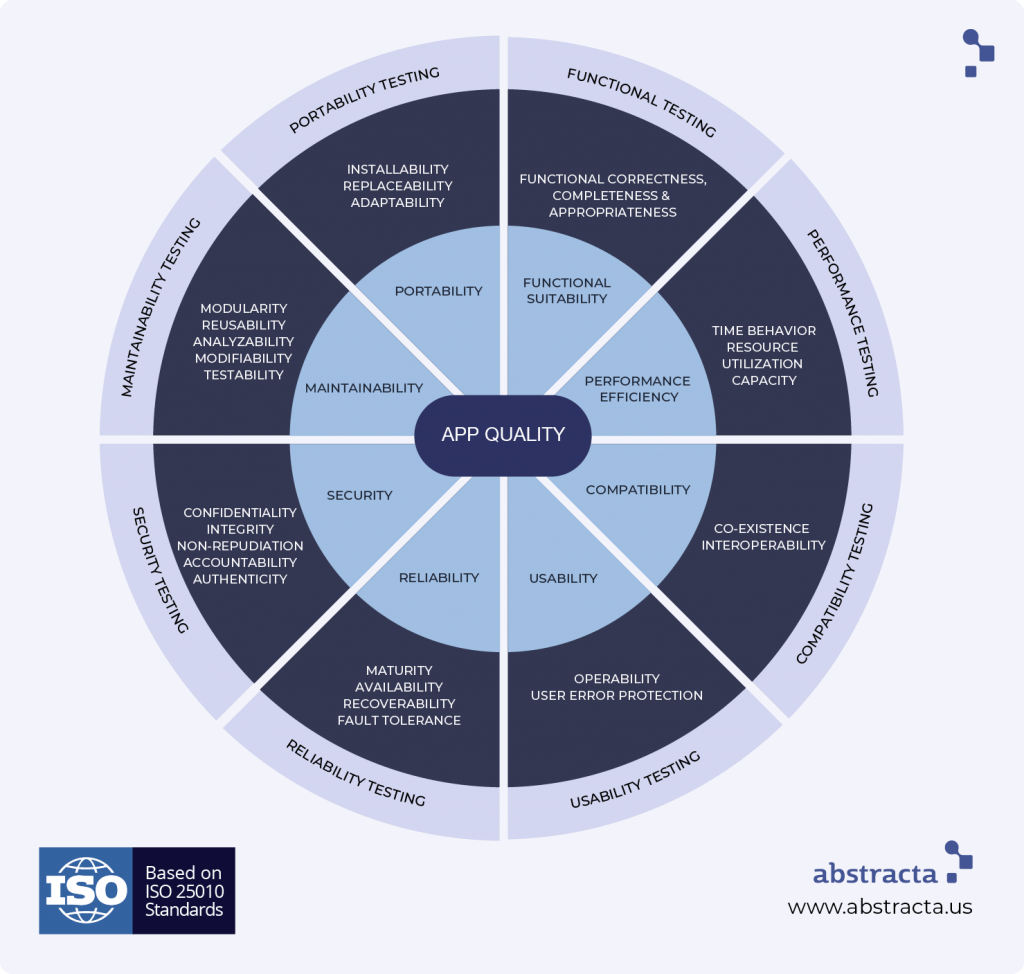

Software quality… sounds vague, doesn’t it? We made a fun software testing wheel to depict all of the parts that make up the “whole” when we talk about software quality.

According to ISO standards, the quality of a software product is the weighted sum of different quality attributes, which are grouped into several quality factors. For example, performance efficiency is a factor that is composed of time behavior, capacity, resource utilization, etc.
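To make the "weighted sum" idea a bit more tangible, here is a minimal sketch in Python. It assumes every attribute is scored on a 0 to 10 scale and that weights are normalized (so strictly speaking it computes a weighted average). All scores, weights, and the `weighted_average` helper are hypothetical and only there for illustration.

```python
def weighted_average(scores, weights):
    """Weighted sum of scores, divided by the total weight (normalization)."""
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight

# Hypothetical attribute scores (0-10), grouped by quality factor.
attribute_scores = {
    "performance_efficiency": {
        "time_behavior": 8.0, "resource_utilization": 6.0, "capacity": 7.0,
    },
    "usability": {"operability": 9.0, "user_error_protection": 5.0},
}

# Hypothetical weights: how much each attribute matters within its factor.
attribute_weights = {
    "performance_efficiency": {
        "time_behavior": 2.0, "resource_utilization": 1.0, "capacity": 1.0,
    },
    "usability": {"operability": 1.0, "user_error_protection": 1.0},
}

# Hypothetical weights: how much each factor matters for the whole product.
factor_weights = {"performance_efficiency": 1.0, "usability": 3.0}

# First level: attributes roll up into a score per factor.
factor_scores = {
    factor: weighted_average(attribute_scores[factor], attribute_weights[factor])
    for factor in attribute_scores
}

# Second level: factors roll up into one overall quality score.
overall_quality = weighted_average(factor_scores, factor_weights)

print(factor_scores)
print(overall_quality)
```

The two levels mirror the structure described above: attributes are combined into factors, and factors into the overall quality of the product.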

Internal vs. External Quality Factors

Factors can also be classified as internal or external. Internal factors include, for example, maintainability and everything that has to do with code quality. We call them internal because they have to do with the inner workings of the product, which the customer cannot perceive at first glance.

External factors are the ones the user can benefit or suffer from, such as performance (users suffer when the application runs slowly), functionality (if there are too many bugs, the user suffers), and usability (users also suffer when it’s not user-friendly enough).

That said, the line between external and internal factors is not always so black and white. Many factors, like security, are partly internal and partly external.

Something else that’s really interesting is that internal factors like maintainability can become external at any moment. When? Well, when the customer requests a small change and the development team takes months to finally implement it. That is when an internal problem becomes external. This could be due to poor code quality, poor system design, high coupling and low cohesion, bad architecture, code that’s hard to read or understand, etc. These things make it very difficult to make changes, negatively impacting the customer’s experience.

Every quality attribute has one or more software tests associated with it that verify whether or not the quality meets customer and user expectations. We decided to put all of this into a “software testing wheel” thanks to one of our performance experts, Sebastian Lorenzo, who came up with the idea!

In the wheel, testing is something that encircles and “protects” the quality of software.

Now, let’s break down the wheel, examining each quality factor (inner layer), its attributes (middle layer), and the tests associated with each factor (outer layer).

Functional Suitability

Functional suitability is achieved when the system provides functions that meet stated and implied needs when used under specified conditions. Basically, it asks, does the system do what we want it to do? The three attributes listed are functional completeness, correctness, and appropriateness.

In other words, it is functionally suitable if it meets all of the requirements, covers them correctly, and only does things that are necessary and appropriate to complete tasks.

Several tests fall under the umbrella of functional testing, such as regression testing, system testing, unit testing, procedure testing, etc.
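As a small illustration of the unit testing end of that umbrella, here is a sketch using Python's built-in `unittest` module. The `apply_discount` function is a hypothetical piece of functionality invented for this example; the tests check that it behaves correctly for a normal case, an edge case, and an invalid input.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test: price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_applies_discount_correctly(self):
        # Functional correctness: the result matches the stated requirement.
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        # Edge case: a 0% discount leaves the price unchanged.
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_rejects_invalid_percent(self):
        # The function should refuse discounts outside 0-100.
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)
```

Saved in a file, these tests can be run with `python -m unittest`. Running the same suite again after every change is also the simplest form of regression testing.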

Performance Efficiency

Performance efficiency deals with how the system behaves in certain situations with various load sizes. A system with high performance is fast, scalable, and stable even when there is a large number of concurrent users.

The three attributes listed are time behavior, resource utilization, and capacity. Time behavior is the degree to which the response and processing times and throughput rates meet requirements. Resource utilization refers to the amounts and types of resources the system uses while functioning. Capacity refers to the degree to which the maximum limits of a product or system parameter meet requirements.

To analyze these attributes, there are several kinds of performance tests that we can carry out, such as load, endurance, peak, and volume testing.
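To give a feel for what "time behavior" looks like in numbers, here is a minimal sketch of a tiny load test using only the Python standard library. It assumes a hypothetical `handle_request` function that stands in for the real operation under test (here it just sleeps for about 5 ms), and it reports mean response time, 95th percentile response time, and throughput. A real load test would use a dedicated tool and hit the actual system.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request():
    """Hypothetical stand-in for the operation under test (sleeps ~5 ms)."""
    time.sleep(0.005)


def timed_call(_):
    """Run one request and return how long it took, in seconds."""
    start = time.perf_counter()
    handle_request()
    return time.perf_counter() - start


def run_load(total_requests=40, concurrent_users=8):
    """Simulate concurrent users with a thread pool and collect metrics."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    elapsed = time.perf_counter() - started
    return {
        "mean_s": statistics.mean(latencies),
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
        "p95_s": statistics.quantiles(latencies, n=20)[-1],
        "throughput_rps": total_requests / elapsed,
    }


report = run_load()
print(report)
```

Raising `concurrent_users` and `total_requests` step by step, and watching where the response times start to degrade, is the basic idea behind exploring capacity.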

Compatibility

Compatibility refers to the degree to which the software works with the intended hardware, browsers, operating systems, etc.

The attributes listed include co-existence and interoperability. Co-existence means the degree to which it can perform efficiently while sharing a common environment and resources with other products, without a detrimental impact on any other product. Interoperability means the degree to which two or more systems, products or components can exchange information and use said information.
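Interoperability can be checked with a simple round trip: one side exports data in an agreed format, the other side imports it, and we verify that nothing was lost or misread. The sketch below assumes JSON as the exchange format; the `Order` type and both functions are hypothetical and exist only for this example.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Order:
    order_id: int
    customer: str
    total_cents: int


def export_order(order):
    """Producer side: serialize an order to the agreed JSON format."""
    return json.dumps(asdict(order))


def import_order(payload):
    """Consumer side: parse the payload and check required fields and types."""
    data = json.loads(payload)
    expected = {"order_id": int, "customer": str, "total_cents": int}
    for field, kind in expected.items():
        if not isinstance(data.get(field), kind):
            raise ValueError(f"field {field!r} is missing or not {kind.__name__}")
    return Order(**{field: data[field] for field in expected})


# Round trip: what the consumer receives should equal what the producer sent.
original = Order(order_id=42, customer="Ada", total_cents=1999)
received = import_order(export_order(original))
print(received == original)
```

The second half of the definition, being able to use the information, is why the consumer validates fields and types instead of just parsing the text.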

The tests that can be performed to verify these quality attributes are compliance, compatibility, interoperability, and conversion tests.

Usability

Usability refers to the ease with which the user interacts with the interface.

Attributes of this quality factor include operability (the degree to which the system’s attributes make it easy to operate and control) and user-error protection (how well the interface protects users against making mistakes). A system is highly usable if users tend to not make many mistakes, learn how to use it quickly, perform tasks in a timely manner, and are satisfied with it overall.

Usability tests include UX testing, accessibility testing, and localization and internationalization testing, among others.

Reliability

Reliability refers to the probability of failure-free operation for a specified period of time in a specified environment.

Its quality attributes include maturity (the degree to which it meets the reliability needs), availability (how accessible it is), recoverability (how well it can return to its desired previous state after an interruption or failure), and fault tolerance (how well it can operate and cope with the presence of hardware or software faults).
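One common way to put a number on availability is the steady-state formula MTBF / (MTBF + MTTR), where MTBF is the mean time between failures and MTTR is the mean time to repair. These two terms are not part of the wheel; they are assumptions we bring in for this sketch, and the figures below are made up.

```python
def availability(mtbf_hours, mttr_hours):
    """Steady-state availability: fraction of time the system is up."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


def yearly_downtime_hours(avail, hours_per_year=8760):
    """Expected hours of downtime per year for a given availability."""
    return (1 - avail) * hours_per_year


# Hypothetical system: fails on average every 999 hours, takes 1 hour to repair.
avail = availability(mtbf_hours=999.0, mttr_hours=1.0)
downtime = yearly_downtime_hours(avail)

print(f"availability: {avail:.3%}")
print(f"expected downtime: {downtime:.2f} hours per year")
```

The formula also shows how the attributes relate to each other: better recoverability lowers MTTR, and better fault tolerance raises MTBF, and both push availability up.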

Some tests within reliability testing include backup/recovery testing and disaster recovery testing.

Security

Security in software is the degree to which it protects information and data so that users or other entities have the degree of data access appropriate to their authorization. In other words, only the people who are authorized to access data can access it, which helps thwart potential attacks.

Attributes of security include confidentiality, integrity, non-repudiation, accountability, and authenticity. Confidentiality refers to the degree to which the data is accessible only to those with the authority to access it. Integrity is the degree to which it prevents unauthorized access to, or modification of, programs and data. Non-repudiation is how well it can prove when and where an action or event took place so that it can’t be repudiated (denied) later on. Accountability is the degree to which the actions of an entity can be traced uniquely to the entity. Lastly, authenticity is the degree to which a subject or resource can be proved to be what it claims to be.
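To make integrity and authenticity a bit more concrete, here is a small sketch using Python's standard `hmac` and `hashlib` modules. A sender attaches a keyed hash (an HMAC tag) to a message, and the receiver recomputes it to detect tampering. The key and the messages are hypothetical, and a real system would load the key from a secrets manager rather than hard-code it.

```python
import hashlib
import hmac

# Hypothetical shared secret, hard-coded only for this demo.
SECRET_KEY = b"demo-key-not-for-production"


def sign(message: bytes) -> str:
    """Compute an HMAC-SHA256 tag for the message."""
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def verify(message: bytes, tag: str) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign(message), tag)


message = b"transfer 100 to account 7"
tag = sign(message)

print(verify(message, tag))                       # untouched message
print(verify(b"transfer 900 to account 7", tag))  # tampered message
```

Note that a shared-key HMAC covers integrity and authenticity between parties who hold the key, but not non-repudiation: since both sides know the key, either one could have produced the tag. Non-repudiation typically calls for asymmetric digital signatures.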

Security is a hot topic today as more and more companies are taking measures to prevent breaches. Some of the tests that analyze these security attributes are penetration tests, vulnerability tests, ethical hacking, and static analysis.

Maintainability

This quality factor is pretty straightforward. It refers to how easy it is to maintain the system including analyzing, changing, and testing it.

The attributes associated with maintainability are modularity, reusability, analyzability, modifiability, and testability. Modularity refers to the degree to which it is composed of discrete components such that a change to one component has minimal impact on other components. Reusability refers to the degree to which an asset can be used in more than one system, or in building other assets. Analyzability refers to how well we can assess the impact of an intended change, diagnose deficiencies, or identify parts we should modify. Modifiability is the degree to which it can be modified without introducing defects or degrading existing quality. Lastly, testability is how well test criteria can be established and tests can be performed to determine whether those criteria have been met (AKA, how easy is it for us testers to do our jobs with it!).

Tests that are associated with maintainability include pair review, static analysis (for which we could use a tool such as SonarQube, for example), and alike checking.
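Testability often comes down to small design choices. In the sketch below, a hypothetical `greeting` function depends on the current time. Because the clock is passed in as a parameter instead of being hard-wired, a test can swap in a fixed clock and check both branches without waiting for the afternoon. The function and the `fixed_clock` helper are invented for this example.

```python
from datetime import datetime


def greeting(now=datetime.now):
    """Return a greeting for the current hour; `now` is an injectable clock."""
    hour = now().hour
    return "Good morning" if hour < 12 else "Good afternoon"


def fixed_clock(hour):
    """Test helper: a clock that always reports the given hour."""
    return lambda: datetime(2024, 1, 1, hour, 0)


# Production code calls greeting() and gets the real time.
# Tests inject a fixed clock, so the result is deterministic.
print(greeting(fixed_clock(9)))
print(greeting(fixed_clock(15)))
```

The same change helps modularity too: the greeting logic no longer needs to know where the time comes from.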

Portability

Portability refers to the ease with which an application can be moved from one environment to another, such as moving from Android KitKat to Lollipop.

The three attributes listed are installability, replaceability, and adaptability. Installability is how well the application can be installed or uninstalled in a specific environment. Replaceability is the capability of the application to be used in place of another for the same purpose and in the same environment. Adaptability refers to its ability to adapt to different environments.

Each of these attributes has a test of the same name: installability, replaceability, and adaptability testing.

In Conclusion

And there you have it, that is the software testing wheel! One very important thing to note: we said at the beginning that quality is the weighted sum of quality factors, but one must not forget that quality is subjective.

The subjectivity is in defining the weight of each of the factors. Each user, user group, target market, etc. will weigh each factor differently. For example, for one user, it may be fundamental that the application is easy to understand (simple, user-friendly), and as long as it satisfies that requirement, they might not mind if it runs a bit slow. Then there may be other users who won’t even consider using an application if it doesn’t run fast enough or uses too many resources.

There will always be variation among the types of users, and we must keep it in mind when developing and testing software. For example, we will care about different quality aspects when designing for kids, teens, the general public, the elderly, etc., and depending on the context in which the software will be used: on the street, in the office, at home, etc.

As testers, we have the challenge of looking at the testing wheel and determining which tests to focus on the most, according to the weight that users will assign to each factor.
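Here is a quick sketch of how that subjectivity plays out. The same product, with the same hypothetical ratings, gets a different perceived quality depending on who is weighing the factors, and the factor with the highest weight hints at where to focus testing first. The profile names, ratings, and weights are all made up for illustration.

```python
# Hypothetical 0-10 ratings for one product.
ratings = {"usability": 9.0, "performance_efficiency": 5.0, "security": 7.0}

# Hypothetical user profiles; each set of weights sums to 1.
profiles = {
    "casual_user": {"usability": 0.6, "performance_efficiency": 0.1, "security": 0.3},
    "power_user": {"usability": 0.1, "performance_efficiency": 0.6, "security": 0.3},
}


def perceived_quality(ratings, weights):
    """Weighted sum of factor ratings for one user profile."""
    return sum(ratings[factor] * weight for factor, weight in weights.items())


def top_priority(weights):
    """The factor this profile cares about most."""
    return max(weights, key=weights.get)


results = {
    name: (perceived_quality(ratings, weights), top_priority(weights))
    for name, weights in profiles.items()
}

for name, (score, priority) in results.items():
    print(f"{name}: quality {score:.1f}, focus testing on {priority}")
```

With these numbers, the casual user is much happier with the product than the power user, even though nothing about the product itself has changed.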

We hope you enjoyed the software testing wheel! Please feel free to share and add your comments.

Do you think the wheel is a good representation of software quality testing?

Federico Toledo, Chief Quality Officer at Abstracta
