AI, Generative AI, LLMs, vector databases, RAG, copilots, AI agents, MCP, security, and costs—explained simply! Start your journey with this AI for Dummies guide by Abstracta.

With technology developing at a breathtaking rate, Generative AI (Artificial Intelligence) stands at the forefront of innovation, driving lightning-fast progress in natural language, image, video, and audio processing.

Whether you’re a seasoned developer or a business person, understanding the fundamentals of Generative AI and machine learning, as well as their applications, can significantly enhance your projects and solutions.

Let’s embark on a journey to demystify Generative AI and explore its potential together.

Explore our tailored AI Software Development & Agents services and reach out to us.

First Things First: AI for Dummies

Before diving deep into Generative AI, it’s important to briefly understand Artificial Intelligence (AI) as a whole. Artificial intelligence works by simulating human intelligence in machines, enabling systems to learn, recognize patterns, and make decisions or predictions.

AI is already present in our daily lives—powering features like voice assistants, search engines, and recommendation systems. At its core, AI continues to evolve, with technologies such as machine learning, natural language processing, and computer vision, which allow machines to process and interpret large amounts of data.

Understanding these basics will provide the foundation to fully grasp the power and possibilities of Generative AI. Now, let’s dive into Generative AI in everyday life to see how it builds on these foundations and opens up exciting new opportunities.

Key Concepts About Generative Artificial Intelligence

This section is meant as an ideal starting point for anyone curious about how AI can impact their life or business.

What Is Generative Artificial Intelligence?

Generative AI is a groundbreaking subset of Artificial Intelligence that uses deep learning to create new content. Unlike traditional AI, which analyzes data to support decisions, Generative AI today produces entirely new content, such as text, images, music, videos, and even complex designs.

What Makes Generative AI Unique?

From composing realistic art to generating human-like conversations, Generative AI is unlocking creative possibilities across industries. Whether you’re interacting with chatbots, personalizing marketing campaigns, or designing products, its ability to generate content from learned patterns is reshaping how we solve problems and innovate.

It offers powerful new tools not only to marketing professionals but also to people in all kinds of roles across industries. Its potential is evident in several key areas, including:

- Versatility: It spans multiple media formats, from written content to audiovisual production.

- Innovation: Businesses are using it to develop new ideas, prototypes, and user experiences.

- Efficiency: Automating creative and repetitive tasks saves time and resources.

This transformative technology opens a world of opportunities for developers, business leaders, and creatives alike, providing insights that demystify how artificial intelligence is reshaping workflows and decision-making processes.

Latest AI Trends to Watch in 2025

Understanding the latest AI trends is key to making sense of how AI is transforming our tools, workflows, and business logic. In the next sections, we’ll break down these trends (multimodal LLMs, AI agents, real-time copilots, open standards like MCP, tokens, RAG, and more) and explain what they mean for you.

1. LLMs: GPT-5, OpenAI, Gemini, and More

Large Language Models (LLMs) are advanced AI systems designed to understand and generate human-like content, including text, images, video, and audio.

GPT-5 and Gemini are specific LLMs, developed by OpenAI (GPT-5) and Google (Gemini). OpenAI is a leading AI research organization responsible for creating GPT-5 and other groundbreaking AI technologies, and it has a strong partnership with Microsoft. GPT-5 represents a leap forward in both capabilities and efficiency compared to previous iterations. These models are built to handle more complex tasks, provide more nuanced outputs, and have an expanded understanding of context across various applications.

Generative AI (GenAI) refers to a specific type of AI that can create new content, such as text, images, and music. All these concepts fall under the broader umbrella of AI, which encompasses various technologies that simulate human intelligence processes in machines. The goal? Creating intelligence that can adapt and evolve in real-world applications.

2. Assistants, GPTs, Copilots, and AI Agents – Generative AI for Dummies

Assistants, GPTs, Copilots, and Agents are all AI tools leveraging advanced AI to enhance productivity and efficiency in business.

Assistants

Assistants, such as virtual or digital assistants, are AI-powered programs that help manage schedules, handle communications, and perform various tasks, similar to having an intelligent secretary.

GPTs

GPTs are custom versions of ChatGPT that combine instructions, extra knowledge, and any combination of skills like code interpretation, web browsing, and external APIs. You can create your own GPTs and share them via private links, or submit them for review to appear in the GPT Store.

These GPTs are specialized AI assistants for different tasks. OpenAI uses the name Assistants for a similar concept, with the difference that Assistants can only be called through the API instead of being used in the ChatGPT front end.

To create a GPT, a Plus account is required. However, many public GPTs are accessible from free accounts. Also, when using a GPT, it’s possible to switch models dynamically, depending on the task’s needs.

One limitation of GPTs is that they don’t support saving prompt sequences or workflows across sessions, unlike other agent platforms with persistent configurations.

Also, GPTs process data through OpenAI’s infrastructure, so it’s best to avoid uploading private or sensitive information unless your use case meets the necessary security standards.

Copilots

Copilots are AI-driven systems designed to support professionals by automating tasks, providing suggestions, and streamlining workflows, acting like a second pair of hands. Copilots share capabilities with GPTs and Assistants, with the difference that the copilot shares “the context” with the pilot (the pilot is you!).

What exactly is ‘the context’? It could be anything from your screen, your audio stream, your camera, or any other information that you, as a user or pilot, are receiving. This information is submitted simultaneously to the copilot.

This allows the copilot to help in a very smart and efficient way, working together with the pilot. During heads-down programming sessions, this support is crucial—it reduces interruptions and boosts flow. The copilot can access this context and, with the pilot’s agreement, can also execute actions within this context (which is recommended).

Microsoft is leading the development of copilots, integrating them into each of its products.

AI Agents

AI Agents are autonomous systems that can plan, decide, and act on their own to achieve specific goals. Unlike copilots or assistants, they operate independently—chaining tasks, using tools, and adapting to new conditions with little or no human input.

In practice, this means an agent can take a high-level instruction—like “analyze this report, summarize key insights, and send it to the team”—and carry out the entire workflow from start to finish. It can access APIs, use external tools, and rely on memory to manage tasks over time and across sessions.

We’re seeing agents become increasingly relevant in areas like testing, operations, research, and customer support—helping teams reduce manual work, gain speed, and focus on higher-value problems.

While copilots work alongside you using your context, agents act on your behalf. However, many systems still benefit from some level of human intervention to validate outputs or guide decision-making in complex environments. In these cases, AI behaves more like an expert computer system, bringing specialized capabilities to support and enhance human expertise.
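To make the idea of an agent carrying out a workflow more concrete, here is a minimal sketch in Python. It is only an illustration under strong assumptions: the three tools (`analyze_report`, `summarize`, `send_to_team`) are hypothetical placeholders, the plan is fixed by hand (in a real agent, an LLM would usually produce it), and the `approve` callback stands in for the human validation step mentioned above.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools: in a real agent these would call APIs or an LLM.
def analyze_report(text: str) -> str:
    """Drop empty lines as a stand-in for real analysis."""
    return "\n".join(line.strip() for line in text.splitlines() if line.strip())

def summarize(text: str) -> str:
    """Keep only the first line as a stand-in for an LLM summary."""
    return text.splitlines()[0] if text else ""

def send_to_team(text: str) -> str:
    """Pretend to send a message and report what was sent."""
    return f"sent: {text}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "analyze_report": analyze_report,
    "summarize": summarize,
    "send_to_team": send_to_team,
}

def run_agent(
    plan: List[str],
    initial_input: str,
    approve: Callable[[str, str], bool] = lambda step, data: True,
) -> Tuple[str, List[str]]:
    """Run each planned step in order, feeding each output into the next tool."""
    memory: List[str] = []  # simple log the agent can consult later
    data = initial_input
    for step in plan:
        if not approve(step, data):  # optional human-in-the-loop check
            memory.append(f"{step}: skipped (not approved)")
            continue
        data = TOOLS[step](data)
        memory.append(f"{step}: {data}")
    return data, memory

plan = ["analyze_report", "summarize", "send_to_team"]
result, memory = run_agent(plan, "Revenue grew 12%.\n\nChurn fell 3%.")
```

Passing a stricter `approve` function (for example, one that asks a person before `send_to_team` runs) turns the same loop into a supervised workflow.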

3. Model Context Protocol (MCP)

The Model Context Protocol (MCP) is an open standard introduced by Anthropic in 2024 that allows AI agents and copilots to interact with external tools, data sources, and software systems in a structured way—without requiring custom integrations.

Often referred to as the “USB‑C of AI,” MCP enables models to understand and control web applications, APIs, databases, CRMs, and internal business tools. It standardizes how agents observe UI elements, retrieve context, and perform actions securely.

MCP is already supported by platforms like Microsoft Copilot Studio, GitHub Copilot, Windows, and various LLM providers. It plays a critical role in browser copilots and agentic automation by enabling AI to work across real-world enterprise software environments.
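As a rough illustration of what “structured” means here, the sketch below builds the kind of message an MCP client sends to ask a server to run a tool. To our understanding, MCP messages follow JSON-RPC 2.0 and tool invocations use the `tools/call` method. The tool name `search_customers` and its arguments are hypothetical, and a real client would also handle initialization, transport, and responses.

```python
import json

def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)

# Hypothetical tool exposed by a CRM's MCP server.
request = build_tool_call(1, "search_customers", {"query": "Acme"})
```

Because every tool is called with the same message shape, an agent does not need a custom integration for each system it talks to.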

4. Vector Databases and Embeddings

Vector databases and embeddings are crucial tools for handling and utilizing complex data in business. Embeddings are a way to represent data, such as text or images, as numerical vectors in a high-dimensional space, capturing the essence and relationships of the data points.

For instance, in a customer review, the embedding would convert the text into a vector that represents the review sentiment and context. Vector databases store these embeddings efficiently, allowing for fast retrieval and similarity/semantic searches.

This allows businesses to rapidly identify patterns, cluster related data, and surface insights that matter most, enhancing strategic decisions and personalized user experiences. These technologies are essential for organizing big data in a way that makes it actionable and valuable.
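A tiny example can help show how similarity search works. The sketch below uses made-up three-dimensional vectors; real embeddings come from an embedding model and typically have hundreds or thousands of dimensions, and a real vector database uses specialized indexes instead of a plain dictionary. The `store` contents and the `search` helper are illustrative assumptions.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy "vector database": review text mapped to a made-up embedding.
store = {
    "Great product, fast delivery": [0.9, 0.1, 0.8],
    "Terrible support, very slow": [0.1, 0.9, 0.2],
    "Loved it, arrived quickly": [0.8, 0.2, 0.9],
}

def search(query_vector, store, top_k=2):
    """Return the top_k stored texts most similar to the query vector."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in store.items()]
    scored.sort(reverse=True)
    return [text for _, text in scored[:top_k]]

# Made-up embedding for a query such as "happy customer, quick shipping".
results = search([0.95, 0.05, 0.7], store)
```

The two positive reviews come back first because their vectors point in nearly the same direction as the query, even though they share few exact words.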

5. Tokens: What Are They?

In the context of AI and LLMs, tokens are the fundamental units of information (text/image) that the model processes.

Think of tokens as pieces of words or characters. For example, in the sentence “Artificial intelligence is transforming business,” each word might be considered a token. LLMs like GPT-5 break down text into these tokens to understand and generate language.

The number of tokens impacts the model’s processing time and cost; more tokens mean more complexity and computation. For businesses, understanding tokens helps manage the efficiency and cost of using AI models for tasks like content generation, data analysis, and customer interactions.

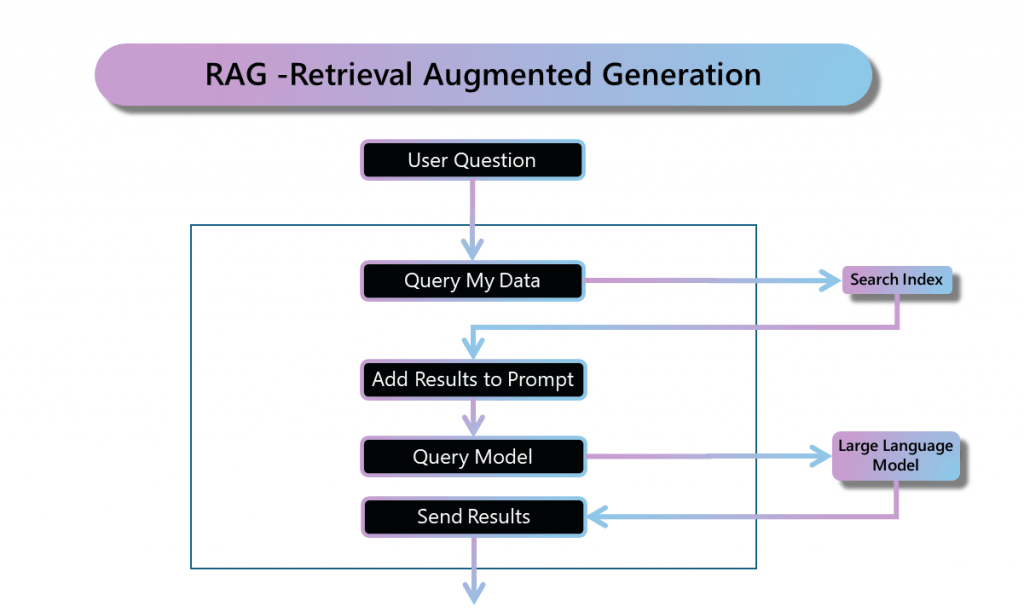

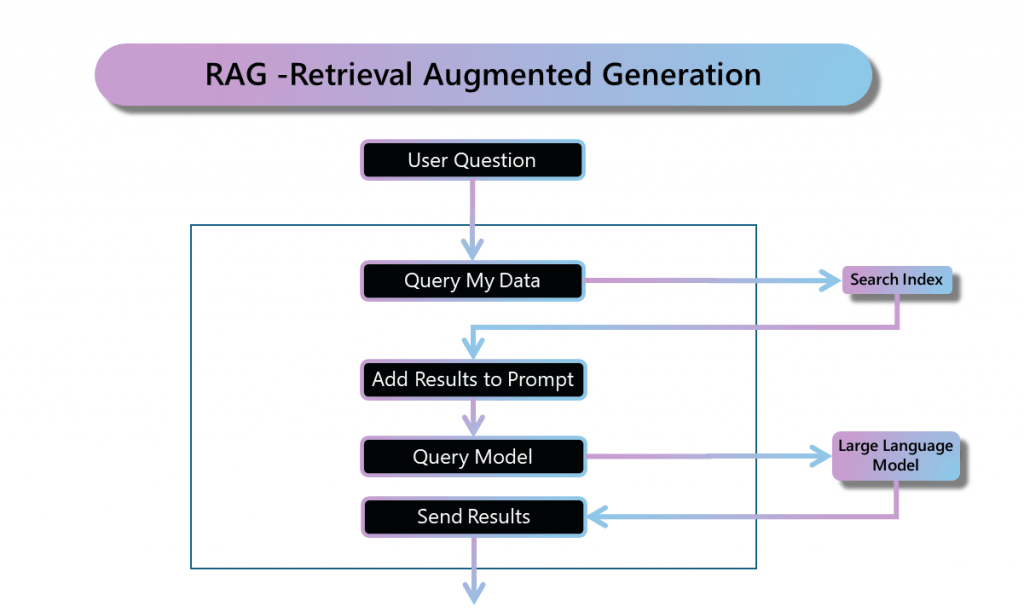
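To see how token counts translate into cost, here is a rough sketch. It makes two loud assumptions: the tokenizer is a naive approximation that splits on words and punctuation (real LLM tokenizers split text into subword pieces, so counts will differ), and the prices are hypothetical placeholders rather than any provider’s actual rates.

```python
import re

def rough_token_count(text: str) -> int:
    """Approximate tokens as words plus punctuation marks (not a real tokenizer)."""
    return len(re.findall(r"\w+|[^\w\s]", text))

def estimate_cost(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """Estimate the cost of one request from token counts and per-1,000-token prices."""
    return input_tokens / 1000 * price_in_per_1k + output_tokens / 1000 * price_out_per_1k

sentence = "Artificial intelligence is transforming business"
token_count = rough_token_count(sentence)

# Hypothetical prices: 0.002 per 1,000 input tokens, 0.006 per 1,000 output tokens.
cost = estimate_cost(1000, 500, 0.002, 0.006)
```

Output tokens are often priced differently from input tokens, which is why the function takes two prices.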

6. Retrieval Augmented Generation (RAG)

Retrieval Augmented Generation (RAG) is central to many Generative AI applications. This approach combines information retrieval techniques with generative large language models (LLMs), including models that understand and generate more than a dozen languages, to improve the quality and relevance of generated text.

By accessing external knowledge sources (usually but not only stored in vector databases), RAG enables models to produce more accurate, informative, and contextually relevant content.

RAG operates through a series of steps, starting with the retrieval of relevant information from vast databases. This information is then pre-processed and added to the prompt sent to the LLM, giving the model the context it needs to answer.

Image: RAG Retrieval Augmented Generation.

The result? A generative model that can access the most up-to-date information, grounded in factual knowledge, and capable of generating responses with unparalleled contextual relevance and factual consistency.
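The retrieve-then-generate flow can be sketched in a few lines. This is a simplified illustration, not a production pipeline: retrieval here ranks documents by shared words instead of embedding similarity, the documents are invented, and the final call to an LLM is left out, so the sketch stops at the prompt that would be sent to the model.

```python
import re

def tokenize(text: str) -> set:
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"\w+", text.lower()))

def retrieve(question: str, documents: list, top_k: int = 1) -> list:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(documents, key=lambda doc: len(question_words & tokenize(doc)), reverse=True)
    return ranked[:top_k]

def build_prompt(question: str, context_docs: list) -> str:
    """Place the retrieved text in the prompt so the model can ground its answer."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

documents = [
    "The refund policy allows returns within 30 days.",
    "Support is available Monday to Friday.",
    "Shipping takes 3 to 5 business days.",
]
question = "What is the refund policy for returns?"
prompt = build_prompt(question, retrieve(question, documents))
```

Because the knowledge lives in `documents` and not in the model, updating an answer is a matter of updating the stored text.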

7. Specific Tools for Specific Tasks

It’s important to point out that not all queries or requirements can be effectively solved using a one-size-fits-all LLM approach.

Often, tasks that need data from other systems or involve complicated math calculations are better off with specialized AI tools, as described in this article by LangChain. This approach helps make Generative AI applications efficient and customized to meet the unique requirements of each project. The LLM-based system can answer the prompt using specific tools or RAG information to complete the response. These tools could execute your existing code or connect to existing APIs in your backend services, like CRM or ERP.
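As an illustration of handing a task to a specialized tool, the sketch below routes a math request to a small calculator instead of asking a language model to do arithmetic. The `request` dictionary is a hypothetical stand-in for the tool choice an LLM would normally produce, and the calculator only supports basic operators.

```python
import ast
import operator

# Operators the calculator tool is allowed to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def calculator(expression: str):
    """Safely evaluate a basic arithmetic expression such as '2 * (3 + 4)'."""
    def evaluate(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError("unsupported expression")
    return evaluate(ast.parse(expression, mode="eval").body)

def answer(request: dict) -> str:
    """Dispatch to a tool when one is requested; otherwise defer to the LLM."""
    if request.get("tool") == "calculator":
        return str(calculator(request["input"]))
    return "no tool requested: let the LLM answer directly"

total = answer({"tool": "calculator", "input": "1250 * 12 + 300"})
```

The same dispatch pattern extends to tools that call an existing backend API, such as a CRM or ERP lookup.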

Don’t miss this article! Testing Applications Powered by Generative Artificial Intelligence.

Train, Fine-Tune, or Just Use a Pre-Trained Model?

Generative AI offers flexibility in how to utilize models. Companies often face the decision of whether to train their own models, leverage existing pre-trained models or just fine-tune a pre-trained model.

This choice depends on several factors, including the use case-specific requirements, the data availability, and the resources at hand.

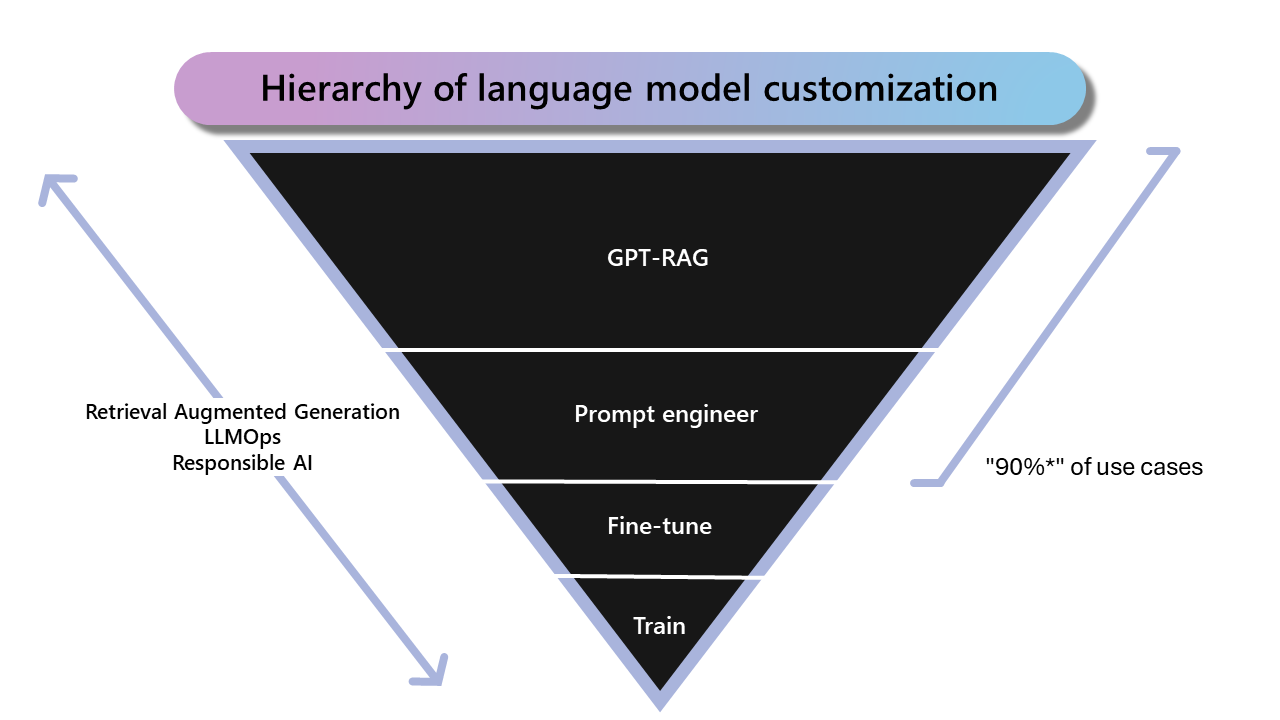

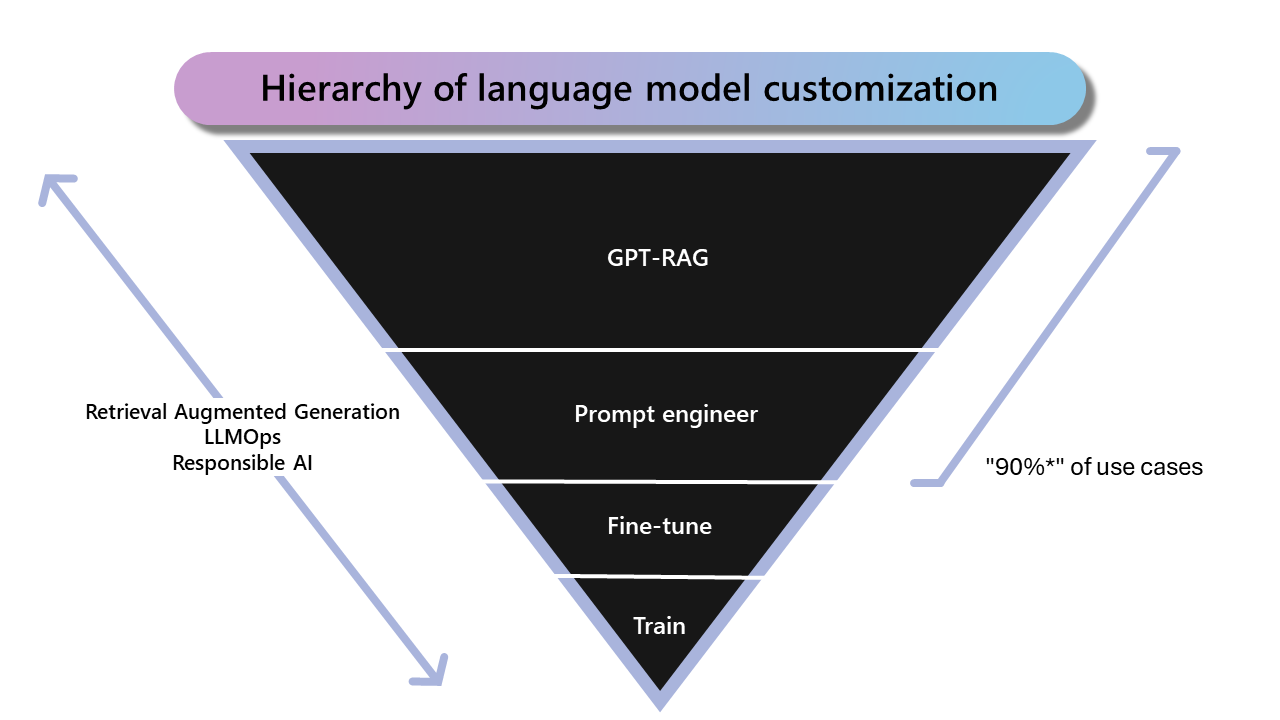

Graph: Hierarchy of language model customization

Based on our experience and information from Microsoft, 90% of the use cases can be resolved with RAG and prompt engineering. This is good news, because fine-tuning requires a lot of data, and the training process not only demands quality data but also consumes significant hardware resources, such as GPU capacity.

When considering whether to train specific models from scratch or to fine-tune an existing model, different scenarios might lead to different recommendations. Here’s how your scenarios align with these approaches:

Low Latency Requirements

- Fine-tuning a Model: We often recommend fine-tuning because it can adjust only part of the model, such as the final layers. This can be quicker and less computationally intensive than training a model from scratch.

Recommended for “Small Models”

- Training Specific Models: If the task is narrow and well-defined, starting from scratch might be better. You can design the architecture to be compact.

- Fine-tuning a Model: Alternatively, fine-tuning a small pre-trained model for your needs is also an option. The choice depends on the project’s constraints.

Hardware Efficiency Requirements

- Fine-tuning a Model: Starting with a pre-trained model usually requires less computation. Thus, it’s generally more hardware-efficient.

- Training Specific Models: If the task is highly specialized, designing an efficient model from scratch might be considered. This approach requires careful planning.

In summary, the choice between training specific models from scratch or fine-tuning an existing model depends on the specific requirements of the project, including latency, model size, and hardware constraints.

Note: when we train or fine-tune a model, the data used in this process is incorporated into the model, and we cannot control who can access it. Anyone using the model could potentially surface this data.

Open Source vs. Proprietary Models

The Generative AI landscape is populated with both open-source and proprietary models. Open-source models (Llama3, Vicuña, Alpaca) can run in our own infrastructure. Proprietary models (GPT-4o, GPT-5, Claude, Gemini) are only available through cloud providers such as Azure or OpenAI.

Running the models in our own infrastructure could reduce the cost (in some scenarios with heavy usage of the models) and give us more control over the sensitive data.
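The “heavy usage” condition can be checked with simple arithmetic. The sketch below compares a pay-per-token API bill with a fixed monthly hosting cost. All numbers are hypothetical, and a real comparison would also include staffing, maintenance, and the quality difference between models.

```python
def api_monthly_cost(tokens_per_month: float, price_per_1k_tokens: float) -> float:
    """Cost of a pay-per-token API for a given monthly volume."""
    return tokens_per_month / 1000 * price_per_1k_tokens

def cheaper_option(tokens_per_month: float, price_per_1k_tokens: float,
                   hosting_cost_per_month: float) -> str:
    """Return which option costs less for the given monthly volume."""
    api_cost = api_monthly_cost(tokens_per_month, price_per_1k_tokens)
    return "self-hosted" if hosting_cost_per_month < api_cost else "api"

# Hypothetical figures: 0.01 per 1,000 tokens vs. 2,000 per month for GPU hosting.
light_usage = cheaper_option(50_000_000, 0.01, 2000)
heavy_usage = cheaper_option(500_000_000, 0.01, 2000)
```

With these made-up figures, the API is cheaper at 50 million tokens per month and self-hosting is cheaper at 500 million.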

Choosing between these options involves considering the project’s security requirements, budget, and desired level of customization.

Costs: Managing the Budget

When we start a new LLM initiative, we need to understand the costs associated with this kind of system. As with any software system, there are the costs of the teams who develop and maintain it, and on top of those we need to account for the costs of using the LLMs.

While we anticipate that Large Language Model costs will continue to decrease, we need to be sure about the ROI of the new AI system.

The Large Language Model cost depends on how many tokens we are using, the LLM we are employing, the provider of the model, and whether we are hosting the model in our own infrastructure (open-source model). For this reason, one important feature that all LLM systems have to implement is observability of the usage and costs for each model.

Some important features to control the budget in your project with LLMs are:

- Limit the budget for each user

- Cache queries and results

- Evaluate whether a smaller model is enough for specific tasks

- Evaluate the cost and benefits of hosting an open-source model in your infrastructure
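The first two controls in the list can be sketched as a thin wrapper around the model call. This is a minimal illustration under stated assumptions: `model_fn` is a placeholder for a real LLM call, token usage is approximated by counting words, and the class name `BudgetedClient` is our own invention, not a library API.

```python
class BudgetedClient:
    """Wrap a model call with a per-user token budget and a response cache."""

    def __init__(self, model_fn, budget_per_user: int):
        self.model_fn = model_fn          # placeholder for a real LLM call
        self.budget_per_user = budget_per_user
        self.spent = {}                   # tokens used per user (observability)
        self.cache = {}                   # prompt -> answer

    def ask(self, user: str, prompt: str) -> str:
        if prompt in self.cache:
            return self.cache[prompt]     # cached answers consume no budget
        tokens = len(prompt.split())      # rough proxy for the token count
        used = self.spent.get(user, 0)
        if used + tokens > self.budget_per_user:
            raise RuntimeError(f"budget exceeded for user {user}")
        answer = self.model_fn(prompt)
        self.spent[user] = used + tokens
        self.cache[prompt] = answer
        return answer

client = BudgetedClient(model_fn=lambda prompt: prompt.upper(), budget_per_user=5)
first = client.ask("ana", "hello world")
second = client.ask("ana", "hello world")  # served from the cache
```

The `spent` dictionary doubles as a simple usage report, which is the starting point for the observability mentioned earlier.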

If managing budget and security are a priority for your business, we invite you to take a look at solutions like PrivateGPT.

Security: Handling Data with Care

In the realm of Generative AI, data security and privacy are paramount.

We can use LLMs through APIs offered by Azure (which makes new OpenAI models available very quickly), Google, or Amazon. In these scenarios, our data stays under our control within the cloud provider. All these providers have security standards and certifications.

If this is not an option for our project (for example, we can’t send information outside a country or our own infrastructure), then we could use an open source model and have total control of our data.

Another important consideration is that, when we train a model, the data that was used in training can later be surfaced by anyone who uses the model. This does not allow us to define “permissions” for each role and person in the organization. That is why, for most cases, the RAG approach is the best option, giving us total control over who accesses what information.
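One way to picture this control is to filter documents by the user’s role before anything is retrieved or sent to the model. The sketch below is a simplified assumption of how that could look: the documents, roles, and the `visible_documents` helper are all hypothetical, and a real system would enforce permissions in the data store itself.

```python
# Hypothetical knowledge base: each document lists the roles allowed to read it.
DOCUMENTS = [
    {"text": "Q3 salary bands", "allowed_roles": {"hr"}},
    {"text": "Public holiday calendar", "allowed_roles": {"hr", "engineering", "sales"}},
    {"text": "Deployment runbook", "allowed_roles": {"engineering"}},
]

def visible_documents(user_roles, documents):
    """Return only the documents that at least one of the user's roles may read."""
    roles = set(user_roles)
    return [doc["text"] for doc in documents if doc["allowed_roles"] & roles]

# Only these texts would be eligible for retrieval in an engineer's RAG query.
engineer_view = visible_documents({"engineering"}, DOCUMENTS)
```

Since the filter runs before retrieval, restricted text never reaches the prompt, which is not something a trained or fine-tuned model can guarantee.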

Explore our enhanced security solutions to improve your overall system robustness.

Developing Systems Based on Large Language Models

What is LangChain?

LangChain is a cutting-edge open-source framework designed for building applications powered by LLMs. It simplifies the development process, enabling developers to create context-aware, reasoning applications that leverage company data and APIs.

LangChain not only facilitates the building and deployment of AI applications but also provides tools for monitoring and improving their performance, enabling developers to deliver high-quality, reliable solutions.

Microsoft Copilot Studio

Microsoft Copilot Studio introduces a new era of personalized AI copilots, allowing for the customization of Microsoft 365 experiences or the creation of unique copilot applications. It leverages the core technologies of AI conversational models to transform customer and employee experiences.

With Copilot Studio, developers can design, build, and deploy AI copilots that cater to specific scenarios, fostering compliance and enhancing efficiency.

GeneXus Enterprise AI: A Proprietary Framework for Open Source Architectures

GeneXus Enterprise AI offers a unique solution for companies looking to harness the power of LLMs in a secure, monitored, and cost-effective manner. It boosts the creation of AI assistants that can seamlessly integrate with existing operations, enhancing productivity and innovation.

By focusing on data privacy and optimizing AI spending, GeneXus empowers users to solve complex problems using natural language, making advanced AI more accessible than ever.

Abstracta Copilot

Abstracta Copilot is an AI-powered tool designed to enhance software testing, developed by Abstracta in collaboration with Microsoft’s Co-Innovation Lab. Launched in October 2024 at Quality Sense Conf 2024, this tool leverages AI to optimize software testing and documentation, increase efficiency, enhance productivity by up to 30%, and cut costs by about 25%.

- Knowledge Base Access: Searches product documentation and Abstracta’s 16 years of expertise for quick insights.

- AI-Powered Assistance: Provides real-time guidance for application development and testing. It allows testing and analysis teams to interact directly with systems using natural language.

- Business Analysis: Quickly interprets functional requirements and generates user stories.

- Test Case Generation: Creates manual and automated test cases from user stories, focusing on maximizing coverage from the end-user perspective.

- Performance and Security Monitoring: Identifies issues during manual testing in real-time, enabling teams to respond faster.

- Seamless Integration: Operates within the system under test.

- Custom Workflows: Tailored to specific organizational needs, allowing for greater customization and productivity.

At Abstracta, we include Abstracta Copilot as part of our services. Contact us to get started!

Browser Copilot: Simplifying Web Interactions

Developed by our experts at Abstracta, Browser Copilot is an open-source and revolutionary framework that integrates AI assistants into your browser. It supports a wide range of applications, from SAP to Salesforce, providing personalized assistance across various web applications.

Browser Copilot enhances user experience by offering contextual automation and interoperability, making it an invaluable tool for developers and users alike.

We invite you to read our Bantotal case study.

FAQs – Artificial Intelligence (AI) for Dummies

How Do You Explain AI to Beginners?

Artificial Intelligence (AI) is the ability of machines to mimic human thinking and decision-making. It’s like teaching a computer to learn, reason, and solve problems. For example, AI helps your phone recognize your voice (thanks to natural language processing), your emails filter out spam, and even recommend movies or products you might like.

Can You Explain AI in Simple Terms?

AI allows computers to simulate human learning and decision-making. Imagine a robot that improves by practicing—just like a child learning to walk. Many artificial intelligence systems operate machines in ways that improve efficiency, such as in robotics or autonomous vehicles.

Is There an AI for Dummies?

Yes, many beginner-friendly tools explain AI step by step. You can start with guides like this one, or take free intro courses on platforms like Coursera or edX. These cover key areas like machine learning, interpreting big data, natural language processing, and how to experiment with AI tools. Study the basics and develop a deeper technological understanding.

What’s The Difference Between AI and Generative AI?

AI focuses on tasks like decision-making, predictions, and analyzing data to solve problems. Generative AI, a subset of AI, goes further by creating new content such as text, images, music, or code. While traditional AI processes and interprets information, Generative AI produces original outputs, enabling creative and personalized applications.

What Are Large Language Models (LLMs)?

Large Language Models (LLMs) are advanced AI systems trained on huge datasets to understand and generate human-like content. They do this by learning the statistical patterns and inner workings of language itself. These models power tools like ChatGPT, copilots, and AI agents, processing language in a context-aware way.

What Are AI Agents and How Do They Work?

AI Agents are autonomous systems that complete tasks without human input. Unlike copilots, agents plan actions, chain tools, and adapt to changing goals. They’re useful for workflows like analyzing data, summarizing content, or automating business operations across apps and APIs.

What Is Model Context Protocol (MCP)?

Model Context Protocol (MCP) is an open standard that lets AI systems interact with real applications—like web apps or CRMs—without custom integrations. It allows agents and copilots to understand UI elements, retrieve context, and perform actions across enterprise tools securely.

What Is Retrieval Augmented Generation (RAG)?

Retrieval Augmented Generation (RAG) is a method where AI combines external data—like from vector databases—with generative models. This improves accuracy by grounding outputs in real, up-to-date information.

What Role Does Smart Data Play in Generative AI?

Smart data is crucial in generative AI because it ensures that the models are trained on high-quality, relevant, and actionable information. Unlike raw data, smart data has been processed, cleaned, and enriched to remove noise and inaccuracies.

How Does Database Management Support Generative AI?

Database management helps store, access, and maintain the vast amounts of data used for training and retrieval in generative models efficiently. It is especially critical when working with vector databases and embeddings to support Retrieval Augmented Generation (RAG) workflows.

What Does a Data Scientist Do in AI Projects?

A data scientist applies data science methods to train and evaluate models. Their work starts with collecting data that’s relevant and clean, enabling effective AI outcomes.

How to Use Generative AI Ethically?

Using generative AI ethically involves promoting transparency, addressing biases, and safeguarding privacy. Companies should adhere to ethical AI frameworks and design systems that align with fairness and inclusivity, fostering trust in AI-driven solutions. Understanding the AI revolution means adapting to new challenges and opportunities while prioritizing responsibility and societal impact in AI applications.

What is AI Hardware?

AI hardware refers to specialized devices like GPUs, TPUs, and custom chips designed to efficiently handle the processing demands of AI systems. These tools provide the necessary balance between speed, scalability, and energy efficiency, enabling advancements in AI applications across industries.

What Are Some Possible AI-Driven Futures for Businesses?

The AI-driven future holds immense potential for innovation across industries, from automating repetitive tasks to creating personalized customer experiences. With the growing adoption of generative AI, businesses can explore solutions like natural language processing, advanced predictive analytics, and AI copilots tailored to specific industries.

How Can I Improve at Writing AI Prompts?

Learning to craft clear and structured instructions is key to getting quality outputs from generative models. Start experimenting, and you’ll quickly develop better results. You can discover tips and examples online to improve your skills in writing AI prompts.

How We Can Help You

With nearly two decades of experience and a global presence, Abstracta is a leading technology solutions company with offices in the United States, Canada, the United Kingdom, Chile, Colombia, and Uruguay. Our team specializes in AI-driven solutions development and end-to-end software testing services, and helps enterprises adopt artificial intelligence effectively.

We believe that building strong ties propels us further and helps us enhance our clients’ software. That’s why we’ve forged robust partnerships with industry leaders like Microsoft, Datadog, Tricentis, Perforce BlazeMeter, Sauce Labs, and PractiTest to create and implement customized solutions for your business applications and empower companies with AI.

Explore our AI software development services!

Contact us and join us in shaping the future of technology.

Follow us on LinkedIn & X to be part of our community!

Matías Reina, Co-CEO at Abstracta
