LLMs, vector databases, RAG, copilots, security, and costs—explained simply! Dive into Generative AI for Dummies and start your AI journey with Abstracta!

By Matías Reina

With technology developing at a breathtaking rate, Generative AI stands at the forefront of innovation, offering unprecedented capabilities in natural language, image, video, and audio processing.

Whether you’re a seasoned developer or a businessperson, understanding the fundamentals of Generative AI and its applications can significantly enhance your projects and solutions.

Let’s embark on a journey to demystify Generative AI and explore its potential together.

Explore our tailored AI Software Development & Copilots service!

Key Concepts About Generative Artificial Intelligence

LLMs, GPT-4, OpenAI, Gemini, AI, GenAI, and More

There are many players and new concepts in this field, so let’s start by understanding the general landscape.

Large Language Models (LLMs) are advanced AI systems designed to understand and generate human-like text. Multimodal models extend these capabilities to other types of content, such as images, audio, and video.

GPT-4 and Gemini are specific LLMs, developed by OpenAI and Google, respectively. OpenAI is a leading AI research organization responsible for creating GPT-4 and other groundbreaking AI technologies, and it has a strong partnership with Microsoft.

Generative AI (GenAI) refers to a specific type of AI that can create new content, such as text, images, and music. All these concepts fall under the broader umbrella of Artificial Intelligence (AI), which encompasses various technologies that simulate human intelligence processes in machines.

Assistants, Copilots, and GPTs – Generative AI for Dummies

Copilots, Assistants, and GPTs are all tools leveraging advanced AI to enhance productivity and efficiency in business.

Assistants

Assistants, such as virtual or digital assistants, are AI-powered programs that help manage schedules, handle communications, and perform various tasks, similar to having an intelligent secretary.

GPTs

GPTs are custom versions of ChatGPT that combine instructions, extra knowledge, and any combination of skills, such as code interpretation, web browsing, and external APIs. GPTs can be published in OpenAI’s GPT Store.

In the future, not having GPTs in your company might be like not having an app or a website today. GPTs are specialized AI assistants for different tasks. OpenAI uses the name Assistants for a similar concept, with one difference: Assistants can only be called through the API, not used in the ChatGPT front end.

Copilots

Copilots are AI-driven systems designed to support professionals by automating tasks, providing suggestions, and streamlining workflows, acting like a second pair of hands. Copilots share capabilities with GPTs and Assistants, with one difference: the copilot shares “the context” with the pilot (the pilot is you!).

What exactly is ‘the context’? It could be anything from your screen, your audio stream, or your camera to any other information that you, as the user or pilot, are receiving. This information is shared with the copilot at the same time.

This allows the copilot to help in a smart and efficient way, working together with the pilot. The copilot can access this context and can also execute actions within it, which we recommend doing only with the pilot’s agreement.

Microsoft is leading the development of copilots, integrating them into each of its products.
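The approval step described above can be sketched in Python. This is a minimal illustration, not how Microsoft or any other vendor implements copilots: the `Copilot` class, the `ProposedAction` type, and the rule that stands in for the LLM's suggestion are all hypothetical. The idea it shows is that the copilot reads the shared context, proposes an action, and runs it only if the pilot approves.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class ProposedAction:
    description: str
    run: Callable[[], str]  # the action to execute if the pilot agrees


@dataclass
class Copilot:
    # Hypothetical callback that asks the pilot (the user) for approval.
    approve: Callable[[ProposedAction], bool]
    log: List[str] = field(default_factory=list)

    def suggest(self, context: dict) -> Optional[ProposedAction]:
        # Placeholder rule standing in for an LLM reading the shared context:
        # if the pilot's screen shows an unsaved form, propose saving it.
        if context.get("screen") == "unsaved_form":
            return ProposedAction("Save the form", lambda: "form saved")
        return None

    def assist(self, context: dict) -> str:
        action = self.suggest(context)
        if action is None:
            return "no suggestion"
        # Actions run only with the pilot's agreement.
        if not self.approve(action):
            self.log.append(f"declined: {action.description}")
            return "declined by pilot"
        self.log.append(f"executed: {action.description}")
        return action.run()


# Usage: a pilot who approves every proposed action.
copilot = Copilot(approve=lambda action: True)
print(copilot.assist({"screen": "unsaved_form"}))  # form saved
```

In a real copilot, `approve` would show the proposal in the user interface and wait for the pilot's answer.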

Vector Databases and Embeddings

Vector databases and embeddings are crucial tools for handling and utilizing complex data in business. Embeddings are a way to represent data, such as text or images, as numerical vectors in a high-dimensional space, capturing the essence and relationships of the data points.

For instance, for a customer review, the embedding converts the text into a vector that represents the review’s sentiment and context. Vector databases store these embeddings efficiently, allowing for fast retrieval and similarity (semantic) searches.

This means that businesses can quickly find and analyze related data points, such as identifying similar customer feedback or finding relevant documents, enhancing decision-making and personalized customer experiences.
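As a minimal sketch of how a similarity search works, the Python below stores hand-written three-dimensional vectors and ranks them by cosine similarity. The vectors are made up for illustration (real embedding models produce hundreds or thousands of dimensions), and `InMemoryVectorStore` is a hypothetical stand-in for a real vector database, which would add indexing to keep searches fast at scale.

```python
import math
from typing import List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """1.0 means same direction (very similar); values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Toy vector store: keeps (text, vector) pairs and scans them all on search."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Vector]] = []

    def add(self, text: str, vector: Vector) -> None:
        self.items.append((text, vector))

    def search(self, query: Vector, k: int = 1) -> List[str]:
        scored = [(cosine_similarity(query, vec), text) for text, vec in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


# Hypothetical dimensions: [positive sentiment, negative sentiment, mentions delivery]
store = InMemoryVectorStore()
store.add("Great product, arrived early", [0.9, 0.1, 0.8])
store.add("Terrible quality, broke in a day", [0.1, 0.9, 0.1])
store.add("Love it, works perfectly", [0.95, 0.05, 0.1])

# Hypothetical embedding of a new review, e.g. "Fast delivery, very happy"
print(store.search([0.85, 0.1, 0.9], k=1))  # ['Great product, arrived early']
```

The new review matches the positive review that also mentions delivery, even though the two texts share no words, which is what makes semantic search useful for finding similar customer feedback.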

Tokens: What Are They?

In the context of AI and LLMs, tokens are the fundamental units of information (text/image) that the model processes.

Think of tokens as pieces of words or characters. For example, in the sentence “Artificial intelligence is transforming business,” each short, common word might be a single token, while longer or rarer words can be split into several tokens. LLMs like GPT-4 break text down into these tokens to understand and generate language.

The number of tokens impacts the model’s processing time and cost; more tokens mean more complexity and computation. For businesses, understanding tokens helps manage the efficiency and cost of using AI models for tasks like content generation, data analysis, and customer interactions.
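To see how token counts translate into cost, here is a minimal Python sketch. It assumes a naive tokenizer that splits on words and punctuation; real models use sub-word tokenizers (for OpenAI models, the `tiktoken` library gives exact counts), so actual numbers will differ. The per-1,000-token prices are hypothetical placeholders, not any provider's real rates.

```python
import re
from typing import List


def naive_tokenize(text: str) -> List[str]:
    # Each word and each punctuation mark becomes one token.
    # Real LLM tokenizers split rarer words into several sub-word pieces.
    return re.findall(r"\w+|[^\w\s]", text)


def estimate_cost(prompt: str, expected_output_tokens: int,
                  input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Input and output tokens are each billed per 1,000 tokens."""
    input_tokens = len(naive_tokenize(prompt))
    return (input_tokens / 1000 * input_price_per_1k
            + expected_output_tokens / 1000 * output_price_per_1k)


tokens = naive_tokenize("Artificial intelligence is transforming business")
print(len(tokens))  # 5

# Hypothetical prices: $0.01 per 1k input tokens, $0.03 per 1k output tokens
print(estimate_cost("Artificial intelligence is transforming business", 1000, 0.01, 0.03))
```

Note that output tokens often dominate the cost, so limiting response length is as important as keeping prompts short.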

Retrieval Augmented Generation (RAG)

One of the key concepts in Generative AI applications is Retrieval Augmented Generation (RAG). This approach combines information retrieval techniques with generative large language models (LLMs) to improve the quality and relevance of the generated text.

By accessing external knowledge sources (usually, but not only, stored in vector databases), RAG enables models to produce more accurate, informative, and contextually relevant content.

RAG operates through a series of steps, starting with the retrieval of relevant information from large knowledge bases. This information is then pre-processed and added to the prompt sent to the LLM, giving the model the context it needs to answer accurately.

Image: RAG Retrieval Augmented Generation.

The result is a generative model that can access up-to-date information, ground its answers in factual knowledge, and generate responses with greater contextual relevance and factual consistency.
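The RAG steps described above (retrieve, augment the prompt, generate) can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: keyword overlap stands in for the vector similarity search a real system would run against a vector database, and `generate` is a placeholder for whatever LLM API the application calls.

```python
import re
from typing import Callable, List, Set


def words(text: str) -> Set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    # Step 1: rank documents by how many words they share with the query.
    # (Stand-in for an embedding-based similarity search.)
    query_words = words(query)
    ranked = sorted(documents, key=lambda d: len(query_words & words(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context_docs: List[str]) -> str:
    # Step 2: augment the prompt with the retrieved context.
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return ("Answer using only the context below.\n"
            f"Context:\n{context}\n\n"
            f"Question: {query}")


def rag_answer(query: str, documents: List[str],
               generate: Callable[[str], str], k: int = 2) -> str:
    # Step 3: send the grounded prompt to the LLM.
    return generate(build_prompt(query, retrieve(query, documents, k)))


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is open Monday to Friday.",
    "Refunds require the original receipt.",
]
# For illustration, the "LLM" simply echoes the prompt it receives.
print(rag_answer("How long do refunds take?", docs, generate=lambda prompt: prompt))
```

Only the two refund documents reach the prompt; the instruction to answer from the given context is what grounds the model's response in them.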

Specific Tools for Specific Tasks

It’s important to point out that not all queries or requirements can be effectively solved using a one-size-fits-all LLM approach.

Tasks that need data from other systems or involve complex math calculations are often better handled by specialized tools, as described in this article by LangChain. This approach keeps Generative AI applications efficient and tailored to the unique requirements of each project. The LLM-based system can answer the prompt by using specific tools or RAG information to complete the response. These tools can execute your existing code or connect to the APIs of your backend services, such as a CRM or ERP.
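Here is a minimal Python sketch of tool use, under stated assumptions: the model's decision is represented as a structured tool call (a name plus arguments), similar to the function-calling features some LLM APIs offer, and the application looks up and runs the matching function. The tool names, the compound-interest calculation, and the in-memory "CRM" data are hypothetical; in a real system these functions would wrap your existing code or backend APIs.

```python
from typing import Any, Callable, Dict

# Registry of functions the LLM is allowed to call.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as a tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def compound_interest(principal: float, rate: float, years: int) -> float:
    # Exact math is delegated to code instead of the LLM.
    return round(principal * (1 + rate) ** years, 2)


@tool
def get_customer_tier(customer_id: str) -> str:
    # Hypothetical stand-in for a call to a CRM API.
    fake_crm = {"C-001": "gold", "C-002": "silver"}
    return fake_crm.get(customer_id, "unknown")


def run_tool_call(call: Dict[str, Any]) -> Any:
    """Execute a tool call shaped like {"name": ..., "arguments": {...}}."""
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# Suppose the LLM responded with this tool call instead of guessing the math:
call = {"name": "compound_interest",
        "arguments": {"principal": 1000, "rate": 0.05, "years": 2}}
print(run_tool_call(call))  # 1102.5
```

Restricting execution to a registry of known functions also limits what the model can trigger, since any unregistered tool name is rejected.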

Don’t miss this article! Testing Applications Powered by Generative Artificial Intelligence.

Train, Fine-Tune, or Just Use a Pre-Trained Model?

Generative AI offers flexibility in how to use models. Companies often face the decision of whether to train their own models, fine-tune a pre-trained model, or simply use an existing pre-trained model.

This choice depends on several factors, including the specific requirements of the use case, the availability of data, and the resources at hand.

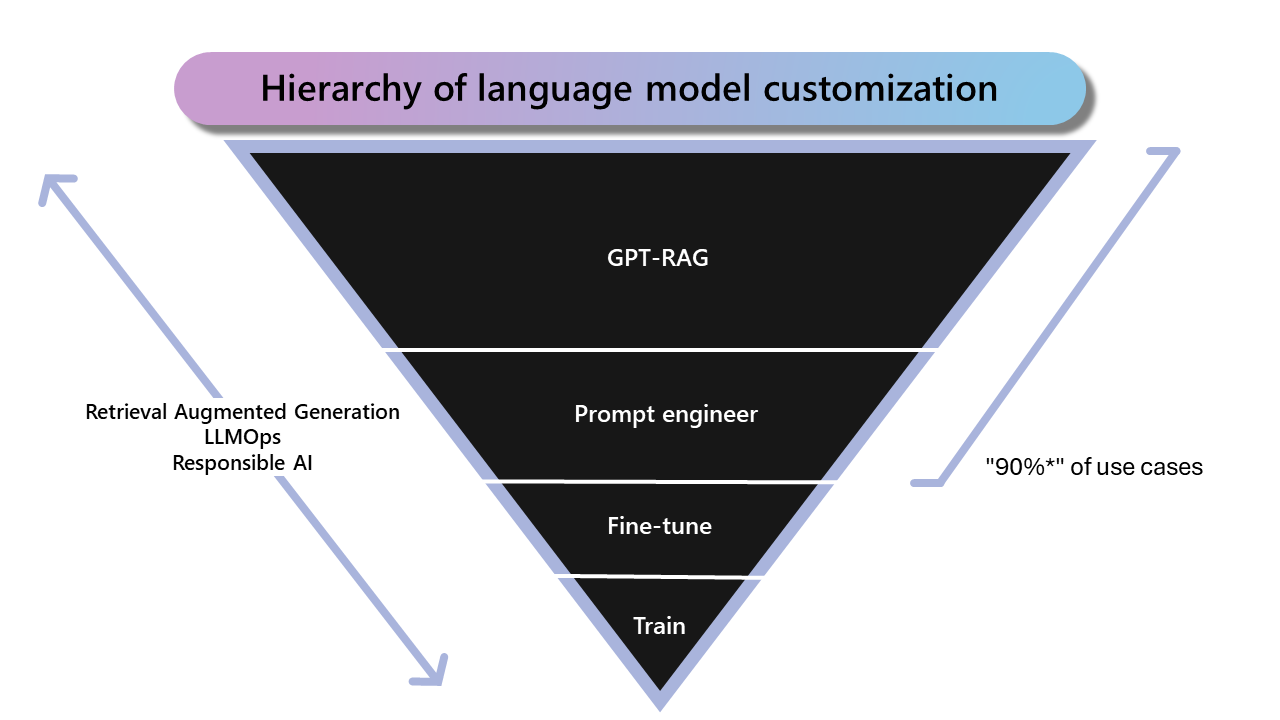

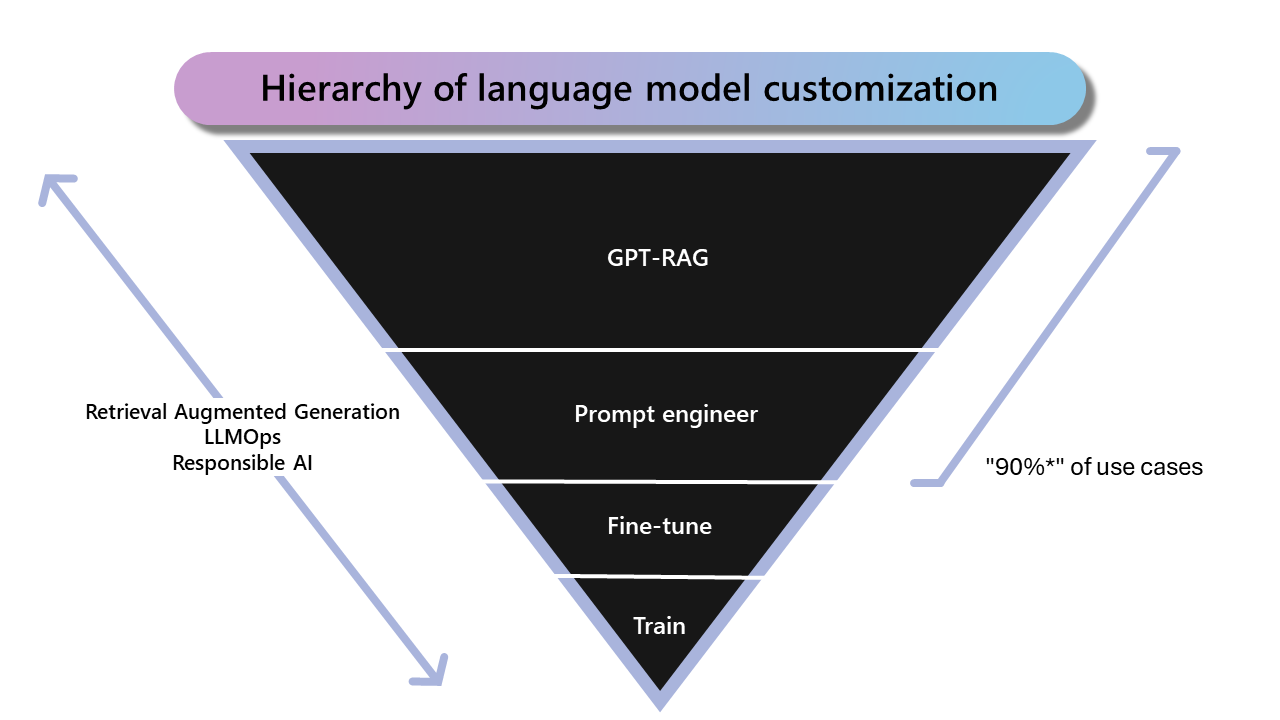

Graph: Hierarchy of language model customization

Based on our experience and information from Microsoft, 90% of use cases can be resolved with RAG and prompt engineering. This is good news, because fine-tuning requires a lot of high-quality data, and the training process consumes significant hardware resources, such as GPU capacity.

When considering whether to train a specific model from scratch or to fine-tune an existing one, different scenarios can lead to different recommendations. Here’s how common scenarios align with these approaches:

Low Latency Requirements

- Fine-tuning a Model: We often recommend fine-tuning because it can adjust only part of a pre-trained model, such as its final layers. This can be quicker and less computationally intensive than training a model from scratch.

Small Model Requirements

- Training Specific Models: If the task is narrow and well-defined, starting from scratch might be better. You can design the architecture to be compact.

- Fine-tuning a Model: Alternatively, fine-tuning a small pre-trained model for your needs is also an option. The choice depends on the project’s constraints.

Hardware Efficiency Requirements

- Fine-tuning a Model: Starting with a pre-trained model usually requires less computation. Thus, it’s generally more hardware-efficient.

- Training Specific Models: If the task is highly specialized, designing an efficient model from scratch might be considered. This approach requires careful planning.

In summary, the choice between training specific models from scratch or fine-tuning an existing model depends on the specific requirements of the project, including latency, model size, and hardware constraints.

Note: when we train or fine-tune a model, the data used in the process becomes part of the model, and we cannot control who accesses it. Anyone using the model could potentially extract this data.

Open Source vs. Proprietary Models

The Generative AI landscape includes both open-source and proprietary models. Open-source models (Llama 3, Vicuna, Alpaca) can run on our own infrastructure. Proprietary models (GPT-4, GPT-4o, Claude, Gemini) are only available through cloud providers such as Azure, or directly from their vendors, such as OpenAI.

Running the models in our own infrastructure could reduce the cost (in some scenarios with heavy usage of the models) and give us more control over the sensitive data.
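Whether self-hosting actually saves money comes down to a break-even point: a self-hosted model has a roughly fixed monthly cost, while an API is billed per token. The Python sketch below computes that point. All figures are hypothetical, and the calculation deliberately ignores factors such as engineering time, hardware utilization limits, and differences in model quality.

```python
def monthly_api_cost(tokens_per_month: float, price_per_1k_tokens: float) -> float:
    """Pay-per-use cost of a hosted API."""
    return tokens_per_month / 1000 * price_per_1k_tokens


def break_even_tokens(self_hosting_monthly_cost: float, price_per_1k_tokens: float) -> float:
    """Monthly token volume at which the API costs as much as self-hosting."""
    return self_hosting_monthly_cost / price_per_1k_tokens * 1000


# Hypothetical figures: $2,000/month for a self-hosted GPU server,
# versus an API priced at $0.01 per 1,000 tokens.
threshold = break_even_tokens(2000, 0.01)
print(f"Break-even: {threshold:,.0f} tokens per month")  # Break-even: 200,000,000 tokens per month

for volume in (50_000_000, 300_000_000):
    api = monthly_api_cost(volume, 0.01)
    cheaper = "self-hosting" if api > 2000 else "API"
    print(f"{volume:,} tokens: API ${api:,.2f} -> {cheaper} is cheaper")
```

Below the threshold, the pay-per-use API is cheaper; above it, the fixed cost of self-hosting starts to pay off, which is why self-hosting mainly makes sense with heavy usage.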

Choosing between these options involves considering the project’s security requirements, budget, and desired level of customization.

Costs: Managing the Budget

When we launch a new LLM initiative, we need to understand the costs associated with this kind of system. As with any software, there are costs for the teams who develop and maintain it, but we also need to account for the cost of using the LLMs.

While we expect Large Language Model costs to keep decreasing, we still need to confirm the ROI of the new AI system.

The cost of a Large Language Model depends on how many tokens we use, which model we employ, the model’s provider, and whether we host the model on our own infrastructure (open-source models). For this reason, every LLM system should implement observability of usage and costs for each model.

Some important features to control the budget in your project with LLMs are:

- Limit the budget for each user

- Caching queries and results

- Evaluate whether a smaller model is enough for specific tasks

- Evaluate the cost and benefits of hosting an open-source model in your infrastructure
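The first two practices in this list, along with the per-model cost observability mentioned above, can be combined in a thin wrapper around the model call. This Python sketch is illustrative only: `BudgetedLLMClient` is hypothetical, `call_model` stands in for your provider's API and is assumed to return the answer together with the number of tokens used, and the prices are placeholders.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, Tuple

# Hypothetical provider call: (model, prompt) -> (answer, tokens_used)
ModelCall = Callable[[str, str], Tuple[str, int]]


class BudgetExceeded(Exception):
    pass


class BudgetedLLMClient:
    def __init__(self, call_model: ModelCall, price_per_1k: Dict[str, float],
                 user_budget: float) -> None:
        self.call_model = call_model
        self.price_per_1k = price_per_1k
        self.user_budget = user_budget
        self.spent_by_user: DefaultDict[str, float] = defaultdict(float)
        self.cost_by_model: DefaultDict[str, float] = defaultdict(float)  # observability
        self.cache: Dict[Tuple[str, str], str] = {}

    def ask(self, user: str, model: str, prompt: str) -> str:
        key = (model, prompt)
        if key in self.cache:
            return self.cache[key]  # repeated query: answered from cache at no cost
        # Checked before each call, so the final call may overshoot the limit slightly.
        if self.spent_by_user[user] >= self.user_budget:
            raise BudgetExceeded(f"{user} has reached the budget of {self.user_budget}")
        answer, tokens_used = self.call_model(model, prompt)
        cost = tokens_used / 1000 * self.price_per_1k[model]
        self.spent_by_user[user] += cost
        self.cost_by_model[model] += cost
        self.cache[key] = answer
        return answer


def fake_model(model: str, prompt: str) -> Tuple[str, int]:
    return f"echo: {prompt}", 1000  # pretend every call uses 1,000 tokens


client = BudgetedLLMClient(fake_model, {"small-model": 0.5}, user_budget=1.0)
client.ask("ana", "small-model", "Summarize this ticket")
client.ask("ana", "small-model", "Summarize this ticket")  # cache hit, no charge
print(client.spent_by_user["ana"])  # 0.5
```

In this sketch the cache is shared across users, which is only appropriate when answers do not depend on who is asking; otherwise, include the user or their permissions in the cache key.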

If managing budget and security is a priority for your business, we invite you to take a look at solutions like PrivateGPT.

Security: Handling Data with Care

In the realm of Generative AI, data security and privacy are paramount.

We can use LLMs through APIs offered by Azure (which makes new OpenAI models available very quickly!), Google, or Amazon. In these scenarios, our data remains under our control within that cloud provider. All of these providers meet established security standards and hold certifications.

If this is not an option for our project (for example, we can’t send information outside a country or our own infrastructure), then we could use an open source model and have total control of our data.

Another important consideration is that, when we train a model, the data used in training can later be accessed by anyone who uses the model. This prevents us from defining “permissions” for each role and person in the organization. That is why, in most cases, RAG is the best option: because documents stay outside the model, we keep full control over who accesses what information.
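Because RAG keeps documents outside the model, permissions can be enforced at retrieval time, before anything reaches the prompt. Here is a minimal Python sketch of that idea; the `Document` type, the role names, and the keyword matching (a stand-in for vector search) are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Document:
    text: str
    allowed_roles: FrozenSet[str]  # roles permitted to see this document


def retrieve_for_user(query: str, documents: List[Document],
                      user_roles: Set[str]) -> List[str]:
    # 1. Enforce permissions first: the LLM never sees documents the user can't access.
    visible = [doc for doc in documents if doc.allowed_roles & user_roles]
    # 2. Then match the query against what remains (keyword overlap stands in for vector search).
    terms = set(query.lower().split())
    return [doc.text for doc in visible if terms & set(doc.text.lower().split())]


docs = [
    Document("salary bands for 2024", frozenset({"hr"})),
    Document("holiday calendar for 2024", frozenset({"hr", "employee"})),
]
print(retrieve_for_user("salary 2024", docs, {"employee"}))  # ['holiday calendar for 2024']
print(retrieve_for_user("salary 2024", docs, {"hr"}))
```

An employee asking about salaries never gets the HR-only document into the prompt, so the model cannot leak it, something a fine-tuned model could not guarantee.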

Explore our enhanced security solutions to improve your overall system robustness.

Developing Systems Based on Large Language Models

What is LangChain?

LangChain is a cutting-edge open-source framework for building applications powered by LLMs. It simplifies the development process, enabling developers to create context-aware reasoning applications that leverage company data and APIs.

LangChain not only facilitates the building and deployment of AI applications but also provides tools for monitoring and improving their performance, enabling developers to deliver high-quality, reliable solutions.

Microsoft Copilot Studio

Microsoft Copilot Studio introduces a new era of personalized AI copilots, allowing for the customization of Microsoft 365 experiences or the creation of unique copilot applications. It leverages the core technologies of AI conversational models to transform customer and employee experiences.

With Copilot Studio, developers can design, build, and deploy AI copilots that cater to specific scenarios, fostering compliance and enhancing efficiency.

GeneXus Enterprise AI: A Proprietary Framework for Open Source Architectures

GeneXus Enterprise AI offers a unique solution for companies looking to harness the power of LLMs in a secure, monitored, and cost-effective manner. It boosts the creation of AI assistants that can seamlessly integrate with existing operations, enhancing productivity and innovation.

By focusing on data privacy and optimizing AI spending, GeneXus empowers users to solve complex problems using natural language, making advanced AI more accessible than ever.

Abstracta Copilot

Abstracta Copilot is an AI-powered tool designed to enhance software testing, developed by Abstracta in collaboration with Microsoft’s Co-Innovation Lab. Launched in October 2024 at Quality Sense Conf 2024, this tool leverages AI to optimize software testing and documentation, increasing efficiency, enhancing productivity by up to 30%, and cutting costs by about 25%.

- Knowledge Base Access: Searches product documentation and Abstracta’s 16 years of expertise for quick insights.

- AI-Powered Assistance: Provides real-time guidance for application development and testing. It allows testing and analysis teams to interact directly with systems using natural language.

- Business Analysis: Quickly interprets functional requirements and generates user stories.

- Test Case Generation: Creates manual and automated test cases from user stories, focusing on maximizing coverage from the end-user perspective.

- Performance and Security Monitoring: Identifies issues during manual testing in real-time, enabling teams to respond faster.

- Seamless Integration: Operates within the system under test.

- Custom Workflows: Tailored to specific organizational needs, allowing for greater customization and productivity.

At Abstracta, we include Abstracta Copilot as part of our services. Contact us to get started!

Browser Copilot: Simplifying Web Interactions

Developed by our experts at Abstracta, Browser Copilot is an open-source and revolutionary framework that integrates AI assistants into your browser. It supports a wide range of applications, from SAP to Salesforce, providing personalized assistance across various web applications.

Browser Copilot enhances user experience by offering contextual automation and interoperability, making it an invaluable tool for developers and users alike.

We invite you to read our Bantotal case study.

FAQs – Generative AI for Dummies

What is Generative AI in Simple Terms?

Generative AI refers to artificial intelligence systems that can generate new content, ideas, or data based on their training. It’s like teaching a computer to be creative, enabling it to produce everything from text to images that never existed before.

How to Learn Generative AI for Beginners?

Start by understanding the basics of machine learning and AI. Online courses, tutorials, and resources can provide a solid foundation. Experimenting with tools and platforms that offer pre-trained models can also be a practical way to learn by doing.

What is Gen AI for Dummies?

This Generative AI for Dummies guide offers a beginner-friendly approach to understanding generative AI. It breaks down complex concepts into understandable chunks, making it easier for beginners to grasp how AI can create new, original content from what it has learned.

How Do You Explain AI to Beginners?

AI is like teaching a computer to think and learn. It’s about creating algorithms that allow computers to solve problems, make decisions, and even understand human language, making them capable of performing tasks that typically require human intelligence.

Which AI is Best for Developers?

The best AI for developers depends on their specific needs and projects. Large language models like GPT (Generative Pre-trained Transformer) are highly versatile for coding, content generation, and more. Tools like GitHub Copilot can also enhance coding efficiency by suggesting code snippets.

Can AI Replace Coders, Developers, and Testers?

AI can automate certain aspects of coding and testing, and assist software developers and testers by suggesting code snippets and identifying errors. However, it’s unlikely to replace coders and testers, as human creativity, problem-solving, and decision-making are crucial in software development. Still, it’s paramount for professionals to stay up to date and be able to leverage AI.

Don’t miss this article! ChatGPT: Will AI Replace Testers?

How We Can Help You

With over 16 years of experience and a global presence, Abstracta is a leading technology solutions company specializing in end-to-end software testing services and AI software development.

We believe that building strong partnerships propels us further and helps us enhance our clients’ software. That’s why we’ve forged robust partnerships with industry leaders like Microsoft, Datadog, Tricentis, and Perforce BlazeMeter to create and implement customized solutions for your business applications and empower companies with AI.

Explore our AI software development services. Contact us and join us in shaping the future of technology.

Follow us on LinkedIn & X to be part of our community!
