ChatGPT has been revolutionizing the way many people work since its launch. This article explores its impact on software testing: we examine some of ChatGPT’s uses in testing and discuss whether it can replace testers or help them.

ChatGPT is arguably one of the most discussed AI-powered content generation tools since it became widely available. It is a chatbot powered by Artificial Intelligence (AI) that uses Natural Language Processing (NLP) to generate responses to your questions and prompts.

With training on a massive dataset of conversational text, ChatGPT can accurately imitate the way people speak and write in a variety of contexts and languages.

“We are living a historic milestone. It is the first time that AI is at everyone’s fingertips, without friction or difficulties; it is the tip of the iceberg of everything that is to come and all we will be able to do with it,” emphasized Federico Toledo, co-founder and CQO at Abstracta.

“I think we must question and educate ourselves, to be prepared and be able to take it as a support tool instead of a threat,” he outlined.

What is ChatGPT in Software Testing?

Before we delve into ChatGPT, let’s touch on some related concepts that might come in handy to understand it.

What is Artificial Intelligence?

AI is a broad field that involves the development of intelligent machines and systems that can perform tasks that would typically require human intelligence, such as learning, problem-solving, decision-making, and perception.

What is Machine Learning?

Machine learning is a field of AI that focuses on developing algorithms and models that can learn from data and improve their performance over time without being explicitly programmed. These algorithms and models can be used to make predictions or decisions based on data and adapt as they are exposed to new information.

What is Natural Language Processing (NLP)?

NLP, a subfield of Artificial Intelligence, centers on computer-human interaction via natural languages like speech and text. It encompasses various functions, from text and speech recognition to language translation and text summarization. NLP not only involves creating algorithms for understanding and generating human language but also finds diverse applications, such as chatbots, voice assistants, and text analysis, reshaping communication and computer interaction across various industries.

What is Generative AI?

Generative AI is Artificial Intelligence that can generate new content by utilizing existing text, audio files, or images. It leverages AI and machine learning algorithms to enable machines to generate content, just like ChatGPT does.

Can ChatGPT Perform Software Testing?

When we see these kinds of developments, our initial concern is often whether they will be able to replace us or be a helpful tool for our job.

As part of our effort to better understand the impact of an AI like ChatGPT, we tried it on some of the activities that testers do as part of their work: designing test cases, test ideas, or test data; automating scripts; reporting errors; and assembling SQL queries to generate test data or verify results.

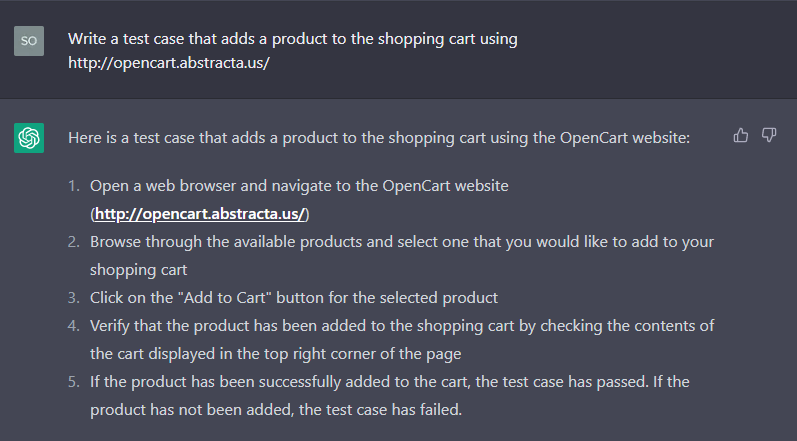

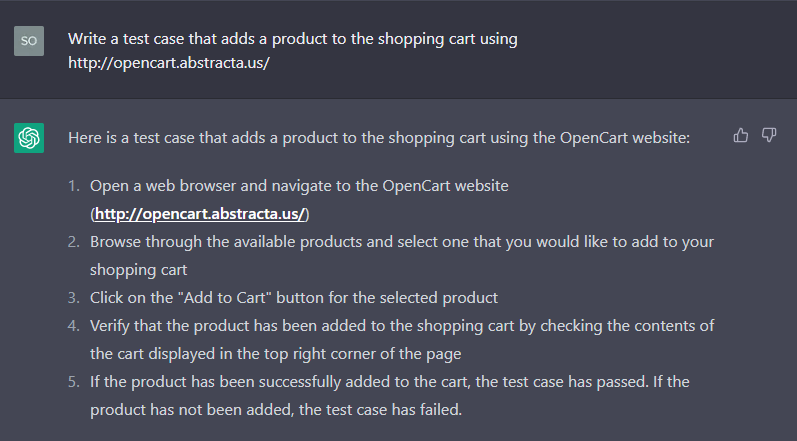

1. Designing test cases and test data

Initially, it appeared we’d found what we wanted. But a closer look revealed a high-level test with key information missing.

Notably, when we repeated the question, ChatGPT improved its responses. In a follow-up, it added the missing steps, and days later it warned us that it could only provide general steps because of its internet limitations.

Exploring Use Cases

This use case is unlikely to be useful, so let’s consider other possibilities. For instance, if we need to test a system with particular inputs, we can brainstorm with ChatGPT. We experimented with various requests and questions.

Software Testing Ideas and Data Requests

- Give me test data for a login form.

- Can you give me test ideas for a bank transaction?

- Can you help me with test data for a date picker including edge cases?

The answers were interesting and precise, and in some cases ChatGPT even explained why each case was worth trying.
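To make the date picker request concrete, here is a minimal sketch of the kind of edge cases such a prompt tends to surface, turned into executable checks. It is not ChatGPT’s actual answer: the class name `DatePickerEdgeCases`, the helper names, and the allowed range used in `main` are assumptions made for illustration.

```java
import java.time.DateTimeException;
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: edge-case test data for a date picker.
class DatePickerEdgeCases {

    /** Returns true when year/month/day form a real calendar date. */
    static boolean isValidDate(int year, int month, int day) {
        try {
            LocalDate.of(year, month, day); // throws for impossible dates
            return true;
        } catch (DateTimeException e) {
            return false;
        }
    }

    /** Boundary dates just inside and just outside an allowed range [min, max]. */
    static List<LocalDate> boundaryDates(LocalDate min, LocalDate max) {
        List<LocalDate> dates = new ArrayList<>();
        dates.add(min.minusDays(1)); // just before the range: should be rejected
        dates.add(min);              // first allowed day
        dates.add(min.plusDays(1));
        dates.add(max.minusDays(1));
        dates.add(max);              // last allowed day
        dates.add(max.plusDays(1));  // just after the range: should be rejected
        return dates;
    }

    public static void main(String[] args) {
        // Leap-year and impossible-date candidates to type into the picker.
        System.out.println("2024-02-29 valid? " + isValidDate(2024, 2, 29));
        System.out.println("2023-02-29 valid? " + isValidDate(2023, 2, 29));
        System.out.println("1900-02-29 valid? " + isValidDate(1900, 2, 29));
        System.out.println("2024-04-31 valid? " + isValidDate(2024, 4, 31));

        // Assumed allowed range for the picker under test.
        LocalDate min = LocalDate.of(2024, 1, 1);
        LocalDate max = LocalDate.of(2024, 12, 31);
        System.out.println("Boundaries: " + boundaryDates(min, max));
    }
}
```

The point of keeping the data in code is that the same list can feed both manual exploration and a parameterized automated test.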

Another way we put the chat to work was asking for specific data that would otherwise take us some time to find or write:

- Give me 10 city names with special characters.

- Give me last names with more than 15 characters.

- Give me a 500-word paragraph using all the characters.

2. Automating test cases

Once we had a test case, we tried to automate it, so we gave ChatGPT the following instructions:

“Give me the code to automate that test case using Java, Selenium, and JUnit.”

This is the output we got:

    import org.junit.After;
    import org.junit.Assert;
    import org.junit.Before;
    import org.junit.Test;
    import org.openqa.selenium.By;
    import org.openqa.selenium.WebDriver;
    import org.openqa.selenium.WebElement;
    import org.openqa.selenium.chrome.ChromeDriver;

    public class AddToCartTest {
        private WebDriver driver;

        @Before
        public void setUp() {
            // Set up the Chrome driver
            System.setProperty("webdriver.chrome.driver", "/path/to/chromedriver");
            driver = new ChromeDriver();
        }

        @Test
        public void testAddToCart() {
            // Open the OpenCart website
            String url = "http://opencart.abstracta.us/";
            driver.get(url);

            // Select a product to add to the cart
            WebElement addToCartButton = driver.findElement(By.cssSelector("a.btn.btn-primary"));
            String productLink = addToCartButton.getAttribute("href");

            // Add the product to the cart
            addToCartButton.click();

            // Check the contents of the cart
            driver.get(url + "index.php?route=checkout/cart");
            WebElement cartTable = driver.findElement(By.cssSelector("table.table.table-bordered"));
            boolean productAdded = false;
            for (WebElement row : cartTable.findElements(By.cssSelector("tr"))) {
                WebElement productColumn = row.findElement(By.cssSelector("td.text-left a"));
                if (productColumn.getAttribute("href").equals(productLink)) {
                    productAdded = true;
                    break;
                }
            }

            // Assert that the product was successfully added to the cart
            Assert.assertTrue(productAdded);
        }

        @After
        public void tearDown() {
            // Close the browser
            driver.quit();
        }
    }


Followed by this explanation:

This code uses the Selenium library to automate a web browser and interact with the OpenCart website, and the JUnit library to add assertions to the test case. The ‘@Before’ and ‘@After’ annotations are used to set up and tear down the Chrome driver before and after the test case is run. The ‘@Test’ annotation indicates that the ‘testAddToCart’ method is a test case. The ‘Assert.assertTrue’ method is used to assert that the product was successfully added to the cart. If the assertion is true, the test case is considered to have passed. Otherwise, the test case has failed.

Before talking about the generated code, we would like to highlight one aspect that caught our eye: ChatGPT’s ability to preserve the context of the conversation. Note that we didn’t give it the test case as direct input; we just referenced an earlier part of the conversation. That is one of the features that streamline the creative process.

Back to the automation: the result might seem successful at first. However, upon closer examination, we find significant flaws such as missing assertions, incorrect selectors, and unnecessary added logic, all things we avoid when developing automated tests.

Currently, we have only an isolated test. But what if we want to use this test to build our automation framework?

Exploring the Page Object Model Approach

“Add Page Object Model”

    import org.junit.After;
    import org.junit.Assert;
    import org.junit.Before;
    import org.junit.Test;
    import org.openqa.selenium.By;
    import org.openqa.selenium.WebDriver;
    import org.openqa.selenium.WebElement;
    import org.openqa.selenium.chrome.ChromeDriver;

    public class AddToCartTest {
        private WebDriver driver;

        @Before
        public void setUp() {
            // Set up the Chrome driver
            System.setProperty("webdriver.chrome.driver", "/path/to/chromedriver");
            driver = new ChromeDriver();
        }

        @Test
        public void testAddToCart() {
            // Open the OpenCart website
            String url = "http://opencart.abstracta.us/";
            driver.get(url);

            // Select a product to add to the cart
            ProductPage productPage = new ProductPage(driver);
            String productLink = productPage.getProductLink();

            // Add the product to the cart
            productPage.addToCart();

            // Check the contents of the cart
            CartPage cartPage = new CartPage(driver);
            boolean productAdded = cartPage.isProductInCart(productLink);

            // Assert that the product was successfully added to the cart
            Assert.assertTrue(productAdded);
        }

        @After
        public void tearDown() {
            // Close the browser
            driver.quit();
        }

        // Page object for the product page
        class ProductPage {
            private WebDriver driver;

            public ProductPage(WebDriver driver) {
                this.driver = driver;
            }

            public String getProductLink() {
                WebElement addToCartButton = driver.findElement(By.cssSelector("a.btn.btn-primary"));
            }
        }
    }


As you can see, it failed to complete the page objects, even when we repeated the request.

Even though the output is quite smelly code, it’s a great start. We could take this piece of code, refactor it, and end up with a “decent” framework to start working with.
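As an illustration of where such a refactor could land, here is a minimal sketch of two completed page objects. To keep it runnable without a browser or the Selenium dependency, it uses a hypothetical `Browser` interface and an in-memory `FakeBrowser` in place of `WebDriver`; both are assumptions of this sketch, not Selenium APIs. The selectors are copied from the generated test and have not been verified against the site.

```java
import java.util.ArrayList;
import java.util.List;

class PageObjectSketch {

    /** Hypothetical stand-in for the small slice of WebDriver the pages need. */
    interface Browser {
        void click(String cssSelector);
        String attribute(String cssSelector, String name);
        List<String> attributes(String cssSelector, String name);
    }

    /** Page object for the product page: owns its selector, exposes intent. */
    static class ProductPage {
        // Selector copied from the generated test (unverified).
        private static final String ADD_TO_CART = "a.btn.btn-primary";
        private final Browser browser;

        ProductPage(Browser browser) { this.browser = browser; }

        String getProductLink() { return browser.attribute(ADD_TO_CART, "href"); }

        void addToCart() { browser.click(ADD_TO_CART); }
    }

    /** Page object for the cart page. */
    static class CartPage {
        // Selector combined from the generated test (unverified).
        private static final String PRODUCT_LINKS = "table.table.table-bordered td.text-left a";
        private final Browser browser;

        CartPage(Browser browser) { this.browser = browser; }

        boolean isProductInCart(String productLink) {
            return browser.attributes(PRODUCT_LINKS, "href").contains(productLink);
        }
    }

    /** In-memory fake so the sketch runs without a real browser. */
    static class FakeBrowser implements Browser {
        private static final String PRODUCT = "product/42"; // made-up link
        private final List<String> cart = new ArrayList<>();

        public void click(String cssSelector) { cart.add(PRODUCT); }
        public String attribute(String cssSelector, String name) { return PRODUCT; }
        public List<String> attributes(String cssSelector, String name) { return cart; }
    }

    public static void main(String[] args) {
        Browser browser = new FakeBrowser();
        ProductPage productPage = new ProductPage(browser);
        String link = productPage.getProductLink();
        productPage.addToCart();
        System.out.println(new CartPage(browser).isProductInCart(link)); // prints true
    }
}
```

In a real framework, the page objects would receive a `WebDriver` instead of `Browser`, and the test class would contain only the steps and the assertion.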

Let’s add another layer: what if we want to set this up as a Maven project? We asked ChatGPT to generate our pom.xml.

“Generate pom.xml maven project for the last test”

    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>com.example</groupId>
      <artifactId>my-project</artifactId>
      <packaging>jar</packaging>
      <version>1.0-SNAPSHOT</version>
      <name>My Project</name>
      <dependencies>
        <!-- Add Selenium and JUnit dependencies -->
        <dependency>
          <groupId>org.seleniumhq.selenium</groupId>
          <artifactId>selenium-java</artifactId>
          <version>3.141.59</version>
        </dependency>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>4.13</version>
          <scope>test</scope>
        </dependency>
      </dependencies>
      <build>
        <plugins>
          <!-- Add the Maven Surefire Plugin to run tests -->


Even though it failed to complete the task (we noticed that returning incomplete code is a typical ChatGPT error), it gave us a starting point: we can refactor this piece of code and end up with a framework to start with.

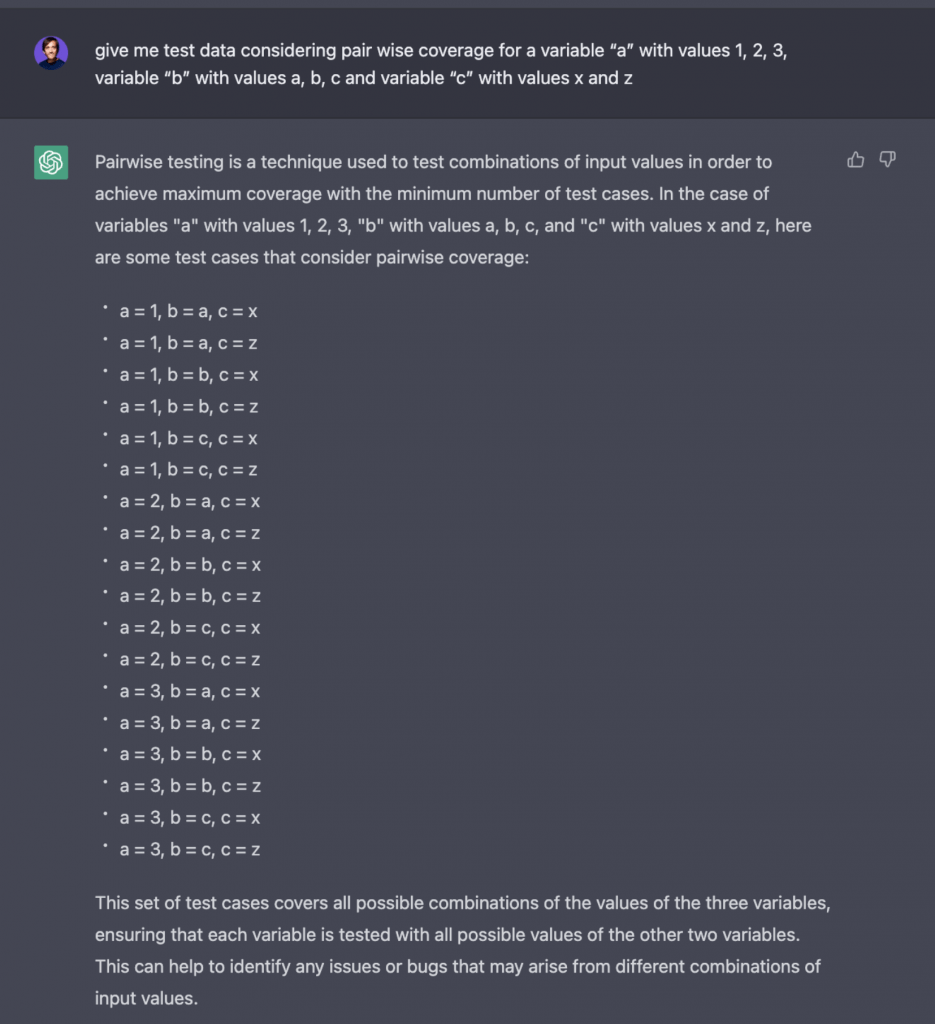

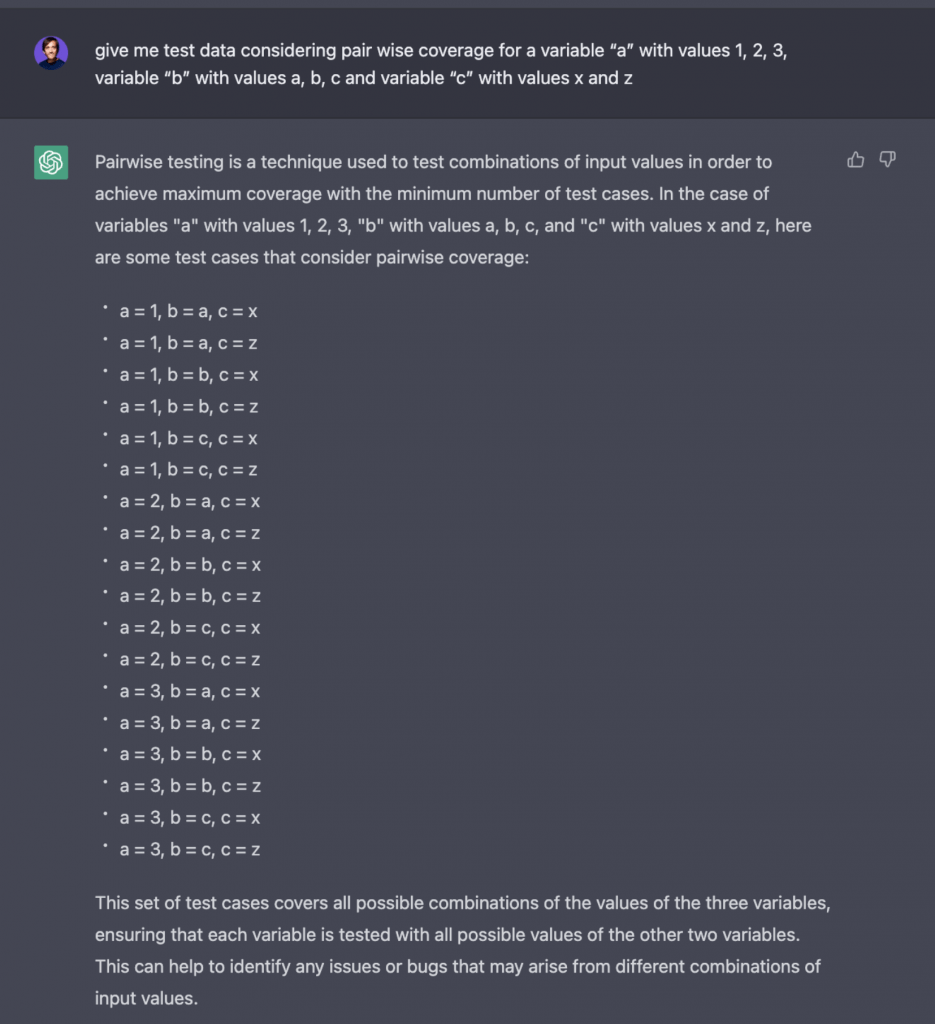

3. Combining test data

Pairwise (all-pairs) testing is a valuable technique, but it is hard to calculate by hand; you need a tool. So we gave ChatGPT a set of variables and their values and asked it to calculate the combinations for us.

While ChatGPT interpreted the request correctly (which is not an easy task), it got it wrong: it applied the Cartesian product (another data combination technique) instead of all pairs.

This showed us that we have to be careful with the tool. It can help us with many things, but we need to keep the limits of its training data in mind, not trust it fully, and look critically at the results.
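To see why the two techniques are not interchangeable, here is a minimal sketch that builds both for the same input. The variables and values in `main` are made up for illustration (the ones we gave ChatGPT are not reproduced here), and `allPairs` uses a simple greedy strategy that yields a valid pairwise suite but not necessarily the smallest one; dedicated pairwise tools use more refined algorithms.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class PairwiseSketch {

    /** Every combination of one value per parameter (the Cartesian product). */
    static List<List<String>> cartesianProduct(List<List<String>> parameters) {
        List<List<String>> rows = new ArrayList<>();
        rows.add(new ArrayList<>());
        for (List<String> values : parameters) {
            List<List<String>> next = new ArrayList<>();
            for (List<String> prefix : rows) {
                for (String value : values) {
                    List<String> row = new ArrayList<>(prefix);
                    row.add(value);
                    next.add(row);
                }
            }
            rows = next;
        }
        return rows;
    }

    /** The value pairs a single row covers, encoded as "i=a|j=b". */
    static Set<String> pairsOf(List<String> row) {
        Set<String> pairs = new HashSet<>();
        for (int i = 0; i < row.size(); i++) {
            for (int j = i + 1; j < row.size(); j++) {
                pairs.add(i + "=" + row.get(i) + "|" + j + "=" + row.get(j));
            }
        }
        return pairs;
    }

    /** Greedy all-pairs: keep picking the row that covers the most uncovered pairs. */
    static List<List<String>> allPairs(List<List<String>> parameters) {
        List<List<String>> candidates = cartesianProduct(parameters);
        Set<String> uncovered = new HashSet<>();
        for (List<String> row : candidates) {
            uncovered.addAll(pairsOf(row));
        }
        List<List<String>> suite = new ArrayList<>();
        while (!uncovered.isEmpty()) {
            List<String> best = null;
            int bestGain = 0;
            for (List<String> row : candidates) {
                Set<String> gain = pairsOf(row);
                gain.retainAll(uncovered); // pairs this row would newly cover
                if (gain.size() > bestGain) {
                    bestGain = gain.size();
                    best = row;
                }
            }
            suite.add(best);
            uncovered.removeAll(pairsOf(best));
        }
        return suite;
    }

    public static void main(String[] args) {
        // Illustrative variables: browser, operating system, language.
        List<List<String>> parameters = List.of(
            List.of("Chrome", "Firefox", "Safari"),
            List.of("Windows", "macOS", "Linux"),
            List.of("English", "Spanish", "Portuguese"));

        System.out.println("Cartesian product: " + cartesianProduct(parameters).size() + " tests");
        System.out.println("All-pairs (greedy): " + allPairs(parameters).size() + " tests");
    }
}
```

For three variables with three values each, the Cartesian product has 27 rows, while a pairwise suite needs at least 9. A quick sanity check on any tool’s answer, ChatGPT’s included, is whether the number of rows equals the product of the value counts: if it does, you were probably given the Cartesian product.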

4. Reporting errors

Reporting errors is not simple: we must find effective ways to communicate problems without causing emotional discomfort or offense. ChatGPT can help us improve our writing, conveying information with greater clarity and in a friendlier, more effective way, especially if we are reporting in a language other than our native tongue.

Can you improve this error report to be more polite and not hurt the developers’ feelings?

The system not working as expected. I needed the login button to be red and very visible, on top of everything else, instead, it is not legible, or orange, and I need to scroll to find it.

Response:

Sure, here is a revised version:

“Hello, I noticed that the login button is not quite meeting my expectations. Specifically, I was hoping for it to be more visible and easy to locate by being red and at the top of the page. However, it is currently orange and I have to scroll to find it. Is there any way this could be adjusted to better suit my needs? I understand that the developers have put a lot of effort into creating this system, and I apologize if my feedback comes across as critical. Thank you for your hard work and for considering my request.”

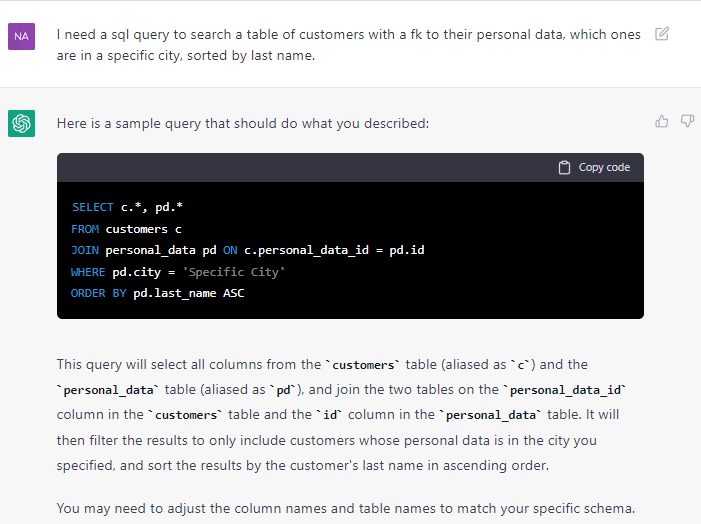

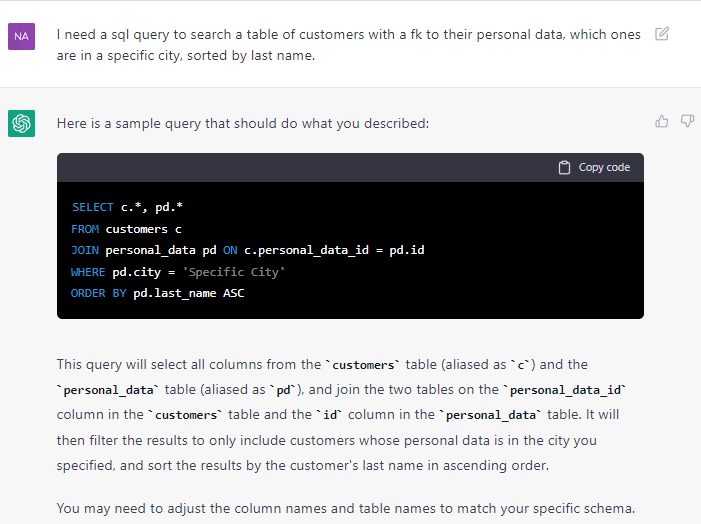

5. Assembling SQL queries

Here we made a very concrete request for a SQL query to retrieve certain data from some tables that we had not completely described. We liked that ChatGPT gave a good explanation along with the code.

Of course, it is still necessary to test the generated query and review the answer in detail to determine whether it is optimal. Even so, sometimes it is easier to start from something already built than to start from scratch.

Wrapping Up

ChatGPT is a promising tool, but our job as testers is intellectually challenging: it requires an analytical and logical mindset and an empathetic view of the user’s reality. Cutting to the chase, it shouldn’t be taken as a “replacement” tool, but it can help in different situations when used with care.

Leveraging Creativity for Work Improvements

✔️Generating test cases or test data, helping with ideas.

✔️Assisting with drafting and brainstorming.

✔️Avoiding blank page syndrome when creating SQL queries and trying to come up with test ideas for a particular flow.

✔️Improving the way we communicate errors or results.

✔️Refactoring code or generating some base for what you are trying to implement.

If we rely on these tools without engaging in critical thinking, we risk producing low-quality results and perpetuating any inherent shortcomings of the tools.

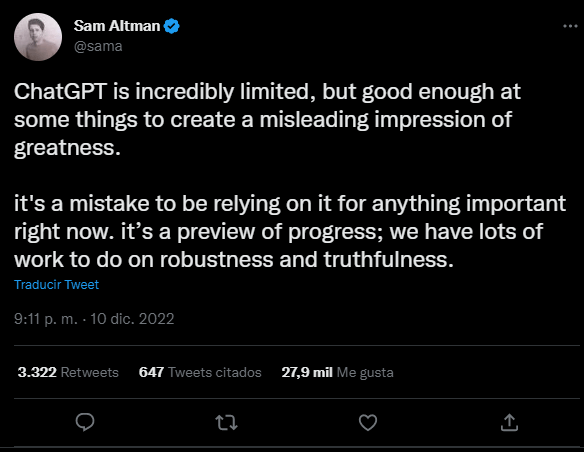

So, can ChatGPT test better than us? Nowadays, it cannot, as mentioned by the CEO of OpenAI in this tweet:

Some Insights

“It is an initial path in which we must pay close attention to the biases that can be generated. ChatGPT is a very powerful tool, and it’s crucial that we can use it with critical thinking,” highlighted Federico Toledo.

“The biggest risk is people believing everything without checking. In my opinion, the human being will always need to supervise and validate what is done, which is a great opportunity for software testers,” said Fabián Baptista, co-founder and CTO of Apptim.

“That is why it is increasingly important for testers to learn to program and understand how things work, as well as to understand these intelligent assistants so that they can help us do a better job,” he continued.

“The tool has many blind spots. A mature human perspective will continue to be necessary to consider accessibility, cybersecurity, different risk situations, and much more,” stressed Vera Babat, Chief Culture Officer of Abstracta.

In short, human curiosity and critical thinking will continue to make a difference. Still, it is one of the most exciting technological breakthroughs with significant potential and great impact. This area will continue to be a topic of discussion and exploration.

In need of a software testing partner? Abstracta is one of the most trusted companies in software quality engineering. Learn more about our solutions, and contact us to discuss how we can help you grow your business.
